DeformConv

Description

Performs deformable convolution as described in https://arxiv.org/abs/1703.06211 and https://arxiv.org/abs/1811.11168. This operator specification supports the general N-D case. Note that most common use cases have 2D or 3D data.

Input parameters

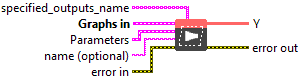

specified_outputs_name : array, this parameter lets you manually assign custom names to the output tensors of a node.

Graphs in : cluster, ONNX model architecture.

X (heterogeneous) – T : object, input data tensor. For 2D image data, it has shape (N, C, H, W) where N is the batch size, C is the number of input channels, and H and W are the height and width. In general, the shape is (N, C, D1, D2, … , Dn) for n-dimensional data, where D1 to Dn are the spatial dimension sizes. Most common use cases have n = 2 or 3.

W (heterogeneous) – T : object, weight tensor that will be used in the convolutions. It has shape (oC, C/group, kH, kW), where oC is the number of output channels and kH and kW are the kernel height and width. For more than 2 dimensions, it has shape (oC, C/group, k1, k2, … , kn).

offset (heterogeneous) – T : object, offset tensor denoting the offset for the sampling locations in the convolution kernel. It has shape (N, offset_group * kH * kW * 2, oH, oW) for 2D data or (N, offset_group * k1 * k2 * … * kn * n, o1, o2, … , on) for nD data. Linear interpolation is used for fractional offset values. Sampling locations outside the padded input tensor give zero.

B (optional, heterogeneous) – T : object, optional 1D bias of length oC to be added to the convolution. Default is a tensor of zeros.

mask (optional, heterogeneous) – T : object, the mask tensor to be applied to each position in the convolution kernel. It has shape (N, offset_group * kH * kW, oH, oW) for 2D data or (N, offset_group * k1 * k2 * … * kn, o1, o2, … , on) for nD data, i.e. one value per kernel position. Default is a tensor of ones.
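The channel counts of offset and mask follow from the kernel shape and offset_group. The short Python sketch below spells out that arithmetic. The helper names `offset_shape` and `mask_shape` are hypothetical and not part of the toolkit, and the mask is assumed to carry one value per kernel position, as in the 2D formula.

```python
from math import prod


def offset_shape(batch, kernel_shape, out_shape, offset_group=1):
    """Expected shape of the offset input (hypothetical helper).

    One displacement per spatial axis is stored for every kernel position,
    hence the extra factor n = len(kernel_shape).
    """
    n_dims = len(kernel_shape)
    channels = offset_group * prod(kernel_shape) * n_dims
    return (batch, channels, *out_shape)


def mask_shape(batch, kernel_shape, out_shape, offset_group=1):
    """Expected shape of the mask input (hypothetical helper).

    Assumption: one scalar per kernel position, matching the 2D formula.
    """
    channels = offset_group * prod(kernel_shape)
    return (batch, channels, *out_shape)


# A 3x3 kernel producing a 5x5 output map, batch size 1.
print(offset_shape(1, (3, 3), (5, 5)))
print(mask_shape(1, (3, 3), (5, 5)))
```

Checking the offset and mask tensors against these shapes before wiring them into the node can catch a wrong offset_group early.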

Parameters : cluster,

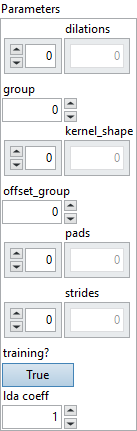

dilations : array, dilation value along each spatial axis of the kernel. Default is 1 along each axis.

Default value “empty”.

group : integer, number of groups the input and output channels, C and oC, are divided into. C and oC must both be divisible by group.

Default value “1”.

kernel_shape : array, shape of the convolution kernel. If not present, it is inferred from the shape of input W.

Default value “empty”.

offset_group : integer, number of groups of offset. C must be divisible by offset_group.

Default value “1”.

pads : array, padding for the beginning and end along each spatial axis. The values represent the number of pixels added to the beginning and end of the corresponding axis and can take any nonnegative value. The format should be as follows: [x1_begin, x2_begin, …, x1_end, x2_end, …], where xi_begin is the number of pixels added at the beginning of axis i and xi_end is the number of pixels added at the end of axis i. Default is 0 along each axis.

Default value “empty”.

strides : array, stride along each spatial axis. Default is 1 along each axis.

Default value “empty”.

training? : boolean, whether the layer is in training mode (can store data for backward).

Default value “True”.

lda coeff : float, defines the coefficient by which the loss derivative will be multiplied before being sent to the previous layer (since during the backward run we go backwards).

Default value “1”.

name (optional) : string, name of the node.
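The defaults and divisibility rules listed for the Parameters cluster can be summarised as a small validation step. The following Python sketch is only an illustration under stated assumptions: `resolve_attributes` is a hypothetical helper, not a toolkit function, and an empty or missing array is treated as "use the default".

```python
def resolve_attributes(x_shape, w_shape, dilations=None, group=1,
                       kernel_shape=None, offset_group=1, pads=None,
                       strides=None):
    """Fill in default attribute values and check channel divisibility.

    x_shape is (N, C, D1, ..., Dn) and w_shape is (oC, C/group, k1, ..., kn).
    Hypothetical helper written for illustration only.
    """
    n_spatial = len(x_shape) - 2
    channels, out_channels = x_shape[1], w_shape[0]

    # C and oC must both be divisible by group.
    if channels % group or out_channels % group:
        raise ValueError("C and oC must both be divisible by group")
    # C must be divisible by offset_group.
    if channels % offset_group:
        raise ValueError("C must be divisible by offset_group")

    return {
        # Default is 1 along each spatial axis.
        "dilations": list(dilations) if dilations else [1] * n_spatial,
        "strides": list(strides) if strides else [1] * n_spatial,
        # Inferred from the spatial part of W when not given.
        "kernel_shape": list(kernel_shape) if kernel_shape else list(w_shape[2:]),
        # Layout: [x1_begin, x2_begin, ..., x1_end, x2_end, ...]; default 0.
        "pads": list(pads) if pads else [0] * (2 * n_spatial),
        "group": group,
        "offset_group": offset_group,
    }


# 4 input channels, 6 output channels, 3x3 kernel, 2 groups.
attrs = resolve_attributes((1, 4, 8, 8), (6, 2, 3, 3), group=2)
print(attrs)
```

For 2D data the pads array therefore reads [top, left, bottom, right].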

Output parameters

Y (heterogeneous) – T : object, output data tensor that contains the result of convolution. It has shape (N, oC, oH, oW) for 2D data or (N, oC, o1, o2, …, on) for nD data.
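To make the relation between X, W, offset, mask and Y concrete, here is a naive pure-Python reference for the 2D case. It is a sketch for understanding, not the toolkit's implementation, and it rests on several assumptions: tensors are nested lists, the output size uses the usual convolution formula, and the offset channels are ordered as (dy, dx) pairs per kernel position within each offset group. That ordering follows a common convention but is not stated on this page. The function names are hypothetical.

```python
import math


def conv_output_size(in_size, kernel, stride=1, pad_begin=0, pad_end=0, dilation=1):
    """Usual convolution output length along one spatial axis."""
    effective_kernel = dilation * (kernel - 1) + 1
    return (in_size + pad_begin + pad_end - effective_kernel) // stride + 1


def bilinear_sample(plane, y, x):
    """Bilinear interpolation of plane[h][w] at (y, x); zero outside the input."""
    height, width = len(plane), len(plane[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yi, wy in ((y0, 1.0 - (y - y0)), (y0 + 1, y - y0)):
        for xi, wx in ((x0, 1.0 - (x - x0)), (x0 + 1, x - x0)):
            if 0 <= yi < height and 0 <= xi < width:
                value += wy * wx * plane[yi][xi]
    return value


def deform_conv2d(X, W, offset, B=None, mask=None, strides=(1, 1),
                  pads=(0, 0, 0, 0), dilations=(1, 1), group=1, offset_group=1):
    """Naive 2D deformable convolution on nested lists (illustrative only)."""
    N, C, H, Wd = len(X), len(X[0]), len(X[0][0]), len(X[0][0][0])
    oC, kH, kW = len(W), len(W[0][0]), len(W[0][0][0])
    # pads layout for 2D: [top, left, bottom, right]
    oH = conv_output_size(H, kH, strides[0], pads[0], pads[2], dilations[0])
    oW = conv_output_size(Wd, kW, strides[1], pads[1], pads[3], dilations[1])
    c_per_group, oc_per_group = C // group, oC // group
    c_per_offset_group = C // offset_group
    Y = [[[[0.0] * oW for _ in range(oH)] for _ in range(oC)] for _ in range(N)]
    for n in range(N):
        for oc in range(oC):
            g = oc // oc_per_group  # convolution group of this output channel
            for oy in range(oH):
                for ox in range(oW):
                    acc = B[oc] if B is not None else 0.0
                    for ci in range(c_per_group):
                        c = g * c_per_group + ci
                        og = c // c_per_offset_group
                        for i in range(kH):
                            for j in range(kW):
                                k = og * kH * kW + i * kW + j
                                # Assumed channel order: dy then dx.
                                dy = offset[n][2 * k][oy][ox]
                                dx = offset[n][2 * k + 1][oy][ox]
                                m = mask[n][k][oy][ox] if mask is not None else 1.0
                                y = oy * strides[0] - pads[0] + i * dilations[0] + dy
                                x = ox * strides[1] - pads[1] + j * dilations[1] + dx
                                acc += W[oc][ci][i][j] * m * bilinear_sample(X[n][c], y, x)
                    Y[n][oc][oy][ox] = acc
    return Y


# With all offsets set to zero the result matches an ordinary convolution.
X = [[[[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]]]
W = [[[[1.0, 1.0], [1.0, 1.0]]]]
zero_offset = [[[[0.0, 0.0], [0.0, 0.0]] for _ in range(8)]]
print(deform_conv2d(X, W, zero_offset))
```

The loops are written for readability rather than speed. Working in unpadded coordinates and returning zero for every neighbour outside the input reproduces the "zero outside the padded tensor" rule, because the padding itself is zero.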

Type Constraints

T in (tensor(bfloat16), tensor(double), tensor(float), tensor(float16)) : Constrain input and output types to float tensors.