Attention

Description

Multi-Head Attention that can be either unidirectional (like GPT-2) or bidirectional (like BERT).

The weights for input projection of Q, K and V are merged. The data is stacked on the second dimension. Its shape is (input_hidden_size, hidden_size + hidden_size + v_hidden_size). Here hidden_size is the hidden dimension of Q and K, and v_hidden_size is that of V.
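As a rough illustration of the merged layout, the sketch below splits a merged weight matrix back into its Q, K and V parts by slicing along the second dimension. It assumes the column order Q, K, V suggested by the shape above, uses plain Python lists, and the helper name `split_qkv_weights` is hypothetical (it is not part of the toolkit).

```python
def split_qkv_weights(weights, hidden_size, v_hidden_size):
    """Split merged weights of shape (input_hidden_size, 2 * hidden_size + v_hidden_size).

    Hypothetical helper for illustration only. Assumes the columns are
    ordered Q, then K, then V. Returns (w_q, w_k, w_v) as lists of rows.
    """
    expected_cols = 2 * hidden_size + v_hidden_size
    for row in weights:
        if len(row) != expected_cols:
            raise ValueError("each row needs hidden_size + hidden_size + v_hidden_size columns")
    w_q = [row[:hidden_size] for row in weights]
    w_k = [row[hidden_size:2 * hidden_size] for row in weights]
    w_v = [row[2 * hidden_size:] for row in weights]
    return w_q, w_k, w_v


# Toy example: input_hidden_size = 2, hidden_size = 2, v_hidden_size = 3.
merged = [
    [1, 2, 3, 4, 5, 6, 7],
    [8, 9, 10, 11, 12, 13, 14],
]
w_q, w_k, w_v = split_qkv_weights(merged, hidden_size=2, v_hidden_size=3)
print(w_q)  # [[1, 2], [8, 9]]
print(w_k)  # [[3, 4], [10, 11]]
print(w_v)  # [[5, 6, 7], [12, 13, 14]]
```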

The mask_index is optional. Besides a raw attention mask with shape (batch_size, total_sequence_length) or (batch_size, sequence_length, total_sequence_length), with value 0 for masked positions and 1 otherwise, two other formats are supported. When the input has right-side padding, mask_index is one-dimensional with shape (batch_size), where each value is the actual sequence length excluding padding. When the input has left-side padding, mask_index has shape (2 * batch_size), where the values are the exclusive end positions followed by the inclusive start positions.
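To make the two index formats concrete, the following sketch expands each of them into an equivalent raw mask of shape (batch_size, total_sequence_length). The function names are hypothetical and the code only mirrors the description above; it is not the toolkit's own implementation.

```python
def mask_from_right_padding(lengths, total_sequence_length):
    """mask_index of shape (batch_size): actual lengths, padding on the right."""
    return [
        [1 if pos < length else 0 for pos in range(total_sequence_length)]
        for length in lengths
    ]


def mask_from_left_padding(mask_index, total_sequence_length):
    """mask_index of shape (2 * batch_size): exclusive ends, then inclusive starts."""
    batch_size = len(mask_index) // 2
    ends, starts = mask_index[:batch_size], mask_index[batch_size:]
    return [
        [1 if start <= pos < end else 0 for pos in range(total_sequence_length)]
        for start, end in zip(starts, ends)
    ]


right = mask_from_right_padding([3, 5], 5)
left = mask_from_left_padding([5, 5, 2, 0], 5)
print(right)  # [[1, 1, 1, 0, 0], [1, 1, 1, 1, 1]]
print(left)   # [[0, 0, 1, 1, 1], [1, 1, 1, 1, 1]]
```

In both results, 0 marks a masked (padding) position and 1 marks a real token, matching the raw mask convention.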

When unidirectional is 1, each token only attends to previous tokens.
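A minimal sketch of the unidirectional case is shown below. It builds the 0/1 pattern of allowed positions, assuming (as is usual for GPT-2 style masking) that a token may also attend to itself and to any cached past positions. The helper `causal_mask` is hypothetical.

```python
def causal_mask(sequence_length, past_sequence_length=0):
    """Row i lists which key positions query i may attend to (1) or not (0).

    Assumption: query i sees every past position, all earlier tokens and itself.
    """
    total = past_sequence_length + sequence_length
    return [
        [1 if key_pos <= past_sequence_length + query_pos else 0 for key_pos in range(total)]
        for query_pos in range(sequence_length)
    ]


for row in causal_mask(3):
    print(row)
# [1, 0, 0]
# [1, 1, 0]
# [1, 1, 1]

print(causal_mask(2, past_sequence_length=2))
# [[1, 1, 1, 0], [1, 1, 1, 1]]
```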

Both past and present states are optional, but they must be used together: using only one of them is not allowed. The qkv_hidden_sizes parameter is required only when K and V have different hidden sizes.

When there is a past state, the hidden dimensions of Q, K and V must be the same.

The total_sequence_length is past_sequence_length + kv_sequence_length. Here kv_sequence_length is the length of K or V. For self attention, kv_sequence_length equals sequence_length (the sequence length of Q). For cross attention, the query and key might have different lengths.
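The length bookkeeping can be followed with a small worked example. The sketch below assumes a typical self-attention decoding loop in which the present state of one step is fed back as the past state of the next; the loop and the helper name are illustrative assumptions, not taken from the toolkit.

```python
def total_sequence_length(past_sequence_length, kv_sequence_length):
    """total_sequence_length = past_sequence_length + kv_sequence_length."""
    return past_sequence_length + kv_sequence_length


past = 0
history = []
# A 4-token prompt, followed by one new token per step.
for kv_len in [4, 1, 1]:
    total = total_sequence_length(past, kv_len)
    history.append((past, kv_len, total))
    past = total  # assumed: present state becomes the next step's past state

print(history)  # [(0, 4, 4), (4, 1, 5), (5, 1, 6)]
```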

Input parameters

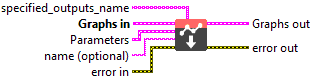

specified_outputs_name : array, this parameter lets you manually assign custom names to the output tensors of a node.

Graphs in : cluster, ONNX model architecture.

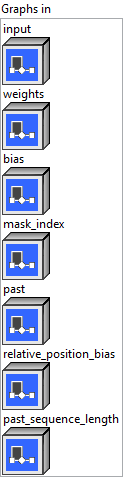

input – T : object, input tensor with shape (batch_size, sequence_length, input_hidden_size).

weights – T : object, merged Q/K/V weights with shape (input_hidden_size, hidden_size + hidden_size + v_hidden_size).

bias – T : object, bias tensor with shape (hidden_size + hidden_size + v_hidden_size) for input projection.

mask_index – M : object, attention mask with shape (batch_size, 1, max_sequence_length, max_sequence_length), (batch_size, total_sequence_length) or (batch_size, sequence_length, total_sequence_length), or index with shape (batch_size) or (2 * batch_size) or (3 * batch_size + 2).

past – T : object, past state for key and value with shape (2, batch_size, num_heads, past_sequence_length, head_size). When past_present_share_buffer is set, its shape is (2, batch_size, num_heads, max_sequence_length, head_size).

relative_position_bias – T : object, additional bias added to QxK’ with shape (batch_size or 1, num_heads or 1, sequence_length, total_sequence_length).

past_sequence_length – M : object, when past_present_share_buffer is used, it is required to specify past_sequence_length (could be 0).

Parameters : cluster,

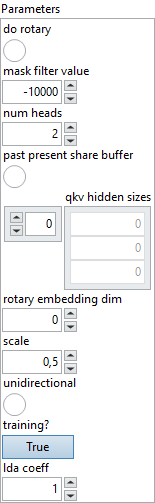

do rotary : boolean, whether to use rotary position embedding.

Default value “False”.

mask filter value : float, the value to be filled in the attention mask.

Default value “-10000”.

num heads : integer, number of attention heads.

Default value “2”.

past present share buffer : boolean, when set, the corresponding past and present are the same tensor, with size (2, batch_size, num_heads, max_sequence_length, head_size).

Default value “False”.

qkv hidden sizes : array, hidden dimensions of Q, K and V: hidden_size, hidden_size and v_hidden_size.

Default value “empty”.

rotary embedding dim : integer, dimension of rotary embedding. Limited to 32, 64 or 128.

Default value “0”.

scale : float, custom scale will be used if specified.

Default value “0.5”.

unidirectional : boolean, whether every token can only attend to previous tokens.

Default value “False”.

training? : boolean, whether the layer is in training mode (can store data for backward).

Default value “True”.

lda coeff : float, coefficient by which the loss derivative is multiplied before being sent to the previous layer during the backward pass.

Default value “1”.

name (optional) : string, name of the node.
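To show how the mask filter value can interact with the attention scores, here is a hedged sketch of a masked softmax over a single row of scores. It assumes that masked positions (mask value 0) receive the mask filter value as an additive bias before the softmax; this is a common convention, not a confirmed description of the node's internals, and `masked_softmax` is a hypothetical helper.

```python
import math


def masked_softmax(scores, mask, mask_filter_value=-10000.0):
    """Softmax over one row of attention scores.

    Assumption: positions with mask == 0 get mask_filter_value added to
    their score, which drives their probability towards zero.
    """
    biased = [s + (0.0 if m == 1 else mask_filter_value) for s, m in zip(scores, mask)]
    peak = max(biased)  # subtract the maximum for numerical stability
    exps = [math.exp(b - peak) for b in biased]
    total = sum(exps)
    return [e / total for e in exps]


probs = masked_softmax([0.5, 1.0, 2.0], [1, 1, 0])
print([round(p, 4) for p in probs])  # [0.3775, 0.6225, 0.0]
```

The third position has the highest raw score but is masked, so its weight is effectively zero and the remaining probability is shared by the first two positions.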

Output parameters

Graphs out : cluster, ONNX model architecture.

output – T : object, 3D output tensor with shape (batch_size, sequence_length, v_hidden_size).

present – T : object, past state for key and value with shape (2, batch_size, num_heads, total_sequence_length, head_size). If past_present_share_buffer is set, its shape is (2, batch_size, num_heads, max_sequence_length, head_size), while effective_seq_length = (past_sequence_length + kv_sequence_length).
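The output shapes described above can be summarised in a few lines. The sketch below is a shape calculator only, with a hypothetical helper name; passing `max_sequence_length` models the case where past_present_share_buffer is set.

```python
def attention_output_shapes(batch_size, sequence_length, num_heads, head_size,
                            v_hidden_size, total_sequence_length,
                            max_sequence_length=None):
    """Return (output_shape, present_shape) as tuples.

    Hypothetical helper. max_sequence_length stands in for the shared
    past/present buffer; leave it as None when the buffer is not shared.
    """
    output_shape = (batch_size, sequence_length, v_hidden_size)
    cache_length = total_sequence_length if max_sequence_length is None else max_sequence_length
    present_shape = (2, batch_size, num_heads, cache_length, head_size)
    return output_shape, present_shape


separate = attention_output_shapes(2, 1, 4, 16, 64, total_sequence_length=6)
shared = attention_output_shapes(2, 1, 4, 16, 64, total_sequence_length=6,
                                 max_sequence_length=128)
print(separate)  # ((2, 1, 64), (2, 2, 4, 6, 16))
print(shared)    # ((2, 1, 64), (2, 2, 4, 128, 16))
```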

Type Constraints

T in (tensor(float), tensor(float16)) : Constrain input and output types to float tensors.

M in (tensor(int32)) : Constrain mask index to integer types.