All release notes are available at this page.

Release Notes V1.5.0

Date of release: February 2024

General Notes:

- Optimization for GPU execution in Load and Save functionalities.

- Revised memory management to prevent leaks in tensor processing.

- Enhanced metric accuracy and support for various data types.

- Introduced new GPU compatible layers and activation functions.

Bug Fixes:

- Fixed multi-input layer concatenation issue affecting predecessors.

- Resolved an issue where multiple layers sharing the same layer_name caused conflicts during the creation of the complete graph. An error message has been introduced to alert the user of the conflict and its origin, thereby preventing the ambiguity that previously led to graph compilation errors. This update ensures unique identification for each layer, facilitating a smoother graph construction process.

- HDF5 Loading Issue: Addressed a loading issue related to optimizer parameters when using TensorFlow. The core of the problem was the absence of a shift register within the loop, which resulted in parameters being processed but not properly recorded. This fix ensures that all parameters are now correctly loaded and stored during the execution cycle.

- Accuracy Metric Calculation Update: Refined the accuracy metric computation method. Previously, the calculation relied on counts of True Positives (TP), True Negatives (TN), False Negatives (FN), and False Positives (FP). The updated approach directly compares the predicted values (y_pred) to the true values (y_true), tallying the number of correct predictions. This count is then divided by the total number of predictions to yield the accuracy rate, streamlining the process and aligning it more closely with standard accuracy definitions.

- Binary IoU Metric Enhancement: We have revised the Binary Intersection over Union (IoU) metric computation to include a threshold comparison. Previously, y_pred was not compared against a threshold to classify values as 0 (below threshold) or 1 (above threshold). This comparison has now been integrated, and it is also applied to y_true for consistency and ease of use. Users should be aware that, with these adjustments, the resulting values will differ from those provided by Keras.

- GPU Softmax Operation Update (‘channels_last’ Configuration): Previously, when attempting to perform a softmax operation on the last axis (n-1) using a GPU, the operation was incorrectly applied to axis 1. This issue has been resolved. However, while softmax now operates correctly on the last axis, applying softmax on any axis other than 1 or n-1 still does not work as intended. Further adjustments will be required to extend support for softmax operations on other axes.

- General Bug Fixes Across Layers: Issues affecting multiple layers within the framework have been resolved. These corrections span a variety of functionalities and improve the stability and reliability of models, so that each layer performs as expected without errors.

- Fix for Setting Execution Type Before Adding Layers to the Graph: Resolved an issue where setting the execution type (set_exec) for a model and then adding further layers left the newly added layers not fully honoring the specified execution type. This discrepancy affected the initialization of weights and momentums, leading to inconsistencies during model compilation. Layers added after setting the execution type now correctly adopt the established execution type, ensuring uniform behavior across the entire model and preventing initialization errors.
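
The accuracy and Binary IoU changes described above can be illustrated with a minimal Python sketch. This is not HAIBAL code (HAIBAL is a LabVIEW library); the function names `accuracy`, `binarize`, and `binary_iou` are hypothetical, and several details are assumptions not stated in these notes: the default threshold of 0.5, the choice to map a value exactly equal to the threshold to 0, computing IoU for the positive class only, and returning 0.0 when the union is empty.

```python
from typing import List, Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Count predictions equal to the true value, divided by the total."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if len(y_true) == 0:
        raise ValueError("inputs must not be empty")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def binarize(values: Sequence[float], threshold: float) -> List[int]:
    """Map each value to 1 if above the threshold, otherwise 0.

    Assumption: a value exactly equal to the threshold maps to 0.
    """
    return [1 if v > threshold else 0 for v in values]


def binary_iou(
    y_true: Sequence[float],
    y_pred: Sequence[float],
    threshold: float = 0.5,  # assumed default, not taken from the notes
) -> float:
    """IoU of the positive class after thresholding BOTH inputs."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    t = binarize(y_true, threshold)
    p = binarize(y_pred, threshold)
    intersection = sum(1 for a, b in zip(t, p) if a == 1 and b == 1)
    union = sum(1 for a, b in zip(t, p) if a == 1 or b == 1)
    # Assumption: report 0.0 when neither input contains a positive.
    return intersection / union if union else 0.0
```

For example, `accuracy([1, 0, 1, 1], [1, 0, 0, 1])` counts three matching predictions out of four and returns 0.75. Because `binary_iou` thresholds y_true as well as y_pred, its results can differ from an implementation that thresholds only y_pred.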

Improvements:

- GPU Parallelism Enhancement: New VIs for Graph Management

To manage GPU parallelism, we have introduced two new VIs: fork_graph and create_shared. These VIs enable the generation of multiple versions of the same model, allowing them to be executed in parallel. This feature significantly improves the utilization of GPU resources and enhances the efficiency of parallel computing tasks.

- Optimizer Momentum Customization

The momentum settings of optimizers can now be retrieved and set for each individual layer. This enhancement provides users with greater control over the training process, allowing for fine-tuning of the optimizer’s momentum to achieve optimal convergence rates.

- H5 File Load/Save Update: The load and save functionality for H5 files has been updated. It now supports H5 files from HAIBAL 1, HAIBAL 2, and TensorFlow, with improved handling for HAIBAL 2 and TensorFlow that includes a more comprehensive set of parameters during the saving and loading processes.

- GPU-Accelerated Forward/Loss Input Processing

We have streamlined the process of inputting data into the Forward/Loss functions when using the Model with GPU acceleration. It is now possible to directly send CUDA pointers within the Forward or Loss functions without the need to convert inputs into LabVIEW arrays first. This optimization reduces data preprocessing overhead and further leverages the GPU’s capabilities for improved performance.

- GPU Convolution Activation Function Fix: Addressed an issue where the ELU, Sigmoid, Swish, and TanH activation functions were not operating correctly on GPU. These functions are now fully functional when utilizing GPU acceleration, ensuring that users can employ a wider range of activation functions for their deep learning models with the expected performance benefits of GPU computation.

- Metrics Update for GPU Compatibility: Following the modification of the loss function, the metrics ceased to function correctly in GPU environments. To address this, GPU-compatible versions of the metrics have been developed and are now being implemented. This ensures that the metrics operate reliably on both CPU and GPU setups.

- Metrics Update (True Positives, True Negatives, False Positives, and False Negatives): We have updated the comparison logic for y_true to streamline usability. Please note that with this update, the values for True Positives, True Negatives, False Positives, and False Negatives will no longer match those from Keras directly. This change is designed to make the metrics more intuitive to use within our system.

- Enhancements to AddToGraph/Define Functionality: Layers that contain internal classes have been updated to include two versions of the add_to_graph method:

  - Simplified Version: Mirroring the add_to_graph approach from HAIBAL 1, this version uses enumerators to select parameters, streamlining the process for users who prefer straightforward configuration.

  - Advanced Version: A more complex version has been introduced, offering the ability to parameterize internal classes such as Optimizers, Initializers, and Regularizers. This version caters to users requiring deeper customization and precise control over the configuration of their models.

These enhancements provide flexibility in defining the behavior of layers, accommodating both users seeking simplicity and those needing detailed customization options.

LabVIEW compatibility versions

LabVIEW64 2020SP1, LabVIEW64 2021SP1, LabVIEW64 2023Q3, LabVIEW64 2024Q1 are supported with this release.

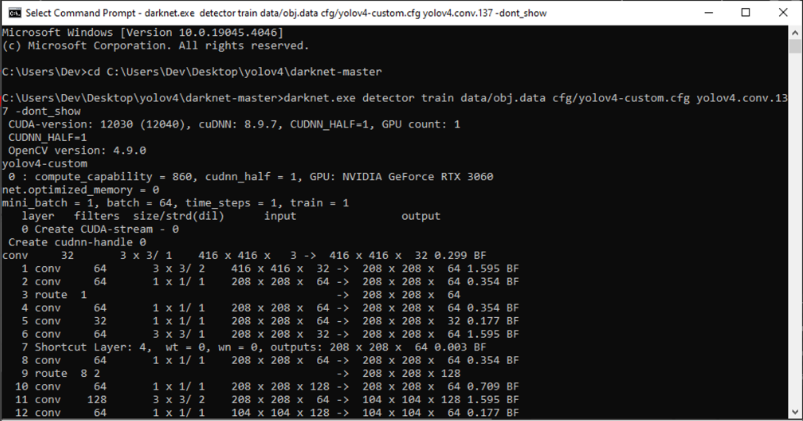

Version of CUDA API

CUDA 12.3 and cuDNN 8.9.0

Use GIM, our unified installation platform, to make HAIBAL work properly with CUDA (do not install CUDA yourself; our tool is here to simplify your life!).