SparseAttention

Description

Block Sparse Attention used in Phi-3-small (https://arxiv.org/pdf/2404.14219). It is inspired by Sparse Transformers (https://arxiv.org/pdf/1904.10509) and BigBird (https://arxiv.org/pdf/2007.14062).

block_mask can be used to configure the sparse layout for different heads. When the number of sparse layouts is 1, all heads share the same sparse layout. Otherwise, the layouts are assigned to heads cyclically. For example, given 4 layouts (S0, S1, S2, S3), 8 heads will have the layouts (S0, S1, S2, S3, S0, S1, S2, S3).
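The cyclic assignment described above can be sketched as a modulo over the head index. This is only an illustration; the helper name `layout_for_head` is hypothetical and not part of the operator.

```python
def layout_for_head(head_index: int, num_layouts: int) -> int:
    """Return the index of the sparse layout used by a given head.

    Hypothetical helper: layouts are reused cyclically across heads.
    """
    return head_index % num_layouts


num_heads, num_layouts = 8, 4
assignment = [f"S{layout_for_head(h, num_layouts)}" for h in range(num_heads)]
print(assignment)
# ['S0', 'S1', 'S2', 'S3', 'S0', 'S1', 'S2', 'S3']
```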

block_row_indices and block_col_indices are the CSR representation of the block mask. block_col_indices may contain padding on the right side when different layouts have different numbers of non-zeros in the block mask.

An example of a block mask with 2 layouts, where each layout is 4 x 4 blocks:

[[[1, 0, 0, 0], [1, 1, 0, 0], [0, 1, 1, 0], [0, 1, 1, 1]],

[[1, 0, 0, 0], [1, 1, 0, 0], [1, 1, 1, 0], [1, 0, 1, 1]]]

The corresponding CSR format:

block_col_indices = [[0, 0, 1, 1, 2, 1, 2, 3, -1], [0, 0, 1, 0, 1, 2, 0, 2, 3]]

block_row_indices = [[0, 1, 3, 5, 8], [0, 1, 3, 6, 9]]
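As a minimal sketch of how such a block mask maps to the CSR arrays, the following plain-Python function builds block_row_indices and block_col_indices and pads shorter layouts with -1, as in the example above. The function name `block_mask_to_csr` is an assumption made for illustration; it is not an API of the toolkit.

```python
from typing import List, Tuple


def block_mask_to_csr(
    block_mask: List[List[List[int]]],
) -> Tuple[List[List[int]], List[List[int]]]:
    """Convert a (num_layout, rows, cols) block mask to padded CSR arrays."""
    row_indices, col_indices = [], []
    for layout in block_mask:
        rows, cols = [0], []
        for row in layout:
            # Column positions of the non-zero blocks in this row.
            cols.extend(c for c, v in enumerate(row) if v != 0)
            # Running count of non-zeros marks where the next row starts.
            rows.append(len(cols))
        row_indices.append(rows)
        col_indices.append(cols)
    # Pad on the right with -1 so every layout has max_nnz_blocks entries.
    max_nnz = max(len(c) for c in col_indices)
    col_indices = [c + [-1] * (max_nnz - len(c)) for c in col_indices]
    return row_indices, col_indices


block_mask = [
    [[1, 0, 0, 0], [1, 1, 0, 0], [0, 1, 1, 0], [0, 1, 1, 1]],
    [[1, 0, 0, 0], [1, 1, 0, 0], [1, 1, 1, 0], [1, 0, 1, 1]],
]
block_row_indices, block_col_indices = block_mask_to_csr(block_mask)
print(block_col_indices)
# [[0, 0, 1, 1, 2, 1, 2, 3, -1], [0, 0, 1, 0, 1, 2, 0, 2, 3]]
print(block_row_indices)
# [[0, 1, 3, 5, 8], [0, 1, 3, 6, 9]]
```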

When do_rotary is True, cos_cache and sin_cache are required. Note that the maximum sequence length supported by cos or sin cache can be different from the maximum sequence length used by kv cache.
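For orientation, here is a rough sketch of how cos and sin caches with shape (max_rotary_sequence_length, head_size / 2) are commonly built for rotary position embedding. The frequency formula and the base of 10000 follow the usual rotary convention and are assumptions; this page does not specify them, and the function name `build_rotary_cache` is hypothetical.

```python
import math
from typing import List, Tuple


def build_rotary_cache(
    max_rotary_sequence_length: int,
    head_size: int,
    base: float = 10000.0,  # assumed value, not given in this page
) -> Tuple[List[List[float]], List[List[float]]]:
    """Return (cos_cache, sin_cache), each of shape (max_len, head_size // 2)."""
    half = head_size // 2
    # One rotation frequency per pair of channels (assumed convention).
    inv_freq = [base ** (-2.0 * i / head_size) for i in range(half)]
    cos_cache = [
        [math.cos(pos * f) for f in inv_freq]
        for pos in range(max_rotary_sequence_length)
    ]
    sin_cache = [
        [math.sin(pos * f) for f in inv_freq]
        for pos in range(max_rotary_sequence_length)
    ]
    return cos_cache, sin_cache


cos_cache, sin_cache = build_rotary_cache(max_rotary_sequence_length=16, head_size=8)
print(len(cos_cache), len(cos_cache[0]))  # 16 4
```

Because the rotary caches are indexed by position only, their length can be chosen independently of the kv cache length, which is consistent with the note above.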

Only unidirectional attention is supported, with the cache of past keys and values stored in linear buffers.

For performance, past_key and present_key share the same memory buffer, as do past_value and present_value.

Input parameters

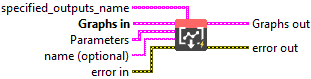

specified_outputs_name : array, this parameter lets you manually assign custom names to the output tensors of a node.

Graphs in : cluster, ONNX model architecture.

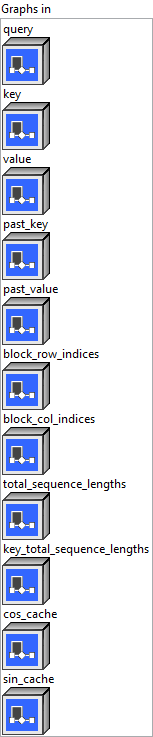

query (heterogeneous) – T : object, query with shape (batch_size, sequence_length, num_heads * head_size), or packed QKV with shape (batch_size, sequence_length, d), where d is (num_heads + 2 * kv_num_heads) * head_size.

key (optional, heterogeneous) – T : object, key with shape (batch_size, sequence_length, kv_num_heads * head_size).

value (optional, heterogeneous) – T : object, value with shape (batch_size, sequence_length, kv_num_heads * head_size).

past_key (heterogeneous) – T : object, key cache with shape (batch_size, kv_num_heads, max_cache_sequence_length, head_size).

past_value (heterogeneous) – T : object, value cache with shape (batch_size, kv_num_heads, max_cache_sequence_length, head_size).

block_row_indices (heterogeneous) – M : object, the row indices of the CSR format of the block mask, with shape (num_layout, max_blocks + 1). num_heads is divisible by num_layout, and max_blocks is max_sequence_length / sparse_block_size.

block_col_indices (heterogeneous) – M : object, the column indices of the CSR format of the block mask, with shape (num_layout, max_nnz_blocks). max_nnz_blocks is the maximum number of non-zeros per layout in the block mask.

total_sequence_lengths (heterogeneous) – M : object, scalar tensor holding the maximum total sequence length (past_sequence_length + sequence_length) among keys.

key_total_sequence_lengths (heterogeneous) – M : object, 1D tensor with shape (batch_size), where each value is the total sequence length of the key, excluding padding.

cos_cache (optional, heterogeneous) – T : object, cos cache of rotary with shape (max_rotary_sequence_length, head_size / 2).

sin_cache (optional, heterogeneous) – T : object, sin cache of rotary with shape (max_rotary_sequence_length, head_size / 2).
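To make the packed QKV shape concrete, the sketch below computes the last-dimension width d and the slice boundaries of the query, key and value parts. The Q, K, V ordering inside the packed tensor is an assumption made for illustration (it is not stated on this page), and `packed_qkv_slices` is a hypothetical helper.

```python
from typing import Tuple

Slice = Tuple[int, int]


def packed_qkv_slices(
    num_heads: int, kv_num_heads: int, head_size: int
) -> Tuple[Slice, Slice, Slice]:
    """Return (start, end) offsets of Q, K and V along the last dimension.

    Assumes the packed tensor is laid out as [Q | K | V].
    """
    q_end = num_heads * head_size
    k_end = q_end + kv_num_heads * head_size
    v_end = k_end + kv_num_heads * head_size
    return (0, q_end), (q_end, k_end), (k_end, v_end)


q_slice, k_slice, v_slice = packed_qkv_slices(num_heads=32, kv_num_heads=8, head_size=128)
d = v_slice[1]  # equals (num_heads + 2 * kv_num_heads) * head_size
print(q_slice, k_slice, v_slice, d)
# (0, 4096) (4096, 5120) (5120, 6144) 6144
```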

Parameters : cluster,

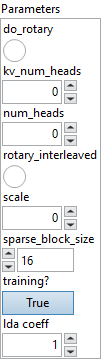

do rotary : boolean, whether to use rotary position embedding.

Default value “False”.

kv_num_heads : integer, number of attention heads for key and value.

Default value “0”.

num_heads : integer, number of attention heads for query.

Default value “0”.

rotary_interleaved : boolean, whether rotary uses the interleaved pattern.

Default value “False”.

scale : float, scaling factor applied prior to softmax. The default value is 1/sqrt(head_size).

Default value “0”.

sparse_block_size : enum, number of tokens per sparse block.

Default value “16”.

training? : boolean, whether the layer is in training mode (can store data for backward).

Default value “True”.

lda coeff : float, defines the coefficient by which the loss derivative is multiplied before being sent to the previous layer (since the backward run goes through the layers in reverse order).

Default value “1”.

name (optional) : string, name of the node.

Output parameters

Graphs out : cluster, ONNX model architecture.

output (heterogeneous) – T : object, 3D output tensor with shape (batch_size, sequence_length, num_heads * head_size).

present_key (heterogeneous) – T : object, updated key cache with shape (batch_size, kv_num_heads, max_cache_sequence_length, head_size).

present_value (heterogeneous) – T : object, updated value cache with shape (batch_size, kv_num_heads, max_cache_sequence_length, head_size).

Type Constraints

T in (tensor(float), tensor(float16), tensor(bfloat16)) : Constrain input and output to float tensors.

M in (tensor(int32)) : Constrain integer type.