- This topic has 6 replies, 2 voices, and was last updated February 3.

Hi, Youssef.

I hope you are doing well. I had some time to compare performance between v1.1.0 and v1.2.0 on the GPU, and something strange happened.

Before you published v1.2.0 with the example for running on the GPU, I had managed to figure out how to do it with v1.1.0, and I got the expected results: a step_time of 15-30 ms on the GPU and 80-90 ms on the CPU.

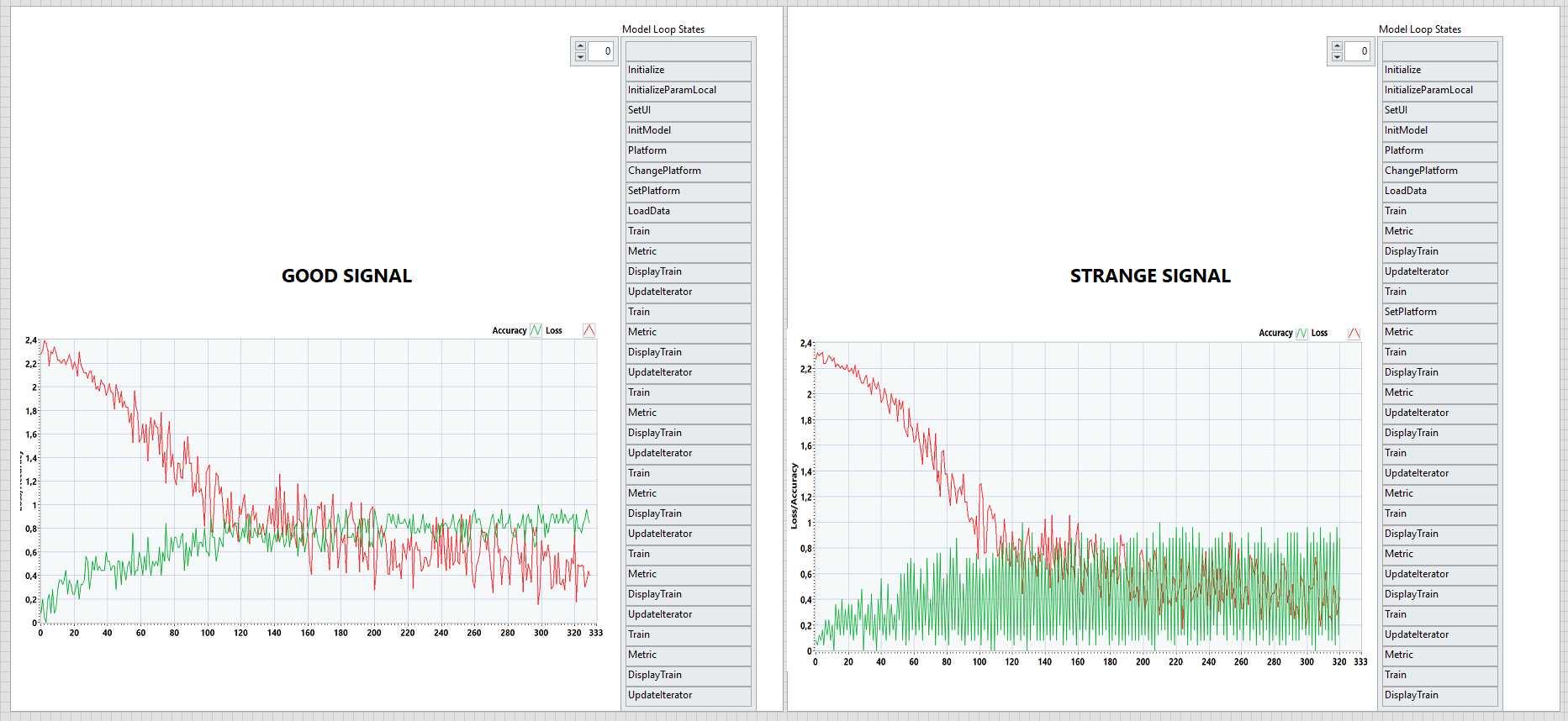

Both the GPU and CPU runs converged as expected (accuracy increased and loss decreased).

After upgrading to v1.2.0, the cycle time was more or less the same, but in the attached example with the GPU activated, the loss function did not converge.

Since the only difference is the GPU/CPU setting, I am not sending you the VI. It is the same as the example, with just the GPU/CPU switch changed to visible.

I also have a screen recording. Let me know if you would like to get it; it is too big to attach to an email.

Best regards and good luck

Vlady Kaplan
