- This topic has 3 replies, 2 voices, and was last updated February 28 by .

Dear Youssef,

In the meantime, I would like to work a bit on other UNet architectures: the U2Net you recommended, UNet++, or, for example, Attention UNet.

I think Attention UNet is probably the easiest to implement first, so that is where I started.

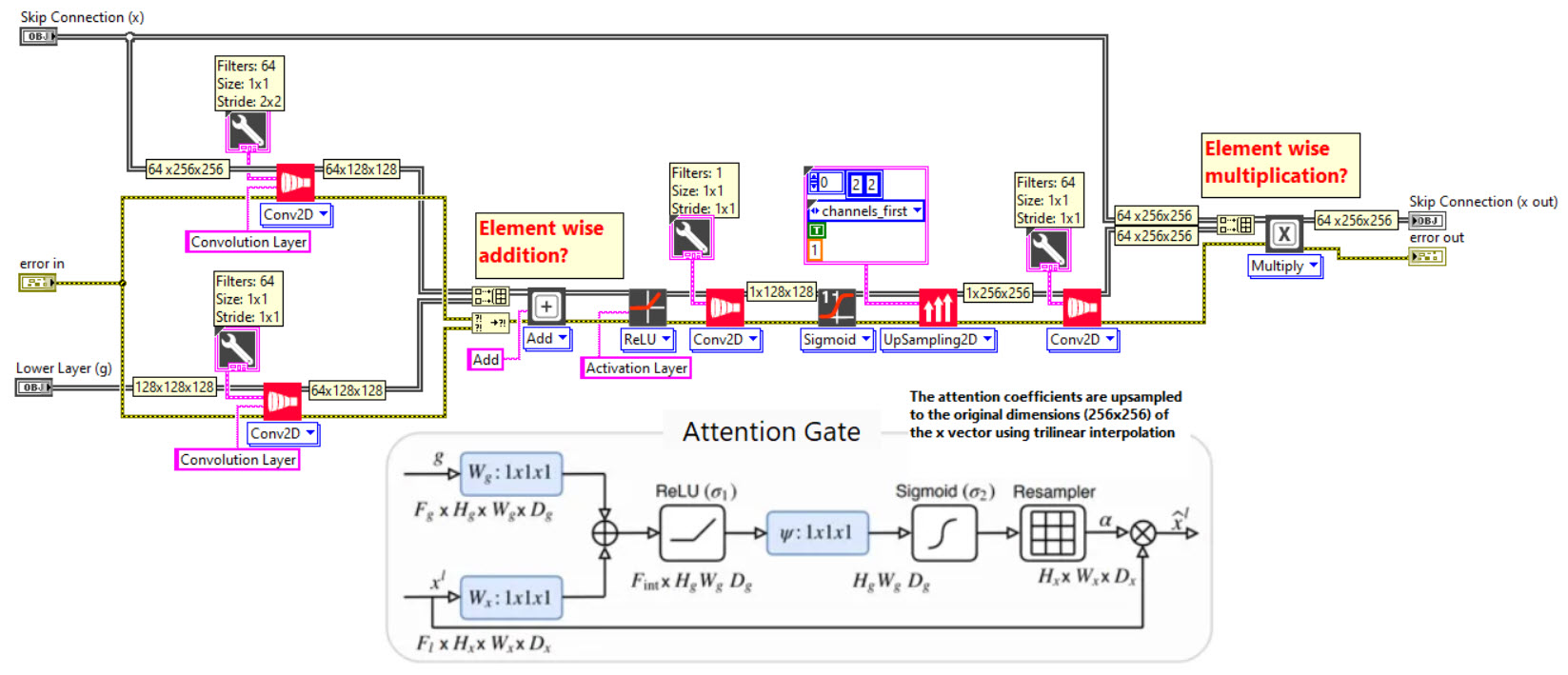

I just have a quick question, if you have a little time in the next few days. I tried to build an Attention Gate for this, following this article: https://towardsdatascience.com/a-detailed-explanation-of-the-attention-u-net-b371a5590831

My question is: is that okay, or is it better (or easier) to use the Haibal Multi Input “Attention” or “Additive Attention”?
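
For context, here is a rough sketch of what an additive attention gate computes, as it is commonly described for Attention UNet. It is written in plain Python so the arithmetic is visible. It is not the Haibal API and not necessarily identical to the graph in the screenshot. All names (`attention_gate_pixel`, `W_x`, `W_g`, `psi`, and so on) are made up for illustration, and it assumes the gating signal `g` has already been resampled to the same spatial grid as the skip features `x`. The 1x1 convolutions are written as per-pixel matrix multiplications.

```python
import math


def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * a for w, a in zip(row, v)) for row in W]


def attention_gate_pixel(x, g, W_x, W_g, b, psi, b_psi):
    """Additive attention gate at a single spatial position.

    x      : skip-connection features, F_l channels
    g      : gating features, F_g channels (assumed already on x's grid)
    W_x    : 1x1 conv weights for x, shape (F_int, F_l)
    W_g    : 1x1 conv weights for g, shape (F_int, F_g)
    b      : bias, F_int values
    psi    : 1x1 conv weights mapping F_int channels to one score
    b_psi  : scalar bias for the score
    Returns (gated features, attention coefficient alpha).
    """
    theta = matvec(W_x, x)
    phi = matvec(W_g, g)
    # "Additive" part: sum both projections, then apply ReLU.
    hidden = [max(0.0, t + p + bi) for t, p, bi in zip(theta, phi, b)]
    score = sum(w * h for w, h in zip(psi, hidden)) + b_psi
    alpha = 1.0 / (1.0 + math.exp(-score))  # sigmoid, so 0 < alpha < 1
    # Scale every channel of the skip features by the same coefficient.
    return [alpha * xi for xi in x], alpha


def attention_gate(x_map, g_map, W_x, W_g, b, psi, b_psi):
    """Apply the gate at every position of two H x W maps of channel vectors."""
    return [
        [attention_gate_pixel(x, g, W_x, W_g, b, psi, b_psi)[0]
         for x, g in zip(x_row, g_row)]
        for x_row, g_row in zip(x_map, g_map)
    ]
```

The gated output has the same shape as `x` and would be concatenated with the upsampled decoder features in place of the raw skip connection. Whether the Haibal “Additive Attention” layer computes the same thing is exactly what I am unsure about.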

Thank you for your patience.
