Introduction

In the dynamic world of deep learning, Keras, TensorFlow, PyTorch, and HAIBAL stand as prominent contenders, each with its own contributions. Keras, a user-friendly neural network API in Python, excels at rapid prototyping; TensorFlow, created by Google Brain, is known for scalability and performance; PyTorch, from Facebook’s AI Research lab, offers dynamic computation graphs. Alongside these major players, HAIBAL is a newcomer: a deep learning toolkit built for the LabVIEW programming language, bridging the gap between deep learning and LabVIEW applications. This article compares the strengths, usability, and performance of each framework to help readers find the right fit for their deep learning projects, and looks at HAIBAL’s potential to change how deep learning is done in the LabVIEW ecosystem.

Methodology of Comparison: “Seven-Point Comparative Analysis of Frameworks”

We compare Keras, TensorFlow, HAIBAL, and PyTorch across seven aspects: Level API, Speed, Architecture, Datasets, Debugging, Ease of Development, and Ease of Integration into complete systems. The aim is to give you a clear picture of each framework’s attributes so that you can make an informed decision for your deep learning projects.

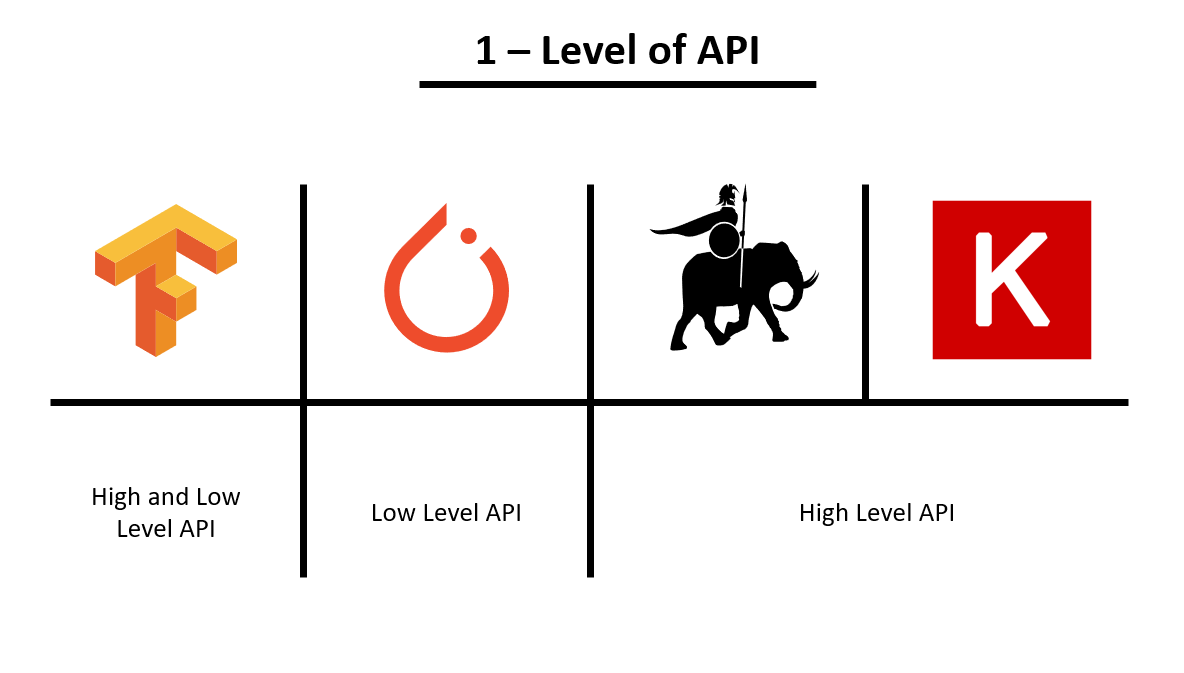

1 – Level API

The Level API of a deep learning framework refers to the level of abstraction it provides to users while building and customizing their neural network models. A “High Level API” offers a more user-friendly and abstracted approach, simplifying model development and reducing the need for intricate details. On the other hand, a “Low Level API” grants users more control and flexibility, allowing them to interact directly with the framework’s components and fine-tune every aspect of their models.

Comparing the frameworks in terms of Level API, TensorFlow stands out by offering both High and Low Level APIs. Its High Level API, Keras, is integrated into TensorFlow as tf.keras and provides an easy-to-use interface, ideal for rapid prototyping and quick implementation of standard models. For more advanced users, TensorFlow’s Low Level API allows fine-grained control over the model architecture and training process, making it suitable for research and complex model development.

Keras, in its essence, is designed to be a High Level API, focusing on simplicity and ease of use. It abstracts many complexities, enabling users to create models with minimal lines of code. PyTorch, on the other hand, leans towards a Low Level API, providing a dynamic computation graph and a more hands-on approach, appealing to researchers and developers seeking in-depth customization.
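To make the distinction concrete, here is a minimal, framework-agnostic sketch in plain Python. It is a toy under stated assumptions: `train_low_level` and `LinearModel` are hypothetical names invented for this illustration and do not belong to Keras, TensorFlow, PyTorch, or HAIBAL. The low-level function spells out the forward pass, the gradients, and the update step, while the high-level class hides the same loop behind `fit` and `predict`.

```python
# Toy illustration only: these names are hypothetical, not a real framework API.

def train_low_level(xs, ys, lr=0.05, epochs=500):
    """Low-level style: the caller writes the forward pass, the gradients,
    and the parameter update by hand."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = (w * x + b) - y          # forward pass and residual
            grad_w += 2 * err * x / n      # d(MSE)/dw
            grad_b += 2 * err / n          # d(MSE)/db
        w -= lr * grad_w                   # explicit gradient-descent step
        b -= lr * grad_b
    return w, b


class LinearModel:
    """High-level style: the same training loop hidden behind fit/predict."""

    def __init__(self, lr=0.05, epochs=500):
        self.lr, self.epochs = lr, epochs
        self.w, self.b = 0.0, 0.0

    def fit(self, xs, ys):
        self.w, self.b = train_low_level(xs, ys, self.lr, self.epochs)
        return self

    def predict(self, x):
        return self.w * x + self.b


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]                  # generated from y = 2x + 1

w, b = train_low_level(xs, ys)             # full control, more code to write
model = LinearModel().fit(xs, ys)          # one call, fewer knobs exposed
```

Both paths learn the same parameters here; the difference is how much of the process the user has to write and is able to change.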

As for HAIBAL, it currently operates as a High Level API, offering an intuitive and LabVIEW-friendly environment for deep learning. However, the development team aims for a more versatile approach, following in TensorFlow’s footsteps by introducing enhancements that will make HAIBAL both a High and Low Level API. This upcoming shift will grant users greater control and open up possibilities for advanced deep learning tasks within the LabVIEW ecosystem.

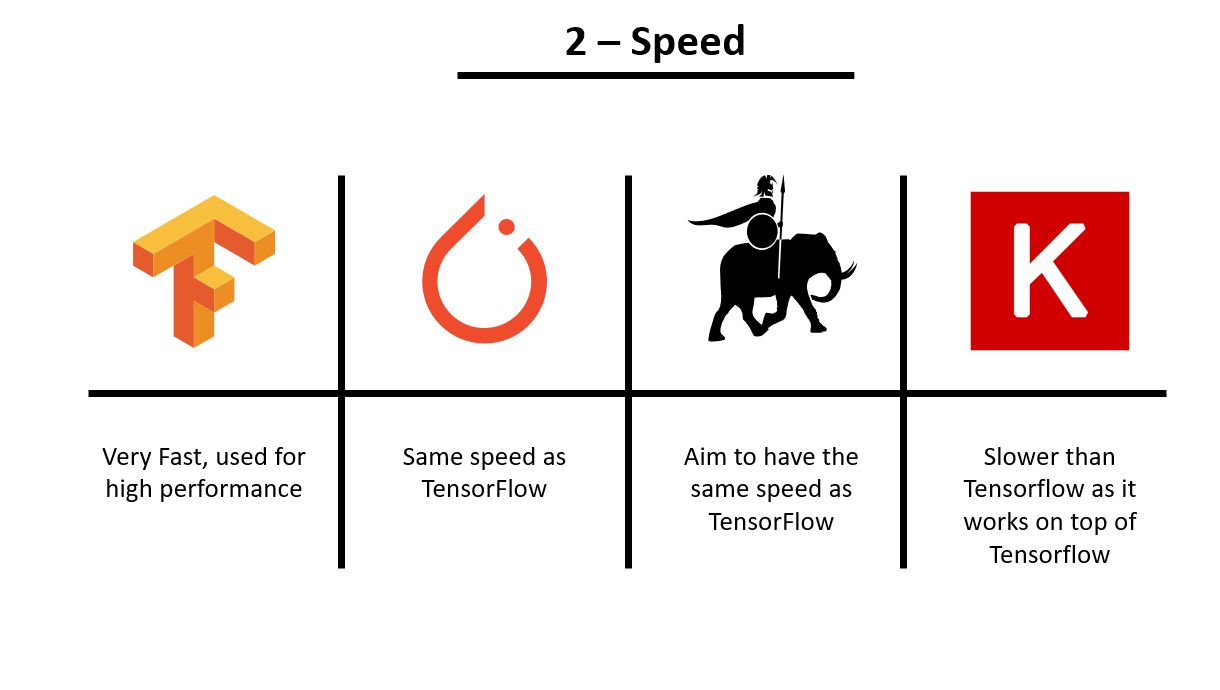

2 – Speed

When it comes to speed, the four frameworks demonstrate varying performance characteristics. TensorFlow has long been lauded for its high-performance capabilities, particularly with the optimization of its Low Level API. TensorFlow’s graph optimization and distribution mechanisms enable efficient utilization of hardware resources, making it a top choice for large-scale deep learning tasks.

Keras, as an interface to TensorFlow and other backends, inherits the speed of the backend it runs on and so benefits from TensorFlow’s performance optimizations. PyTorch, while highly efficient and fast, has historically faced some performance challenges compared to TensorFlow due to its dynamic computation graph.

HAIBAL, on the other hand, positions itself as a rapid and performant deep learning toolkit on the LabVIEW platform. Although currently demonstrating good speed, its development team aspires to match TensorFlow’s velocity by leveraging advancements and optimizations, making it a compelling contender in the world of high-speed deep learning frameworks.

As HAIBAL aims to achieve performance parity with TensorFlow, users can anticipate even greater acceleration in model training and inference within the LabVIEW environment, making HAIBAL an increasingly viable option for time-critical and resource-intensive applications.

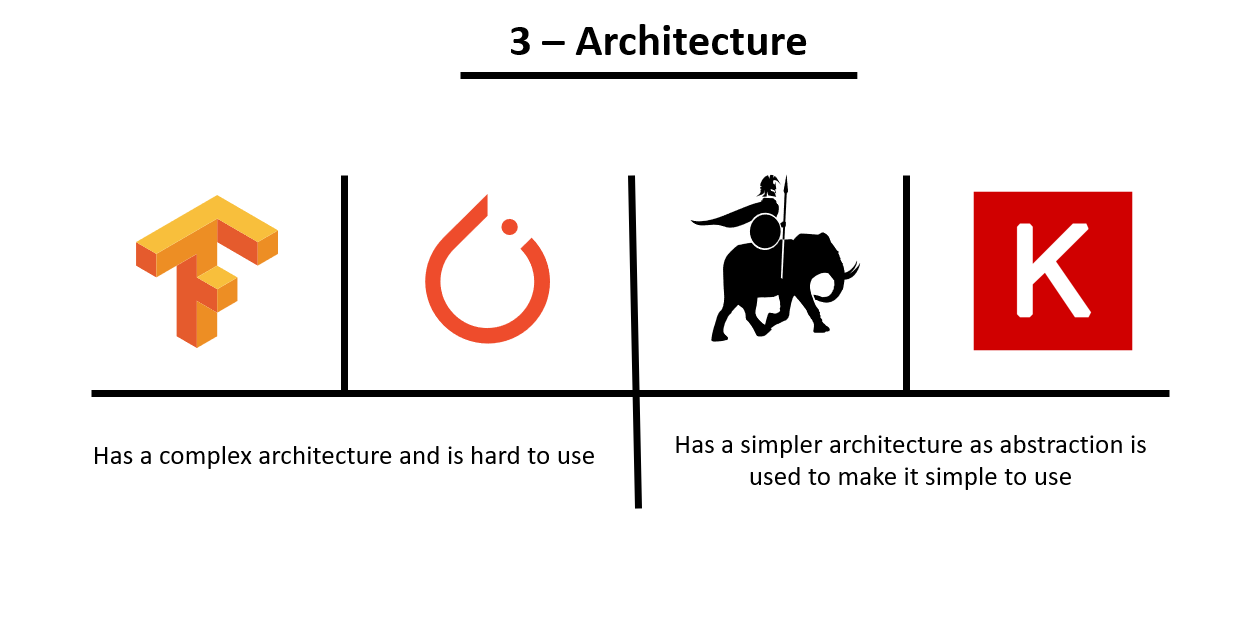

3 – Architecture

In examining the architecture of the deep learning frameworks, we find variations that influence their ease of use and flexibility. TensorFlow’s static computation graph (the default execution model in TensorFlow 1.x; TensorFlow 2.x runs eagerly by default and builds graphs through tf.function) can add complexity during model construction, because the structure must be defined before any data flows through it. PyTorch’s dynamic computation graph offers flexibility, but it requires users to manage the model’s structure at runtime, which can introduce intricacies of its own during development.
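The difference between a static and a dynamic computation graph can be sketched in a few lines of plain Python. This is a toy model of the two ideas, not the internals of TensorFlow or PyTorch; `Node`, `placeholder`, `run`, and `dynamic_forward` are hypothetical names introduced only for this illustration.

```python
# Toy illustration only: a hand-rolled expression graph, not a framework API.

class Node:
    """A node in a tiny static expression graph."""

    def __init__(self, op, inputs=(), name=None):
        self.op, self.inputs, self.name = op, inputs, name

    def __add__(self, other):
        return Node("add", (self, other))

    def __mul__(self, other):
        return Node("mul", (self, other))


def placeholder(name):
    """Declare an input whose value is supplied later, at run time."""
    return Node("input", name=name)


def run(node, feed):
    """Evaluate a previously built graph for concrete input values."""
    if node.op == "input":
        return feed[node.name]
    left, right = (run(i, feed) for i in node.inputs)
    return left + right if node.op == "add" else left * right


# Define-then-run: the structure is fixed before any data is seen.
x = placeholder("x")
w = placeholder("w")
graph = x * w + x                          # builds nodes, computes nothing yet
static_result = run(graph, {"x": 3.0, "w": 2.0})


# Define-by-run: the computation is whatever Python executes for this call,
# so ordinary control flow can change its shape from one input to the next.
def dynamic_forward(x, w, steps):
    out = x
    for _ in range(steps):                 # depth chosen at runtime
        out = out * w + x
    return out


dynamic_result = dynamic_forward(3.0, 2.0, steps=1)
```

In the static style the graph object can be inspected or optimized before it runs, at the cost of a separate build step. In the dynamic style the model is ordinary code, which is easier to vary but leaves the structure implicit in the control flow.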

In contrast, HAIBAL showcases a remarkably user-friendly approach by capitalizing on LabVIEW’s graphical flowchart nature. The visual design enabled by LabVIEW’s G language ensures an intuitive and streamlined model creation process, making HAIBAL an accessible choice, especially for LabVIEW users. Combining this intuitive approach with HAIBAL’s High-Level API further simplifies the development experience, allowing users to effortlessly construct deep learning models without the burden of intricate coding.

Thus, HAIBAL’s architecture shines as an efficient and time-saving option, granting users a seamless experience while embracing the power of deep learning within the LabVIEW ecosystem.

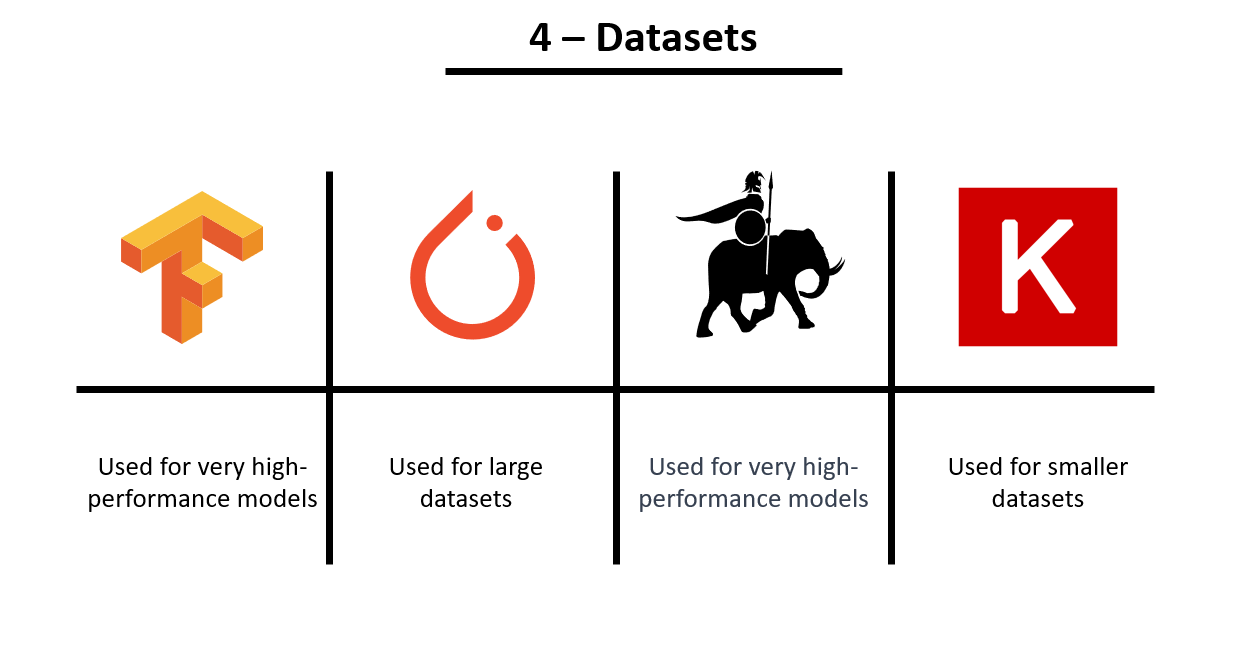

4 – Datasets

The choice of deep learning framework can significantly impact how datasets are handled. Each framework has its strengths when it comes to dataset size capabilities.

PyTorch has garnered a reputation for its suitability in handling large datasets. Its dynamic computation graph and efficient memory management make it well-suited for processing extensive and diverse datasets, catering to the needs of researchers and practitioners dealing with large-scale projects.
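A common way to keep memory use bounded on large datasets, whatever the framework, is to stream samples in mini-batches instead of loading everything at once. The sketch below shows the idea in plain Python; it is not PyTorch’s data-loading API, and `batches` and `sample_stream` are hypothetical helpers written for this example.

```python
from itertools import islice


def batches(samples, batch_size):
    """Lazily yield lists of at most batch_size items from any iterable."""
    it = iter(samples)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:                      # source exhausted
            return
        yield batch


def sample_stream(n):
    """Hypothetical data source: produces (x, y) pairs one at a time."""
    for i in range(n):
        yield (float(i), 2.0 * i + 1.0)


# Only one batch is held in memory at any moment.
batch_sizes = [len(b) for b in batches(sample_stream(10), batch_size=4)]
```

Because both the source and the batcher are generators, the full dataset never has to fit in memory; the last batch is simply shorter when the sample count is not a multiple of the batch size.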

TensorFlow and HAIBAL excel in handling models with high-performance requirements. TensorFlow’s graph optimization and distribution mechanisms enable efficient computation on powerful hardware, making it a preferred choice for performance-critical tasks. Similarly, HAIBAL’s focus on speed and performance empowers users to execute deep learning models with accelerated efficiency within the LabVIEW environment.

On the other hand, Keras is known for its ease of use and rapid prototyping capabilities, making it an excellent fit for smaller datasets and quick experimentation. It allows users to create and test models with minimal effort, catering to projects where large-scale datasets are not the primary focus.

As users consider their dataset size requirements, PyTorch becomes an attractive choice for large-scale projects, TensorFlow and HAIBAL shine in high-performance scenarios, while Keras proves efficient for smaller datasets and swift model iteration. The right framework selection can significantly impact data processing efficiency, ultimately leading to successful deep learning projects.

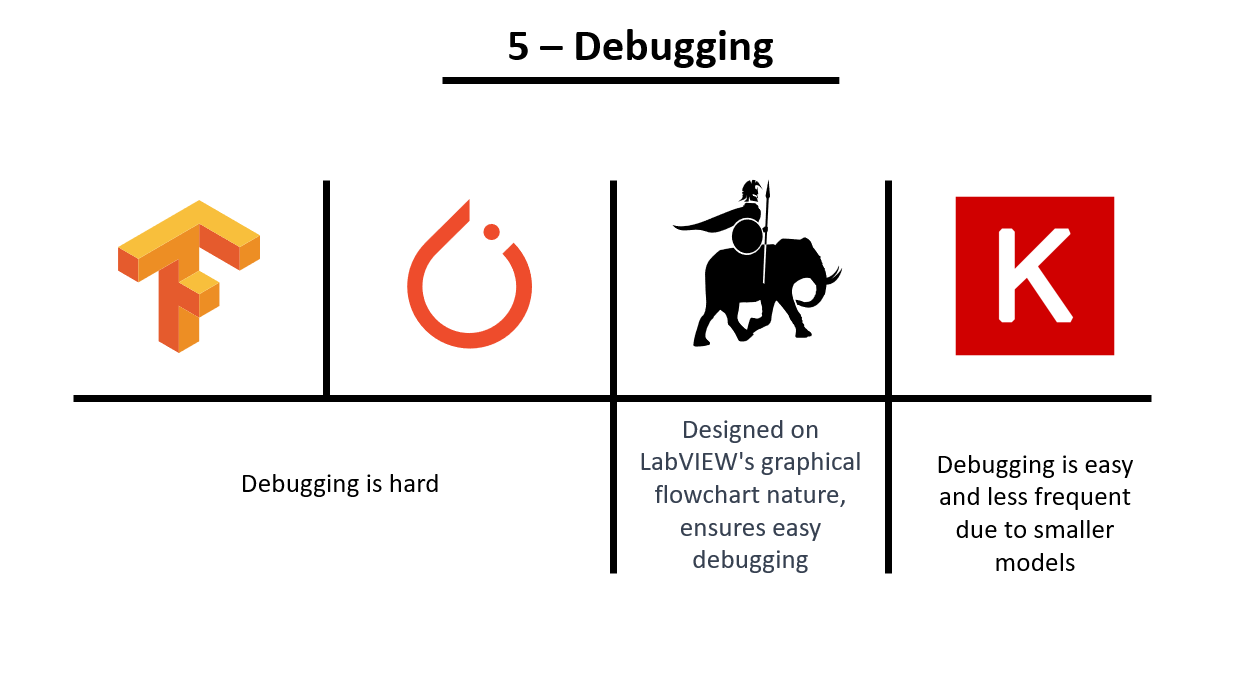

5 – Debugging

When it comes to debugging deep learning models, TensorFlow and PyTorch can present challenges due to their complex nature and intricate codebase. Debugging in these frameworks may require substantial effort and expertise, especially when dealing with large-scale projects and intricate models.
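In text-based frameworks, a typical debugging step is to record the output of every layer and look for the first one that goes wrong, for example the first one that produces a NaN. The following is a minimal sketch of that technique in plain Python, assuming a model that can be expressed as a list of named functions; `trace_forward`, `first_non_finite`, and the layer list are hypothetical and not part of any framework.

```python
import math


def trace_forward(layers, x):
    """Run x through each (name, fn) pair and record every intermediate value."""
    trace = []
    for name, fn in layers:
        x = fn(x)
        trace.append((name, x))
    return trace


def first_non_finite(trace):
    """Return the name of the first layer whose output is NaN or infinite."""
    for name, value in trace:
        if not math.isfinite(value):
            return name
    return None


# Hypothetical four-step model; the "log" step is undefined for inputs <= 0.
layers = [
    ("scale", lambda v: v * 0.5),
    ("shift", lambda v: v - 1.0),
    ("log", lambda v: math.log(v) if v > 0 else float("nan")),
    ("square", lambda v: v * v),
]

trace = trace_forward(layers, 2.0)
culprit = first_non_finite(trace)          # name of the first failing layer
```

The trace plays the role that probes on a wire play in a graphical environment: it makes the intermediate values visible so the faulty step can be located instead of guessed.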

In stark contrast, HAIBAL’s unique advantage lies in its seamless debugging process, facilitated by the LabVIEW environment’s graphical flowchart language. The visual representation of the model’s architecture through flowcharts simplifies the identification and resolution of issues, providing a more intuitive debugging experience. Users familiar with LabVIEW can leverage its user-friendly interface to effortlessly trace and rectify errors, significantly reducing the time and complexity associated with debugging.

6 – Ease of Development

The ease of development is a crucial aspect when selecting a deep learning framework, as it directly impacts productivity and the learning curve for users. Each framework offers a distinct development experience based on its API design and underlying architecture.

TensorFlow and PyTorch, with their comprehensive and technical nature, can prove challenging for beginners and those with limited experience in deep learning. Users may need to invest more time in understanding the intricate details of these frameworks, making the development process less straightforward, particularly for newcomers.

Keras, known for its high-level abstraction, streamlines the development process, allowing users to quickly build and experiment with neural network models. Its intuitive API design and user-friendly syntax reduce the learning curve, making it an excellent choice for developers seeking a straightforward and accessible approach to deep learning.

HAIBAL’s ease of development stands out. As a high-level API built within the LabVIEW ecosystem, HAIBAL capitalizes on LabVIEW’s graphical flowchart nature, enabling an intuitive and efficient development experience. Users, regardless of their deep learning expertise, can quickly grasp the framework’s functionality and construct complex models with ease. This simplicity further boosts productivity, letting users focus on their research or application domain rather than on the complexities of the framework.

7 – Integration into complete systems

The integration of deep learning models into complex systems is a critical consideration, as it directly impacts the efficiency, cost, and ease of implementation. From the perspective of a LabVIEW architect, the choice of deep learning framework plays a vital role in this regard.

When using frameworks like TensorFlow, PyTorch, or Keras, which are written for Python, a text-based language, challenges may arise when integrating deep learning models into a complete system. Python, while powerful, may be difficult for team members who are not trained in text-based programming, which raises the qualifications required and steepens the learning curve. Consequently, larger teams and additional resources may be necessary, leading to increased costs during integration.

In contrast, LabVIEW’s graphical essence proves to be highly efficient, especially when integrating inferences from deep learning models into complex systems. The graphical nature of LabVIEW is more intuitive, simpler, and faster to learn compared to traditional syntactic languages like Python. This inherent efficiency significantly reduces the complexities of integration and empowers LabVIEW architects to seamlessly incorporate deep learning models into any intricate system.

By leveraging LabVIEW’s graphical flowchart language and HAIBAL’s High-Level API, developers can swiftly construct, integrate, and deploy deep learning models without the need for extensive coding expertise. This streamlined integration process drastically reduces development costs and minimizes potential hurdles, enabling LabVIEW users to unlock the full potential of deep learning within their complex systems.

In conclusion, the compatibility of HAIBAL with LabVIEW’s graphical essence ensures a harmonious and cost-effective integration of deep learning models into complete systems. This unique advantage positions HAIBAL as an ideal choice for LabVIEW architects seeking to leverage the power of deep learning without incurring unnecessary complexities or excessive costs.

Conclusion

In the ever-evolving landscape of deep learning, Keras, TensorFlow, PyTorch, and HAIBAL have each carved out their unique niches, offering distinct advantages and capabilities. However, it is essential not to underestimate the revolutionary technology of LabVIEW, which was developed to accelerate project development and boasts significant advantages over traditional syntactic languages. LabVIEW’s influence on the American industry over the past two decades has been profound, and the recent acquisition of National Instruments by Emerson signals a potential acceleration of this phenomenon.

HAIBAL’s timely emergence into this ever-changing world showcases its potential to revolutionize LabVIEW’s capabilities further. Leveraging the power of LabVIEW’s graphical flowchart nature, HAIBAL complements the platform, making debugging effortless and enhancing the development process. As an academic tool, HAIBAL shines, facilitating a deeper understanding of deep learning theory. The interactivity of the G language sets it apart, enabling researchers and engineers to stream changes to model parameters effortlessly. This interactivity fosters rapid prototyping, empowering academia to explore novel ideas more effectively.

In conclusion, HAIBAL’s integration with LabVIEW and its continuous development are testaments to its potential in the field of deep learning. As LabVIEW accelerates and propels industry advancements, HAIBAL serves as a powerful and timely addition to the LabVIEW ecosystem, catering to researchers, engineers, and academia with its ease of use, interactivity, and commitment to continuous improvement. In the dynamic and transformative realm of deep learning, HAIBAL paves the way for innovative strides in the LabVIEW domain and beyond.

HAIBAL, the LabVIEW deep learning toolkit, is now available on the GIM platform (Graiphic software management platform). Download it now!