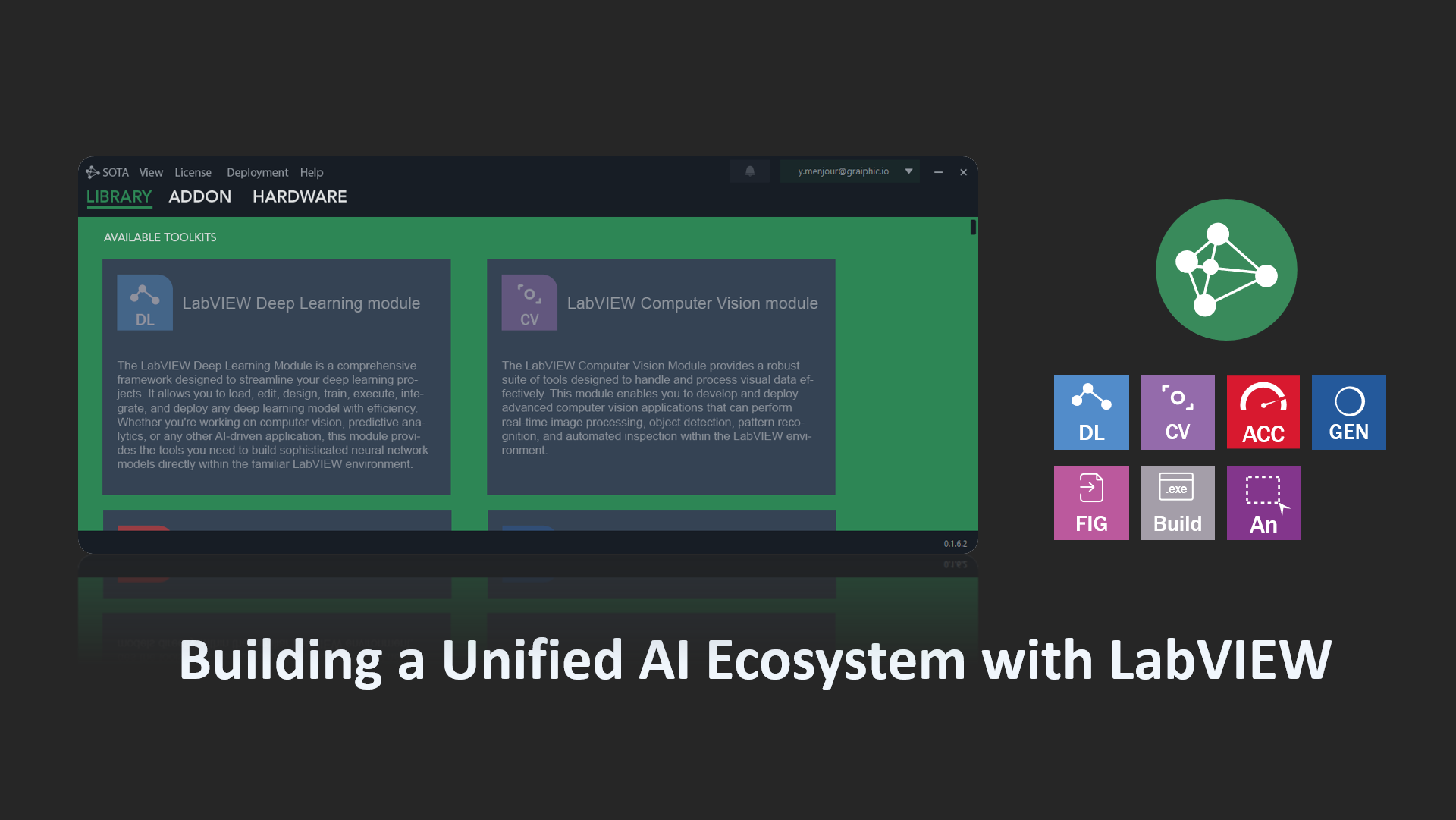

Welcome to SOTA: The Next Generation of AI Development Toolkits

As we bid farewell to our beloved toolkits HAIBAL, TIGR, and PERRINE, and to the GIM installation solution, we are excited to introduce the future of AI development: SOTA, a nod to "state of the art." At Graiphic, we are always thrilled to share news like this, which strengthens the development ecosystem for deep learning, automation, and embedded systems.

Here’s a glimpse of what awaits you in the coming weeks with our new deep learning module:

- Full compatibility with existing frameworks: Keras, TensorFlow, PyTorch, ONNX.

- Impressive performance: The new LabVIEW deep learning tools will run 50 times faster than the previous generation (HAIBAL 1.0) and 20% faster than PyTorch.

- Extended hardware support: CUDA and TensorRT for NVIDIA, ROCm for AMD, and oneAPI for Intel.

- Maximum modularity: Define your own layers and loss functions.

- Graph neural networks: Complete and advanced integration.

- Annotation tools: An annotator as efficient as Roboflow, integrated into our software suite.

- Model visualization: Utilizing Netron for graphical model summaries.

- Generative AI: A complete library for execution, fine-tuning, and RAG setup for the Llama 3, Phi-3, and Florence-2 models.

- Reinforcement Learning: A wide range of algorithms, including DPG, DQN, DDQN, DDPG, Dual DQN, Dual DDQN, PPO, A2C, A3C, SAC, and TD3, with more to follow.

With the arrival of SOTA, you can enjoy one-click installation, with configuration guaranteed to take less than 3 minutes.

Server Infrastructure and Cloud Integration

Our new server infrastructure for professional online license management is here! We are also introducing the Graiphic Cloud, which enables sharing of models and environments for Reinforcement Learning. Imagine installing and testing games such as Mario, Doom, or Atari titles in under 2 minutes, directly within LabVIEW.

Advanced Annotation Tool

Simultaneously, we are launching a comprehensive annotation tool for computer vision, akin to Roboflow. Integrated within the SOTA environment, this tool will support image data processing and the fine-tuning of computer vision models for object classification or segmentation. In our latest tests, YOLOv8 achieved an inference time of 16 ms in LabVIEW on a local PC.

Transition from HAIBAL, TIGR, PERRINE, and GIM

SOTA is a comprehensive suite of LabVIEW tools and toolkits that will replace our existing solutions. It includes:

- A deep learning toolkit to replace HAIBAL,

- A computer vision toolkit to replace TIGR,

- A GPU computation toolkit to replace PERRINE.

These LabVIEW development toolkits will be accompanied by innovative tools such as FIG (File Importator and Generator) and the Annotator, which will let you annotate images or import complete image datasets for training computer vision models. This integrated, Roboflow-like tool will be part of our SOTA development environment. More tools and toolkits will follow later this year.

Goodbye GIM, Hello SOTA!

We also say goodbye to GIM, which has served us well, and welcome SOTA, a new tool reminiscent of Steam that offers easy one-click download and installation of all our tools and toolkits. You can also download examples with models, as well as reinforcement learning environments with example algorithms for training models!

Future Developments

Of course, we have a plan for the future! New developments will include:

- A Robotics Module for LabVIEW, inspired by ROS (Robot Operating System), allowing robotics enthusiasts to control any type of robot (industrial robots, drones, etc.).

- An Industrial Module, which will be a collection of acquisition libraries for LabVIEW, enabling easy integration of most precision hardware on the market (this project is still in the planning stages and will be revisited later).

- A tool for SLMs (Small Language Models) and LLMs (Large Language Models), inspired by LangChain, is also coming soon. It will let users easily import, edit, fine-tune, execute, and integrate any language model within LabVIEW.

In summary, a lot of work lies ahead, and we are targeting a release by October.