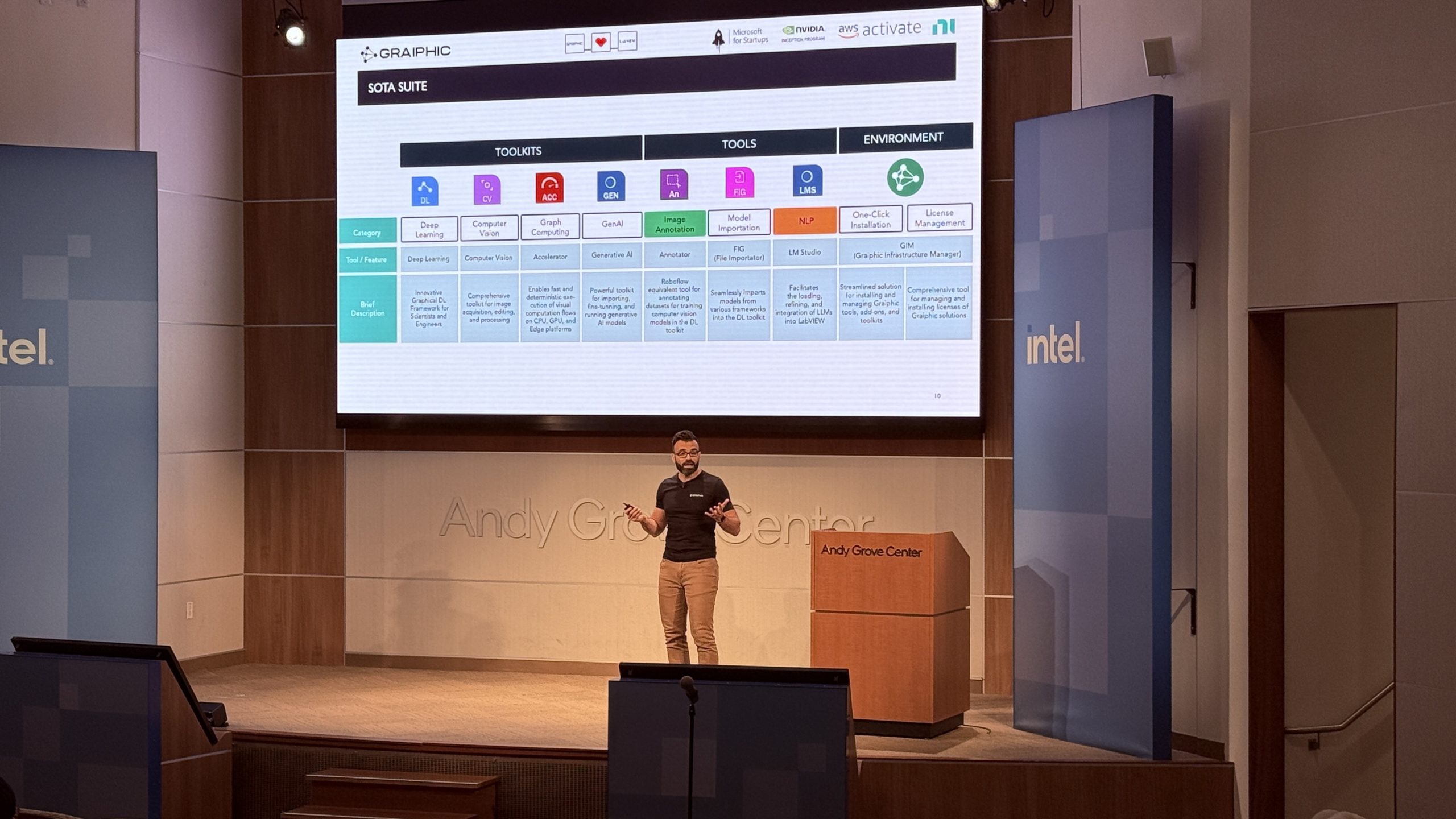

Since we have recently decided to communicate better about the HAIBAL project by publishing a weekly status report, I will start this first progress report with an overview of where the project stands.

The HAIBAL project, a deep learning library for LabVIEW, started in July 2021, almost a year ago. Due to financial constraints, it had to be paused last November and December before resuming. In total, we have now been working on this magnificent challenge for 10 months.

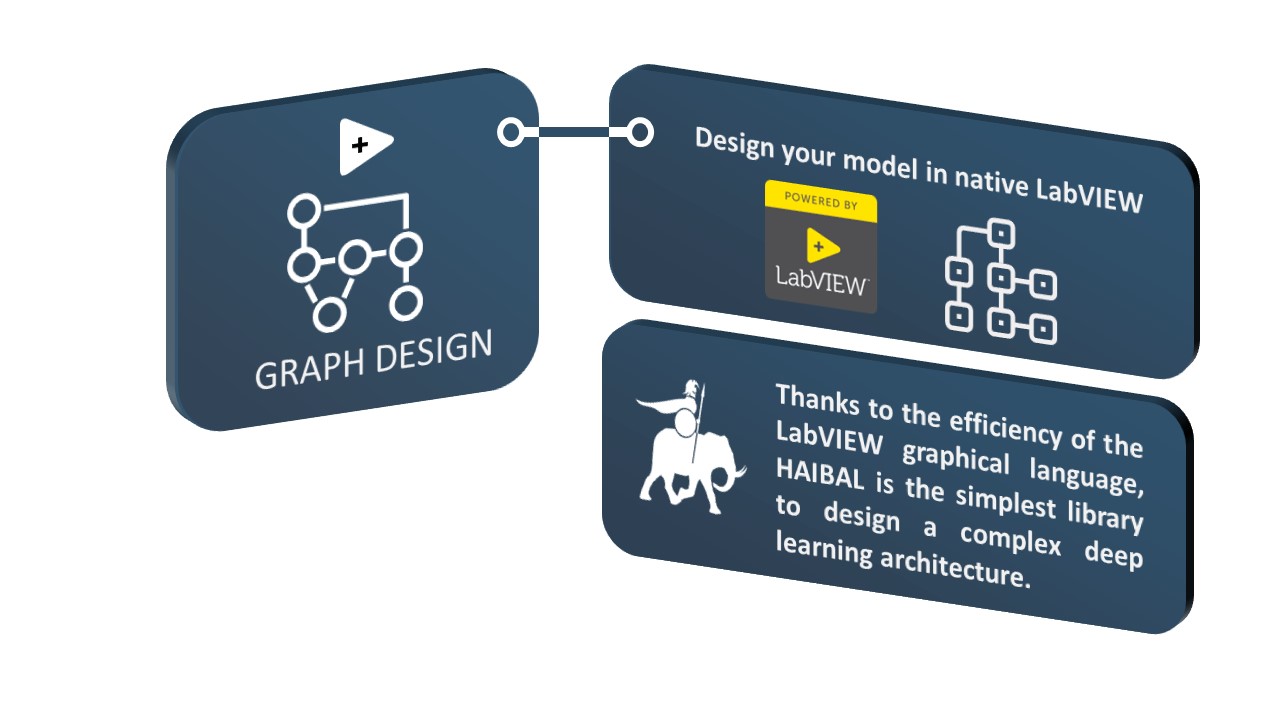

OBJECT-ORIENTED ARCHITECTURE DESIGN

The first decisive step for us was to design an object-oriented architecture for the whole project.

A HAIBAL model is an object: it contains its graph, its weights, its loss functions and all its parameters.

To use the model, you simply execute its forward method.

Similarly, to train the model, we also use its loss and backward methods.
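HAIBAL itself is written in LabVIEW, so the following is only a Python analogy of this object design, built on our own assumptions. The hypothetical `Model` class bundles a single dense layer (its "graph"), its weights and a squared-error loss, and exposes `forward`, `loss` and `backward` methods; here `backward` also applies one plain SGD step. None of these names or signatures are HAIBAL's actual API.

```python
import random


class Model:
    """Hypothetical stand-in for a model object: graph (one dense layer),
    weights and loss function all live inside the same object."""

    def __init__(self, n_inputs, seed=0):
        rng = random.Random(seed)
        self.weights = [rng.uniform(-0.1, 0.1) for _ in range(n_inputs)]
        self.bias = 0.0
        self._last_x = None
        self._last_y = None

    def forward(self, x):
        # Inference: weighted sum of the inputs plus the bias.
        self._last_x = x
        self._last_y = sum(w * xi for w, xi in zip(self.weights, x)) + self.bias
        return self._last_y

    def loss(self, target):
        # Squared error of the most recent forward pass (single sample).
        return (self._last_y - target) ** 2

    def backward(self, target, lr=0.05):
        # d(y - t)^2 / dw_i = 2 (y - t) x_i; apply one SGD step in place.
        grad = 2.0 * (self._last_y - target)
        self.weights = [w - lr * grad * xi
                        for w, xi in zip(self.weights, self._last_x)]
        self.bias -= lr * grad


# Usage: learn y = 2*x0 - x1 + 1 with the forward / loss / backward cycle.
points = [[-1, -1], [-1, 1], [1, -1], [1, 1], [0, 0.5], [0.5, 0]]
data = [(x, 2 * x[0] - x[1] + 1) for x in points]

model = Model(n_inputs=2)
for epoch in range(200):
    for x, t in data:
        model.forward(x)
        current_loss = model.loss(t)
        model.backward(t)
print("weights:", model.weights, "bias:", model.bias)
```

Keeping the loss inside the model mirrors the description above: training code only needs to call the three methods in order.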

STATUS OF THE LAYERS DEVELOPED IN NATIVE LABVIEW

- 16 activation functions (ELU, Exponential, GELU, HardSigmoid, LeakyReLU, Linear, PRELU, ReLU, SELU, Sigmoid, SoftMax, SoftPlus, SoftSign, Swish, TanH, ThresholdedReLU)

- 84 functional layers (Dense, Conv, MaxPool, RNN, Dropout…)

- 14 loss functions (BinaryCrossentropy, BinaryCrossentropyWithLogits, Crossentropy, CrossentropyWithLogits, Hinge, Huber, KLDivergence, LogCosH, MeanAbsoluteError, MeanAbsolutePercentage, MeanSquare, MeanSquareLog, Poisson, SquaredHinge)

- 15 initialization functions (Constant, GlorotNormal, GlorotUniform, HeNormal, HeUniform, Identity, LeCunNormal, LeCunUniform, Ones, Orthogonal, RandomNormal, RandomUniform, TruncatedNormal, VarianceScaling, Zeros)

- 7 optimizers (Adagrad, Adam, Inertia, Nadam, Nesterov, RMSProp, SGD)
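For readers coming from other frameworks, here are plain-Python sketches of one item from several of the lists above (GELU, Huber, GlorotUniform, Adam), following their standard textbook definitions. They only illustrate what these functions compute; they are not HAIBAL's LabVIEW implementations, and the function names and default values are our own assumptions.

```python
import math
import random


def gelu(x):
    # Exact GELU: x * Phi(x), where Phi is the standard normal CDF.
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def huber(y_true, y_pred, delta=1.0):
    # Quadratic for errors up to delta, linear beyond; averaged over samples.
    total = 0.0
    for t, p in zip(y_true, y_pred):
        err = abs(t - p)
        if err <= delta:
            total += 0.5 * err ** 2
        else:
            total += delta * (err - 0.5 * delta)
    return total / len(y_true)


def glorot_uniform(fan_in, fan_out, seed=None):
    # Weights drawn from U(-limit, limit), limit = sqrt(6 / (fan_in + fan_out)).
    rng = random.Random(seed)
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_out)]
            for _ in range(fan_in)]


class Adam:
    """One Adam update per call on a flat list of scalar parameters."""

    def __init__(self, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m, self.v, self.t = None, None, 0

    def step(self, params, grads):
        if self.m is None:
            # First and second moment estimates start at zero.
            self.m = [0.0] * len(params)
            self.v = [0.0] * len(params)
        self.t += 1
        updated = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            # Bias correction compensates for the zero initialisation.
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            updated.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return updated
```

On its first step, Adam moves each parameter by roughly `lr` against the sign of its gradient, whatever the gradient's magnitude, which is one reason it is a common default.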

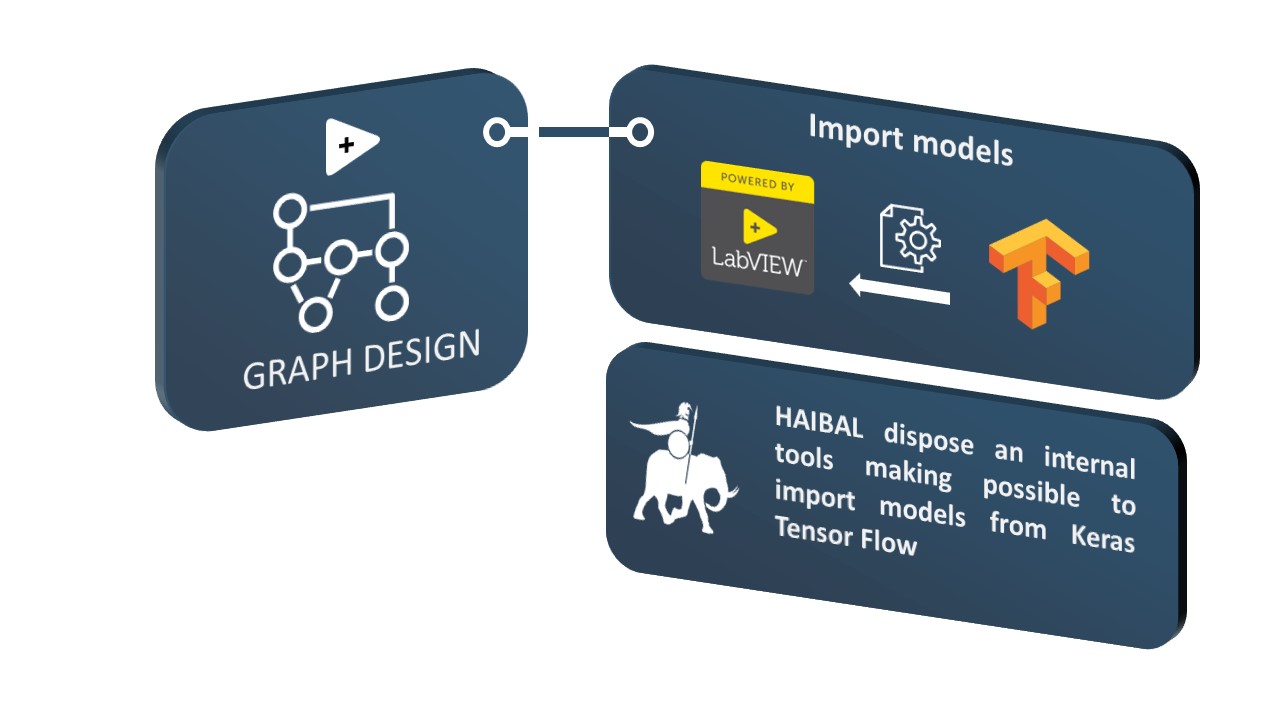

COMPATIBILITY WITH OTHER DEEP LEARNING LIBRARIES

Last January, we developed a converter that turns Keras HDF5 save files into HAIBAL objects, which allows us to easily import any Keras model. We plan to do the same for Meta's PyTorch in the coming months so that HAIBAL is fully compatible with the main existing deep learning libraries.
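As a rough sketch of the first half of such a converter, assuming the Keras 2.x HDF5 layout: the file root carries a `model_config` attribute holding the architecture as JSON (readable in Python with h5py via `f.attrs["model_config"]`), while the weights sit in a separate `model_weights` group. The sketch below starts from that JSON string so it stays self-contained, and only maps layer types; the `KERAS_TO_HAIBAL` table and the target layer names are hypothetical, not HAIBAL's real naming, and the sample config is heavily abbreviated.

```python
import json

# Hypothetical mapping from Keras layer classes to HAIBAL layer names.
KERAS_TO_HAIBAL = {
    "Dense": "Dense",
    "Conv2D": "Conv2D",
    "MaxPooling2D": "MaxPool2D",
    "Dropout": "Dropout",
    "Flatten": "Flatten",
}


def convert_config(model_config_json):
    """Turn a Keras Sequential `model_config` JSON string into a list of
    (haibal_layer_name, keras_layer_settings) pairs."""
    config = json.loads(model_config_json)
    if config.get("class_name") != "Sequential":
        raise ValueError("this sketch only handles Sequential models")
    layers = []
    for layer in config["config"]["layers"]:
        cls = layer["class_name"]
        if cls == "InputLayer":
            continue  # only describes the input shape; nothing to convert
        if cls not in KERAS_TO_HAIBAL:
            raise NotImplementedError(f"no mapping for Keras layer {cls}")
        layers.append((KERAS_TO_HAIBAL[cls], layer["config"]))
    return layers


# Abbreviated example of a Keras 2.x Sequential model_config.
sample = json.dumps({
    "class_name": "Sequential",
    "config": {
        "name": "sequential",
        "layers": [
            {"class_name": "InputLayer",
             "config": {"name": "input_1", "batch_input_shape": [None, 784]}},
            {"class_name": "Dense",
             "config": {"name": "dense", "units": 64, "activation": "relu"}},
            {"class_name": "Dropout",
             "config": {"name": "dropout", "rate": 0.2}},
            {"class_name": "Dense",
             "config": {"name": "dense_1", "units": 10, "activation": "softmax"}},
        ],
    },
})

for name, settings in convert_config(sample):
    print(name, settings.get("units"), settings.get("activation"))
```

A complete converter would then copy each layer's arrays from `model_weights` into the matching HAIBAL object; failing loudly on unmapped layers keeps an import from silently producing a different network.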

MEMORY MANAGER

This month we finished our memory manager, the system that manages memory on each target platform. It lets us optimize how memory space is allocated before operations run. The memory manager supports two distinct strategies: the first minimizes memory usage but can slow down execution, while the second allocates more memory in exchange for faster execution.
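To make the trade-off concrete, here is a minimal Python sketch of two planning strategies for a chain of operations. It is an illustration under our own assumptions, not HAIBAL's actual memory manager: `Op`, `plan_speed` and `plan_min_memory` are hypothetical names, each op produces one output tensor, sizes are in bytes, and a tensor may be released as soon as its last reader has run.

```python
from dataclasses import dataclass


@dataclass
class Op:
    name: str       # operation producing one output tensor
    out_bytes: int  # size of that output tensor
    last_use: int   # index of the last op that reads the output


def plan_speed(ops):
    """Reserve a dedicated region for every output before execution starts.
    Nothing is allocated at run time, but all buffers stay alive for the
    whole run, so the footprint is the sum of all output sizes."""
    offsets, total = {}, 0
    for op in ops:
        offsets[op.name] = total
        total += op.out_bytes
    return offsets, total


def plan_min_memory(ops):
    """Allocate each output just before its op runs and free it right after
    its last reader. Returns the peak number of live bytes; the price is an
    allocation and a release at every step of execution."""
    live, current, peak = {}, 0, 0
    for i, op in enumerate(ops):
        live[op.name] = op
        current += op.out_bytes
        peak = max(peak, current)
        # Release every tensor whose last reader has now run.
        for name in [n for n, t in live.items() if t.last_use <= i]:
            current -= live.pop(name).out_bytes
    return peak


chain = [
    Op("conv", 400, last_use=1),
    Op("relu", 400, last_use=2),
    Op("pool", 100, last_use=3),
    Op("dense", 10, last_use=3),  # final output; released when the run ends
]
offsets, total = plan_speed(chain)
print("speed plan:", total, "bytes, offsets", offsets)
print("minimal plan peak:", plan_min_memory(chain), "bytes")
```

For this toy chain, the speed plan reserves 910 bytes once, while the minimal plan peaks at 800 bytes but allocates and frees on every step. A common middle ground is to reuse freed regions inside a single preallocated arena.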