A New Era in Open-Source: Qwen 2.5 VL Instruct 7B’s Rapid Rise

Open-source large language models (LLMs) are evolving at a remarkable pace, and Chinese models such as Qwen are among the latest to gain attention. Developed by Alibaba Cloud’s research team, Qwen 2.5 VL Instruct 7B is a 7-billion-parameter multimodal model that processes both text and images and offers robust instruction-following. Many researchers already see Qwen as a strong contender against major Western models such as Meta’s LLaMA or those from Mistral AI.

Qwen 2.5 VL Instruct 7B: Origins and Core Advantages

Qwen 2.5 VL Instruct 7B is part of Alibaba’s broader Qwen initiative, aiming to deliver powerful yet accessible multimodal solutions. Key highlights include:

Advanced Multilingual Support

Beyond European languages, Qwen accommodates Japanese, Korean, Arabic, and Vietnamese. This versatility makes it highly suitable for diverse global applications, including multilingual document translation and cross-lingual knowledge extraction.

Efficient Instruction-Following

Qwen 2.5 VL Instruct 7B stands out for its capacity to follow detailed instructions. With minimal prompt engineering, it can align responses to specific formats, answer structured questions, and even generate step-by-step explanations.

Robust Training Paradigms

Alibaba Cloud’s research team employs a sophisticated training regimen that incorporates massive datasets of textual and visual inputs. This approach bolsters the model’s adaptability to real-world tasks, from chat-based assistance to image analysis.

These technical achievements have positioned Qwen 2.5 VL Instruct 7B among the more capable open-source multimodal models available, reportedly matching or exceeding comparable Western models on several multimodal benchmarks.
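Instruction-following of the kind described above is easiest to rely on when the prompt states the expected format explicitly and the reply is checked before it is used. The following is a minimal Python sketch of that pattern, not part of Qwen or the GenAI Toolkit: the key names, `build_prompt`, and `parse_reply` are hypothetical, and the actual model call is left out because this article does not describe a text API for it.

```python
import json

# Hypothetical keys for an inspection-style answer; choose whatever your task needs.
REQUIRED_KEYS = {"defect_found", "description", "confidence"}


def build_prompt(question: str) -> str:
    """Wrap a question in explicit formatting instructions."""
    keys = ", ".join(sorted(REQUIRED_KEYS))
    return (
        f"{question}\n"
        "Answer with a single JSON object and nothing else.\n"
        f"Use exactly these keys: {keys}."
    )


def parse_reply(reply: str) -> dict:
    """Parse a model reply and check that it matches the requested format."""
    # json.loads raises json.JSONDecodeError (a ValueError subclass) on non-JSON text.
    data = json.loads(reply)
    if not isinstance(data, dict):
        raise ValueError("reply is not a JSON object")
    missing = REQUIRED_KEYS - data.keys()
    if missing:
        raise ValueError(f"reply is missing keys: {sorted(missing)}")
    return data


if __name__ == "__main__":
    print(build_prompt("Does the attached image show a surface defect?"))
    # Hand-written stand-in for a model reply, not real model output.
    sample = '{"defect_found": true, "description": "scratch near edge", "confidence": 0.8}'
    print(parse_reply(sample))
```

Validating the reply keeps a malformed answer from propagating into downstream automation; a failed check can simply trigger a retry with the same prompt.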

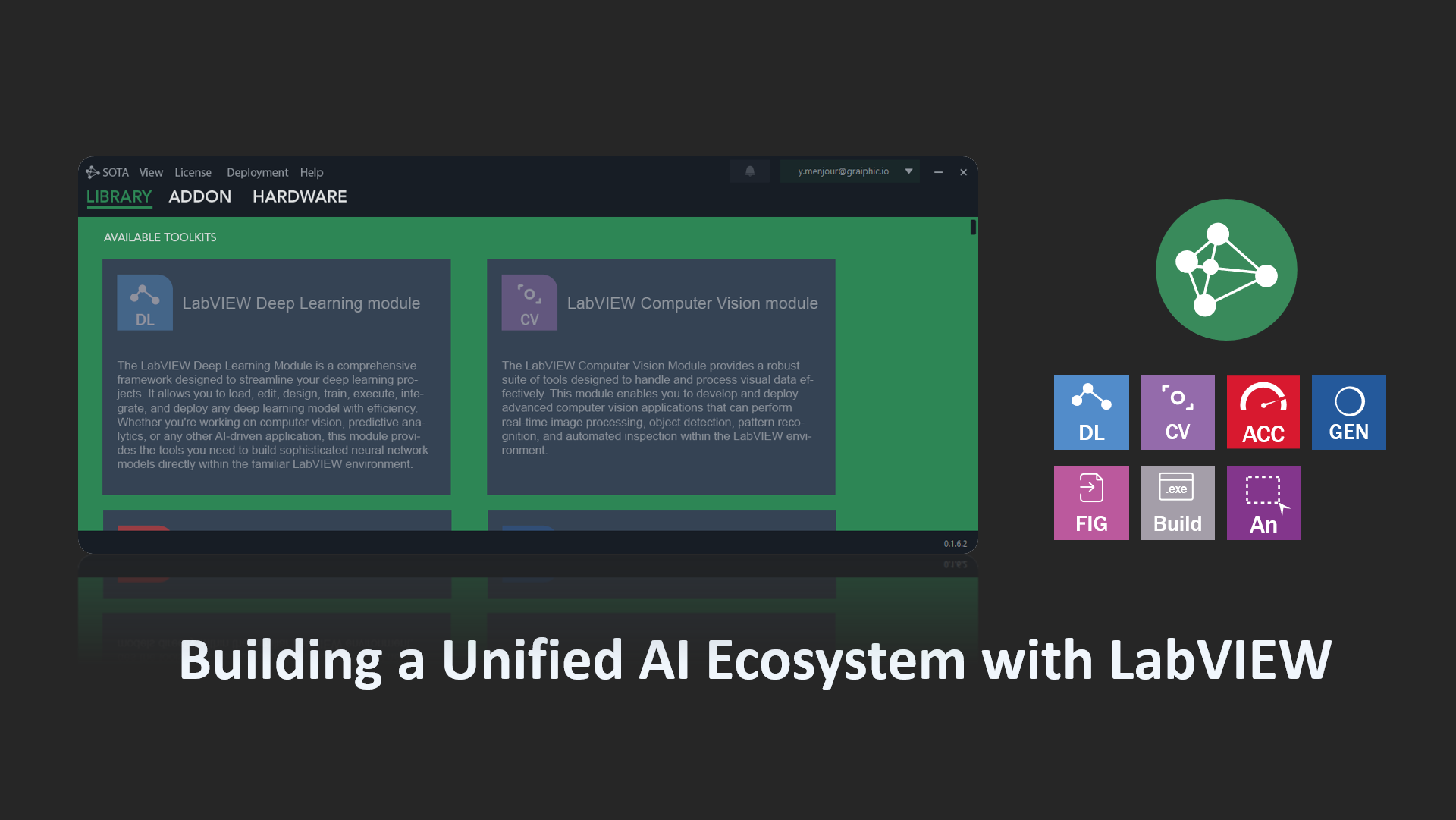

SOTA and the GenAI Toolkit: Integrating Qwen into LabVIEW

Graiphic has focused on bridging AI with industrial engineering by creating solutions like the GenAI Toolkit, which allows LabVIEW developers to seamlessly adopt GGUF LLM models—including multimodal ones like Qwen. Through SOTA, Graiphic’s AI framework, LabVIEW can now:

- Load and Execute GGUF LLM Models: Engineers can deploy Qwen directly inside their LabVIEW projects, eliminating the need to switch to external environments or programming languages.
- Enhance Industrial Control and Data Analysis: By combining real-time data acquisition with advanced LLM inference, users can build sophisticated automation pipelines without leaving LabVIEW.
- Unlock Latest AI Features: Generative capabilities, such as text-to-image synthesis or visual understanding, become accessible with minimal configuration, broadening the scope of AI-driven solutions in fields like predictive maintenance or process optimization.
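Because the toolkit works with GGUF model files, it can help to confirm that a downloaded file really is GGUF before pointing LabVIEW at it. The sketch below is a standalone Python check, not part of SOTA or the GenAI Toolkit. It assumes a little-endian GGUF file of version 2 or later, where the header is the 4-byte magic `GGUF`, a 32-bit version, and two 64-bit counts; `read_gguf_header` and `GGUFHeader` are names introduced here for illustration.

```python
import struct
from typing import NamedTuple

GGUF_MAGIC = b"GGUF"
HEADER_SIZE = 24  # 4-byte magic + uint32 version + two uint64 counts


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def read_gguf_header(path: str) -> GGUFHeader:
    """Read the fixed-size header of a little-endian GGUF file (version 2 or later)."""
    with open(path, "rb") as f:
        raw = f.read(HEADER_SIZE)
    if len(raw) < HEADER_SIZE or raw[:4] != GGUF_MAGIC:
        raise ValueError(f"{path} does not look like a GGUF file")
    # "<IQQ": little-endian uint32 version, uint64 tensor count, uint64 metadata count.
    version, tensor_count, kv_count = struct.unpack("<IQQ", raw[4:HEADER_SIZE])
    return GGUFHeader(version, tensor_count, kv_count)
```

Calling `read_gguf_header` on a model file returns the format version along with the tensor and metadata counts, and raises `ValueError` for files that are truncated or in another format.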

The Growing Influence of Chinese Open-Source Models

While much of the global AI community keeps its attention on Meta’s Llama releases, China has rapidly established itself as a leader in open-source LLM innovation. Models like Qwen 2.5 VL and DeepSeek R1 reportedly match or outperform established Western competitors, particularly in multimodal processing and multilingual tasks.

This shift highlights China’s increasing role in shaping next-generation AI platforms. As more software infrastructures evolve to support new models, including integration into LabVIEW via SOTA and the GenAI Toolkit, Chinese open-source LLMs are poised to redefine research, development, and industrial deployment strategies worldwide.

Now Available on SOTA: How to Install Qwen 2.5 VL in 3 Steps

Qwen 2.5 VL is now available directly within the SOTA ecosystem! To get started:

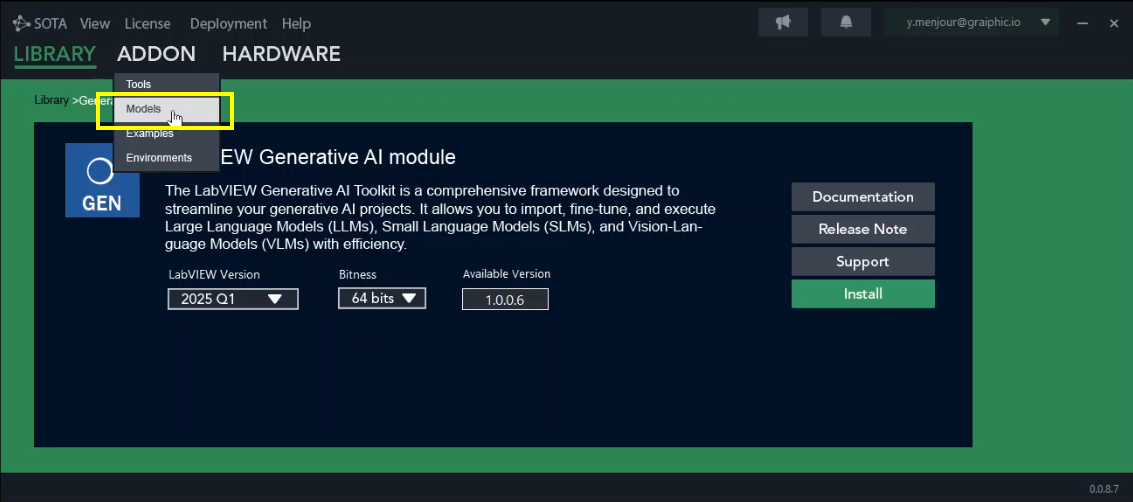

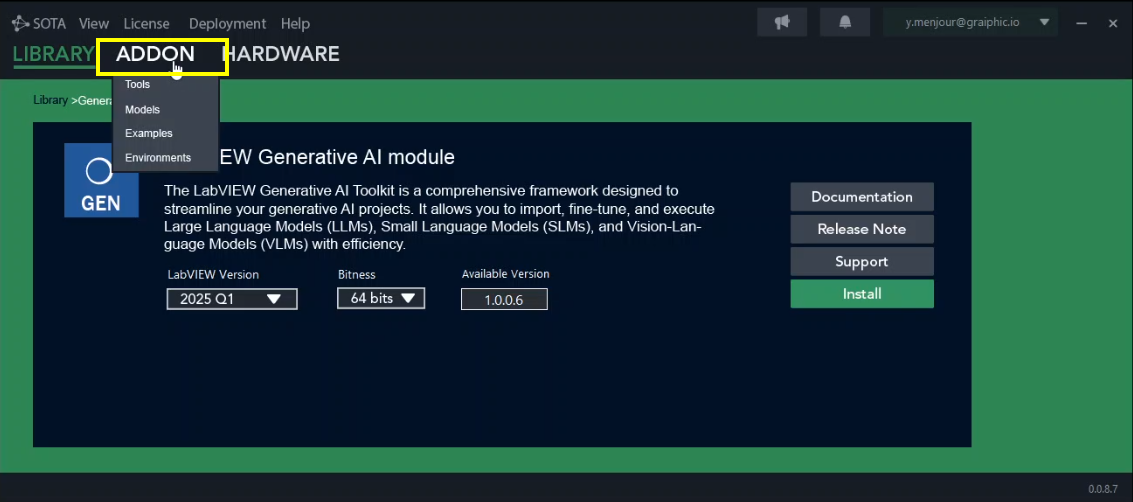

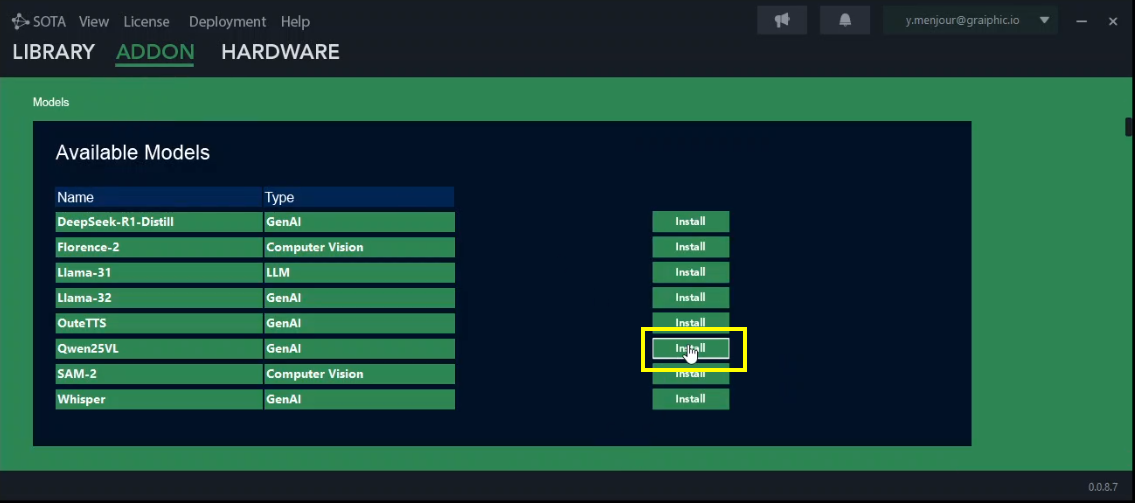

Step 1: Open the ADDON Menu

Navigate to the ADDON section in the SOTA interface.

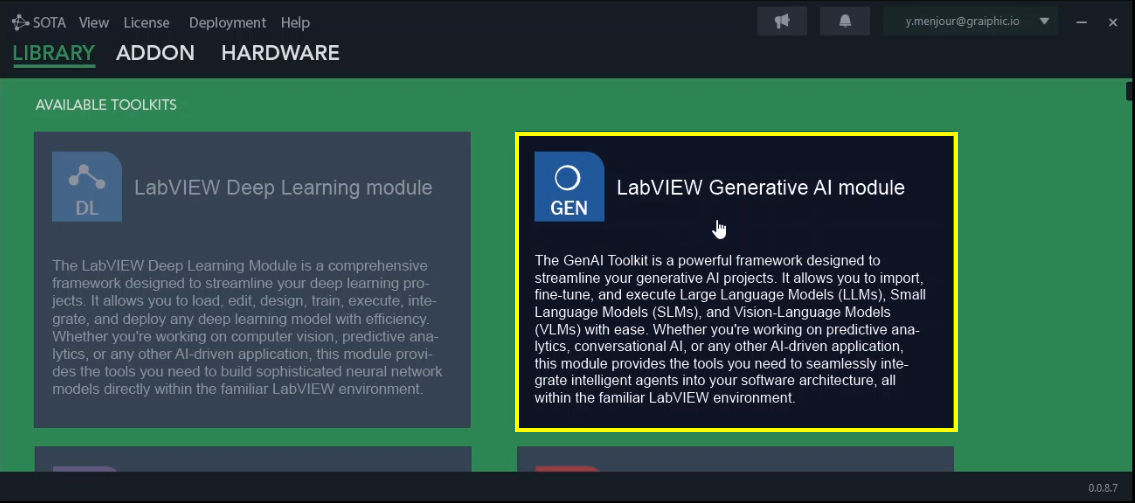

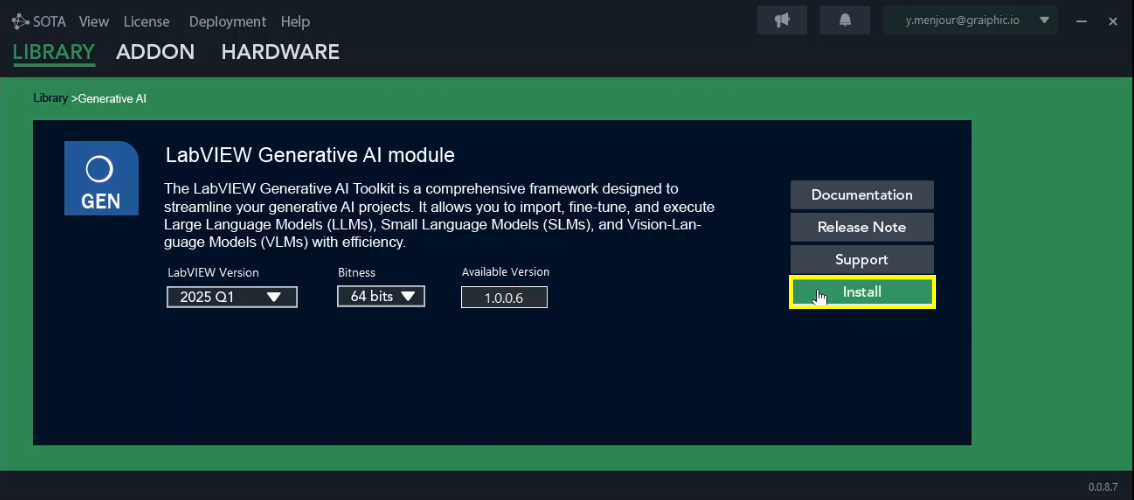

Step 2: Go to “Models” and Select Qwen 2.5 VL

Click Models, select Qwen25VL in the list of available models, then click Install.

Step 3: Make Sure GenAI Toolkit is Installed

Go to the GenAI section under Library and click Install to enable full compatibility with Qwen and other GGUF models.

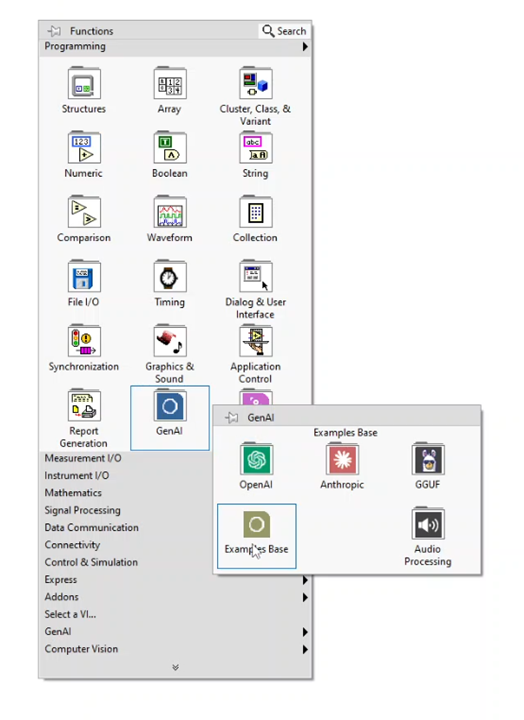

You can now load Qwen directly into LabVIEW by using the example loader provided in the GenAI Toolkit palette. Simply open LabVIEW, navigate to the GenAI Toolkit examples, and launch the demo reader to initialize the Qwen 2.5 VL model effortlessly. This makes it easier than ever to experiment with advanced multimodal AI inside your native LabVIEW environment.

Enjoy!

You’re now ready to integrate one of the most powerful multimodal models into your LabVIEW workflow. Whether it’s for industrial applications, research, or educational demos, Qwen 2.5 VL brings world-class AI capabilities right inside your engineering stack.

The integration of Qwen 2.5 VL Instruct 7B into LabVIEW through Graiphic’s GenAI Toolkit marks a significant step in adopting LLMs in industrial and scientific environments. The rise of Chinese models, combined with solutions like SOTA, paves the way for a new generation of embedded, autonomous, and highly efficient AI applications.

With the rapid evolution of open-source AI, one question remains: Who will dominate the next generation of open-source LLMs?