Open-source language models are evolving quickly, and Chinese models such as Qwen stand out among the latest releases. Developed by the Alibaba Cloud team, Qwen 2 VL Instruct 7B (published as Qwen2-VL-7B-Instruct) is a 7-billion-parameter model designed to process both text and images, with advanced instruction-following and multimodal vision capabilities.

Origins and Features of Qwen 2 VL Instruct 7B

Qwen 2 VL 7B is part of Alibaba’s Qwen lineup, a family of models that is gradually establishing itself as a serious alternative to Western LLMs. This multimodal model integrates several notable technical improvements:

- Advanced understanding of images and text, thanks to an architecture optimized for multimodal processing.

- Multilingual support, covering not only European languages but also Japanese, Korean, Arabic, and Vietnamese.

- Intelligent management of visual and textual inputs, facilitating integration into diverse AI applications.

With these advancements, Qwen 2 VL Instruct 7B positions itself as one of the most capable open-source multimodal models available today, in a field that has so far been dominated by alternatives such as Meta’s LLaMA and the models from Mistral AI.

Integrating Qwen 2 VL 7B into LabVIEW with SOTA and the GenAI Toolkit

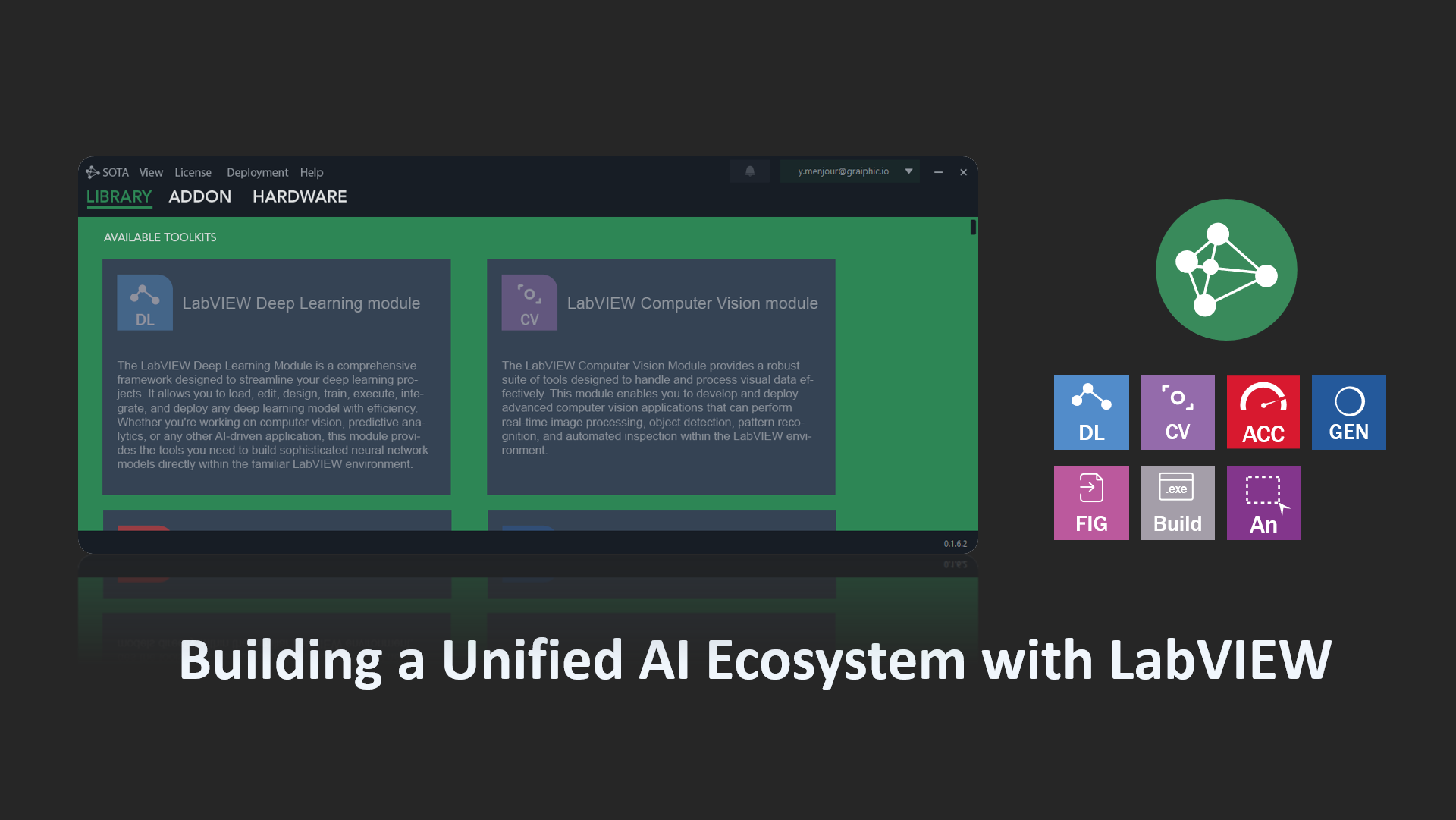

At Graiphic, we have always sought to democratize access to advanced AI for engineers and automation researchers. That’s why we developed the GenAI Toolkit, a new solution that allows LabVIEW developers to easily leverage GGUF LLM models, including multimodal models like Qwen.

With SOTA, our AI framework for LabVIEW, it is now possible to:

- Load and run any GGUF LLM model directly in LabVIEW.

- Integrate these models into analysis and industrial control workflows without leaving the LabVIEW environment.

- Use the latest generative AI innovations without having to code in Python or C++.

The GenAI Toolkit thus brings a new dimension to LLM utilization, enabling seamless integration into supervision, predictive maintenance, and many other applications.
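
For readers unfamiliar with GGUF, the container format mentioned above, the following minimal sketch shows what the start of such a file looks like. It is written in Python purely for illustration and does not reflect how the GenAI Toolkit or SOTA load models internally. It assumes a little-endian GGUF file of version 2 or later, where the fixed header holds the magic bytes `GGUF`, a 32-bit version, a 64-bit tensor count, and a 64-bit metadata entry count. The function names are hypothetical.

```python
import struct

GGUF_MAGIC = b"GGUF"  # first four bytes of every GGUF file
HEADER_SIZE = 24      # 4 (magic) + 4 (version) + 8 (tensor count) + 8 (metadata count)


def read_gguf_header(data: bytes) -> dict:
    """Parse the fixed-size GGUF header (assumes version >= 2, little-endian)."""
    if len(data) < HEADER_SIZE:
        raise ValueError("not enough bytes for a GGUF header")
    if data[:4] != GGUF_MAGIC:
        raise ValueError("missing GGUF magic bytes; not a GGUF file")

    # The version is an unsigned 32-bit integer stored right after the magic.
    (version,) = struct.unpack_from("<I", data, 4)
    if version < 2:
        raise ValueError("GGUF version 1 uses a different header layout")

    # Two unsigned 64-bit integers follow: tensor count and metadata key/value count.
    tensor_count, metadata_kv_count = struct.unpack_from("<QQ", data, 8)
    return {
        "version": version,
        "tensor_count": tensor_count,
        "metadata_kv_count": metadata_kv_count,
    }


def read_gguf_header_from_file(path: str) -> dict:
    """Hypothetical convenience wrapper: read only the header bytes from disk."""
    with open(path, "rb") as f:
        return read_gguf_header(f.read(HEADER_SIZE))
```

Calling `read_gguf_header_from_file` on a downloaded model file is a quick way to confirm that the file really is a GGUF file before trying to load it in any runtime.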

The Rise of Chinese Open-Source Models

While the AI community awaits Meta’s next LLaMA release, China is clearly taking the lead in open-source LLMs. Models like Qwen 2 VL and DeepSeek R1 perform on par with Western alternatives and in some cases surpass them, particularly in multimodal capabilities and multilingual understanding.

This Chinese momentum in open-source LLMs could redefine market dynamics, especially as software infrastructure adapts quickly to these new models, as their integration into LabVIEW via SOTA and the GenAI Toolkit demonstrates.

The integration of Qwen 2 VL Instruct 7B into LabVIEW through Graiphic’s GenAI Toolkit marks a significant step in adopting LLMs in industrial and scientific environments. The rise of Chinese models, combined with solutions like SOTA, paves the way for a new generation of embedded, autonomous, and highly efficient AI applications.

With the rapid evolution of open-source AI, one question remains: Who will dominate the next generation of open-source LLMs?