From “G” to a Unified Ecosystem: The Evolution of Our Vision

Our project, initially named “G” in homage to LabVIEW’s graphical programming language, has grown far beyond its original scope. After three years of progress, the name no longer reflects the scale or depth of what we have built. Our primary goal is no longer just to offer a high-performance deep learning framework for LabVIEW, but to highlight the innovation and unique value of our unified, comprehensive ecosystem: SOTA. We now aim to provide a full catalog of AI solutions.

Expanding from HAIBAL to FIG and Beyond

We began by developing HAIBAL, an easy-to-use and regularly updated deep learning library. The project initially focused on building a LabVIEW-specific toolkit inspired by standards such as Keras. Growing demand then led us to create FIG (File Importer and Generator), a tool that makes it easy to import Keras models and therefore to migrate existing projects into our environment.

Meeting Market Demands with TIGR Vision and GIM

Responding to market needs, we quickly expanded our offering with TIGR Vision, a tool specialized in image display and manipulation that provides a comprehensive range of computer vision functionality. We also created GIM (Graphic Infrastructure Manager), designed to simplify the distribution of our solutions while keeping them independent of third-party platforms.

Innovating with PERRINE and Annotator: Pushing Boundaries in AI

In the same spirit of innovation, we developed the PERRINE toolkit, which provides optimized GPU data processing for efficient pre- and post-processing around model execution with HAIBAL on GPUs. The positive reception of these developments motivated us to further enrich our catalog, including with Annotator, a high-performance annotation tool for computer vision that is currently in development.

Preparing for the Future with LM Studio

Meanwhile, growing interest in Large Language Models (LLMs), Small Language Models (SLMs), and Visual Language Models (VLMs) has led us to plan the future development of LM Studio, a tool that will facilitate the customization and deployment of these models.

Embracing ONNX Runtime for Greater Performance and Flexibility

Our key innovation addresses a fundamental need: user-friendly tools that cover all AI development requirements, are available at no extra cost, are easy to deploy, and are regularly updated. Anticipating upcoming optimization challenges and aware of HAIBAL’s limitations, we decided this year to refocus our technical efforts on a complete overhaul. At the core of this transformation is our adoption of ONNX Runtime, a high-performance open-source inference engine. It provides optimized inference, broad compatibility with models from frameworks such as PyTorch and TensorFlow through the ONNX format, and straightforward deployment.

Enhancements to FIG and GIM: Boosting Compatibility and Usability

FIG has also received significant updates: it now offers full compatibility with both PyTorch and TensorFlow and integrates Netron for modern model visualization. GIM is undergoing a major update as well: in addition to managing installations, it will handle online licensing through a proprietary cloud infrastructure and allow one-click downloads of ready-to-use models. Its new user-friendly interface, inspired by modern platforms such as Adobe Creative Cloud and Steam, will improve the user experience.

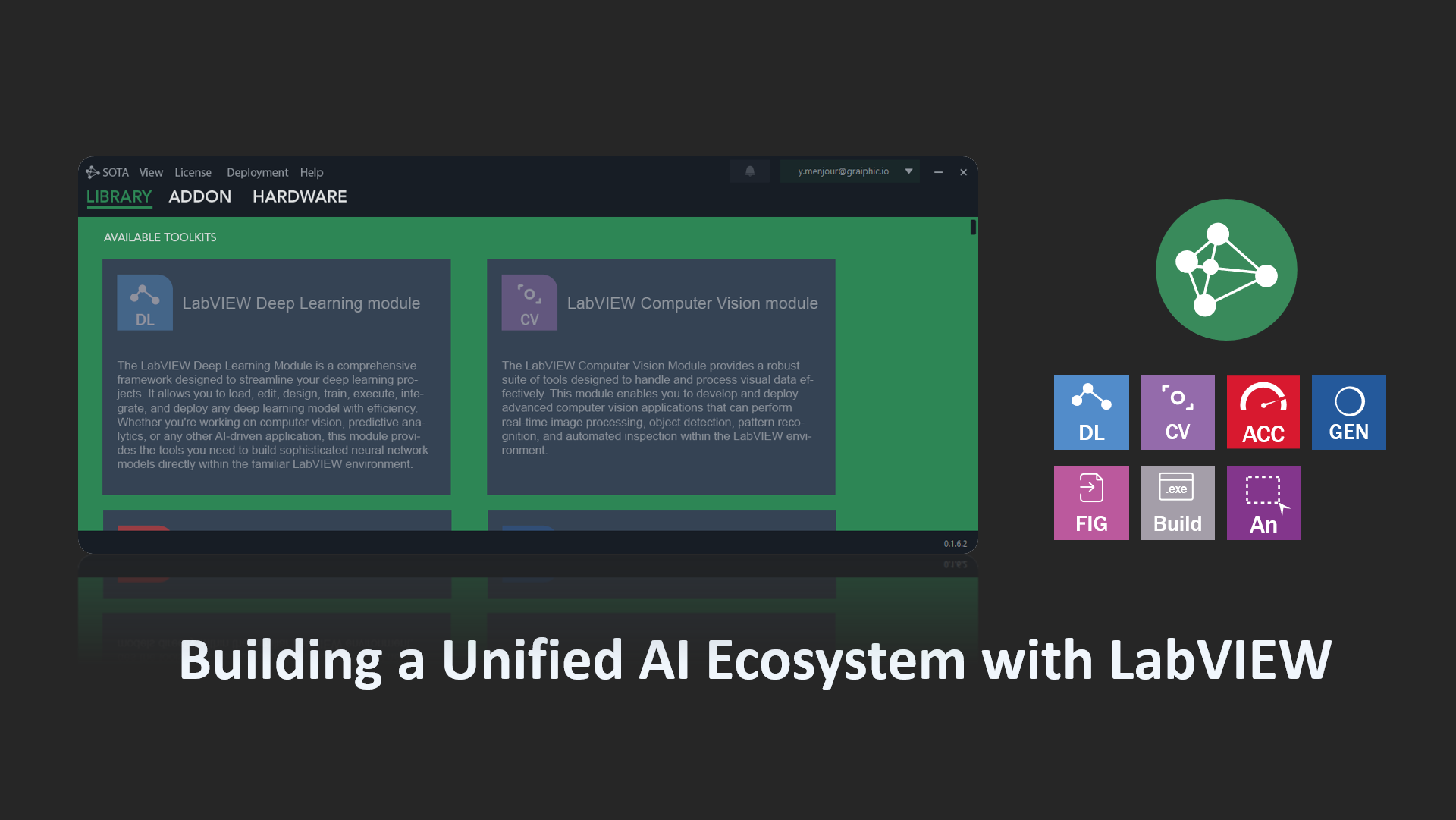

A Rebranding for Clarity and Simplicity

Finally, given the scale of the upcoming transformations, we have decided to rebrand our solutions globally: HAIBAL will become the Deep Learning Toolkit, TIGR will become the Computer Vision Toolkit, and PERRINE will be renamed the GPU Accelerator. This rebranding aims to make each of our solutions clearer and easier to identify. All of these innovations will launch in October 2024.

Integration into LabVIEW

With the LabVIEW deep learning module, LabVIEW users will soon benefit from advanced features, including the ability to run models such as Llama 3.1 directly in LabVIEW. The module will deliver top-tier performance for demanding workloads, including complex computer vision tasks. Its key features include:

- Full compatibility with existing frameworks: Keras, TensorFlow, PyTorch, ONNX.

- Impressive performance: The new LabVIEW deep learning tools will run 50 times faster than the previous generation and 20% faster than PyTorch.

- Extended hardware support: CUDA and TensorRT for NVIDIA, ROCm for AMD, oneAPI for Intel.

- Maximum modularity: Define your own layers and loss functions.

- Graph neural networks: Complete and advanced integration.

- Annotation tools: An annotator as efficient as Roboflow, integrated into our software suite.

- Model visualization: Graphical model summaries powered by Netron.

- Generative AI: A complete library for running, fine-tuning, and setting up RAG with Llama 3 and Phi-3 models.