LabVIEW offers significant advantages over traditional text-based languages, most notably the ability to implement agents rapidly by integrating a model into an efficient, well-adapted software architecture. Building on these strengths, we are excited to announce the development of a new generation of LabVIEW modules and tools focused on robotics, AI, and automation.

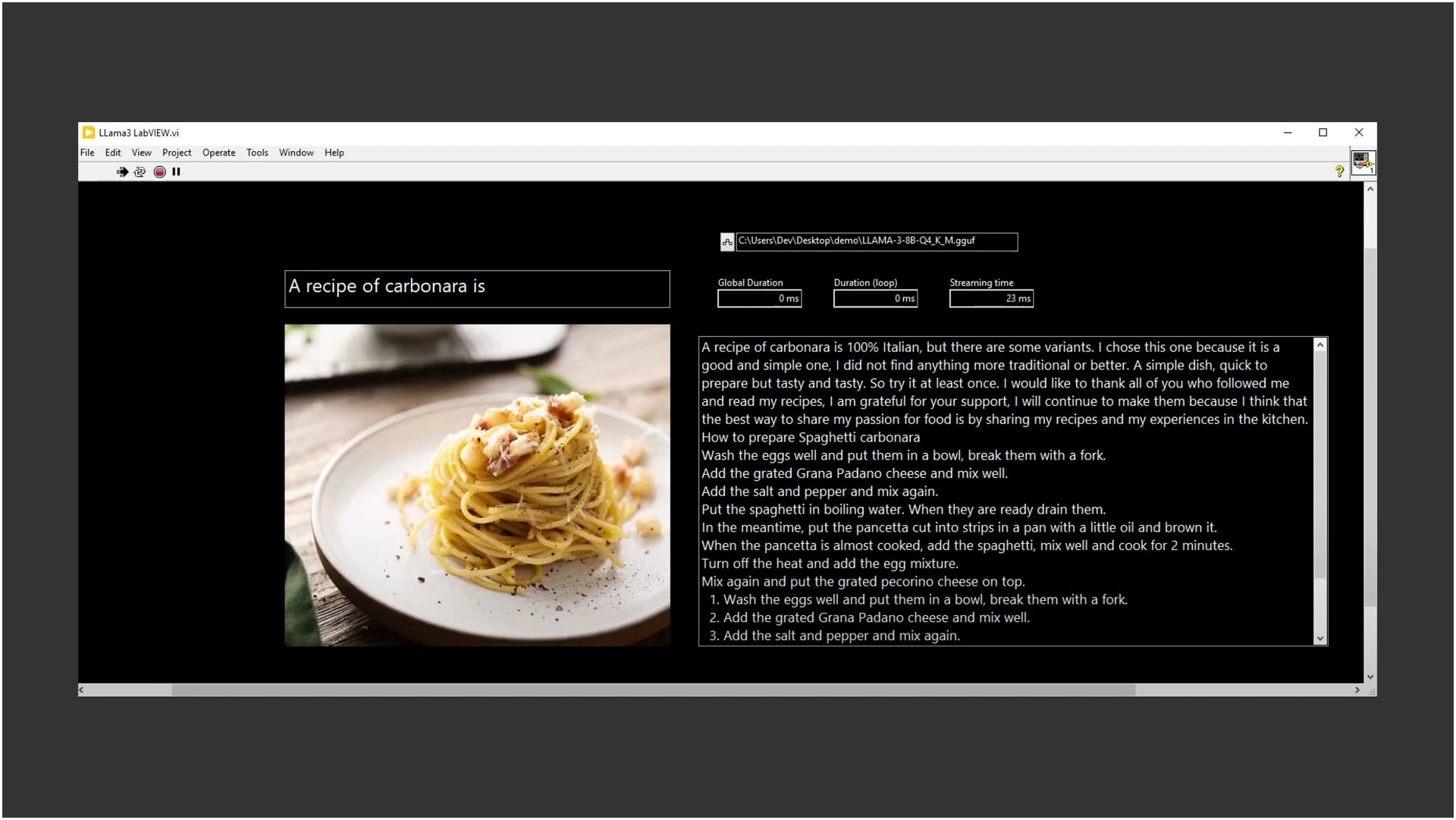

We are thrilled to announce that LLaMA 3, released by Meta on April 18, 2024, arrives in LabVIEW today.

To run LLaMA 3 within LabVIEW, we use the LabVIEW Deep Learning Module to execute inference. This integration brings LLaMA 3's advanced large language model (LLM) and generative AI capabilities to our AI-driven automation and robotics work.

With this integration, users can leverage LLaMA 3’s robust features, including improved natural language understanding, efficient processing, and the ability to generate sophisticated outputs with high accuracy. This opens up new possibilities for innovation in various fields such as autonomous systems, smart manufacturing, and intelligent control systems.

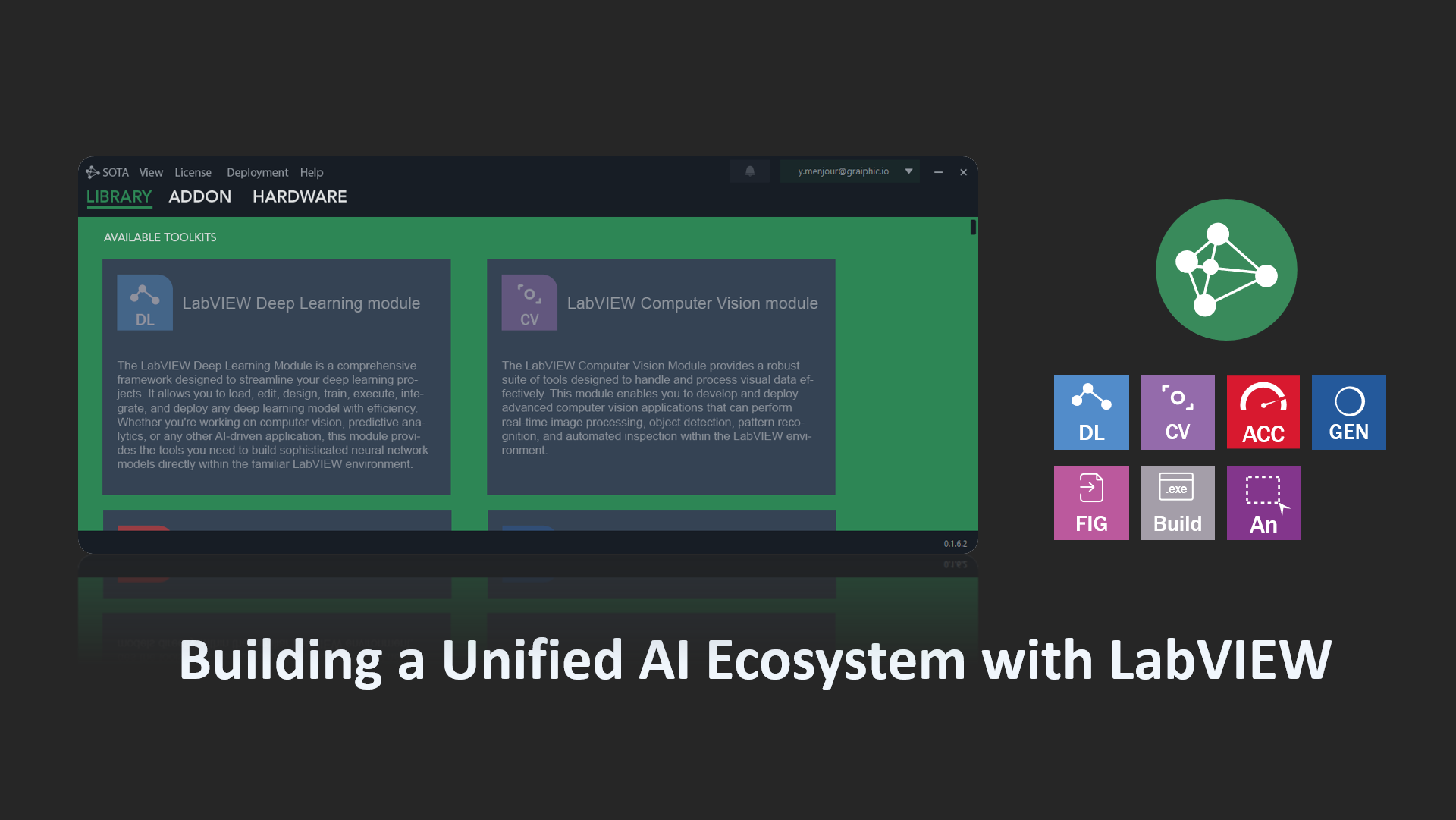

Our new set of modules and tools aims to simplify the deployment of sophisticated AI applications within LabVIEW, providing a seamless interface and enhanced performance. We believe this will significantly accelerate development cycles and bring advanced generative AI capabilities to a broader range of applications and users.

Integration into LabVIEW

With the LabVIEW Deep Learning Module, LabVIEW users will soon be able to run advanced models such as YOLOv8 OBB (oriented bounding box detection) directly in LabVIEW, with top-tier performance on complex computer vision tasks. Key features of this module include:

- Full compatibility with existing frameworks: Keras, TensorFlow, PyTorch, ONNX.

- Impressive performance: The new LabVIEW deep learning tools run 50 times faster than the previous generation (HAIBAL 1.0) and 20% faster than PyTorch.

- Extended hardware support: CUDA and TensorRT for NVIDIA, ROCm for AMD, oneAPI for Intel.

- Maximum modularity: Define your own layers and loss functions.

- Graph neural networks: Complete and advanced integration.

- Annotation tools: An annotator as efficient as Roboflow, integrated into our software suite.

- Model visualization: Utilizing Netron for graphical model summaries.

- Generative AI: A complete library for running, fine-tuning, and setting up retrieval-augmented generation (RAG) with LLaMA 3, Phi-3, and Florence-2 models.

Example Video

With these advancements, integrating YOLOv8 OBB into your LabVIEW projects has never been easier, letting you use cutting-edge computer vision capabilities directly within the LabVIEW environment. Whether you are working on a robotics project, an AI-driven application, or an automation task, the new modules will streamline your workflow and improve your project's performance.

Conclusion

With the upcoming next-generation LabVIEW Deep Learning Module, LLaMA 3 will be available as an example. This integration will excel at tasks such as natural language understanding, generative AI, and efficient processing for AI-driven applications in robotics, automation, and beyond. This powerful combination promises to unlock new potential for developing sophisticated, intelligent systems and to push the boundaries of what is achievable in these fields.