For the first time ever, we have successfully trained a YOLO 11 model entirely within LabVIEW, using our custom Deep Learning Toolkit from the SOTA suite—without reusing a single line of Ultralytics’ source code.

This is a major breakthrough: until now, training workflows for YOLO models have relied heavily on the Ultralytics codebase, which is distributed under the AGPL-3.0 license. This license imposes strict copyleft conditions: any software using their training code (even just to fine-tune a model) must open-source its own codebase under the same license. That includes all downstream products using those models. To avoid this, companies must purchase a commercial license from Ultralytics.

We’ve broken that dependency. By building a fully ONNX Runtime-based training pipeline for YOLO 11, we’re providing a sovereign alternative that does not depend on Ultralytics’ code or licensing, suitable for industrial, academic, and research contexts that require confidentiality and control.

Achieving this milestone required overcoming numerous technical challenges:

- Implementing missing ONNX layers with full backpropagation support: We added backward computation for several layers that were either missing or incomplete in ONNX Runtime, including `Sum`, `ReduceMax`, `GlobalMaxPool`, and even `Atan`, ensuring end-to-end differentiability.

- Extending custom loss functions: ONNX Runtime originally provided only basic loss function support. We enabled the definition of fully customizable loss functions and contributed around ten new standard loss types that were previously unavailable.

- Full pipeline conversion to ONNX: Porting the entire YOLO training pipeline to ONNX required deep refactoring, especially to ensure shape compatibility, model graph integrity, and training consistency between forward and backward passes.

- Gradient and variant clipping: We had to implement advanced clipping strategies to manage gradients and intermediate variable values, improving training stability, especially in dynamic or branching architectures.

- “Stop gradient” support (PyTorch equivalent: `tensor.detach()`): One of the most technically demanding aspects was implementing the equivalent of PyTorch’s `detach()` within ONNX Runtime. This feature allows developers to cancel backpropagation on a branch of the model graph, which is essential for certain exotic training flows (e.g., reinforcement learning, hybrid architectures). ONNX did not support this natively, so we built it.
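
To make the stop-gradient idea concrete, here is a minimal sketch in plain Python. It is not the toolkit's implementation and does not use ONNX Runtime; the `Value` class and its methods are hypothetical names chosen for illustration. It builds a tiny scalar reverse-mode autodiff graph with a backward rule for `atan` (using d/dx atan(x) = 1 / (1 + x²)) and a `detach()` that keeps the forward value but cuts the branch out of backpropagation.

```python
import math


class Value:
    """Scalar node in a tiny reverse-mode autodiff graph (illustrative only)."""

    def __init__(self, data, parents=(), backward_rule=None):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents
        # Maps the upstream gradient to one gradient per parent.
        self._backward_rule = backward_rule

    def __add__(self, other):
        return Value(self.data + other.data, (self, other), lambda g: (g, g))

    def __mul__(self, other):
        return Value(self.data * other.data, (self, other),
                     lambda g: (g * other.data, g * self.data))

    def atan(self):
        # d/dx atan(x) = 1 / (1 + x^2)
        return Value(math.atan(self.data), (self,),
                     lambda g: (g / (1.0 + self.data ** 2),))

    def detach(self):
        # Same forward value, but no parents: backpropagation stops here.
        return Value(self.data)

    def backward(self):
        # Post-order traversal puts parents before children; walking it in
        # reverse guarantees a node's gradient is complete before it is pushed
        # to its parents.
        order, seen = [], set()

        def visit(node):
            if id(node) in seen:
                return
            seen.add(id(node))
            for parent in node._parents:
                visit(parent)
            order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node._backward_rule is None:
                continue
            for parent, g in zip(node._parents, node._backward_rule(node.grad)):
                parent.grad += g


# Without detach: dy/dx = 2x + 1 / (1 + x^2)
x = Value(2.0)
y = x * x + x.atan()
y.backward()
full_grad = x.grad

# With detach on the atan branch: only the x * x term contributes, dy/dx = 2x
x2 = Value(2.0)
y2 = x2 * x2 + x2.atan().detach()
y2.backward()
blocked_grad = x2.grad
```

Both graphs compute the same forward value; only the gradient reaching the input differs. That is the behavior `tensor.detach()` provides in PyTorch, reproduced here at toy scale.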

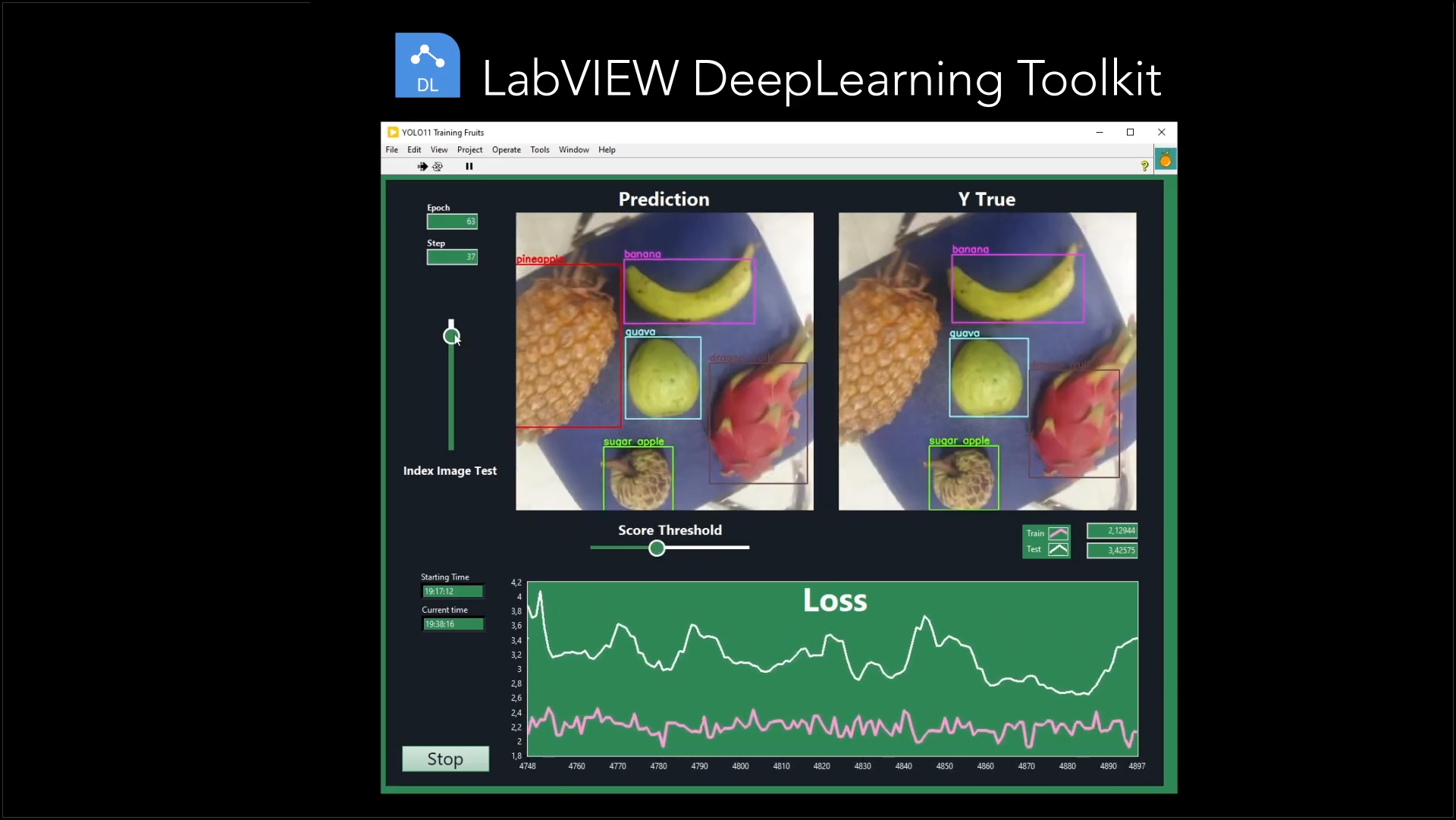

- Real-time training visualization: By combining ONNX Runtime with LabVIEW, we achieved live visualization of training dynamics, allowing us to track model evolution at each iteration, which is exceptionally valuable for debugging and scientific insight.
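
Of the challenges listed above, clipping is the easiest to illustrate in isolation. The post does not say which clipping strategies the toolkit uses, so the sketch below is an assumption: it shows two common ones in plain Python, global-norm clipping for gradients and element-wise value clipping for intermediate values. The function names `clip_by_global_norm` and `clip_by_value` are hypothetical.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale every gradient by one shared factor so the joint L2 norm is <= max_norm."""
    total_norm = math.sqrt(sum(g * g for tensor in grads for g in tensor))
    if total_norm <= max_norm:
        # Already within bounds: return copies unchanged.
        return [list(tensor) for tensor in grads], total_norm
    scale = max_norm / total_norm
    return [[g * scale for g in tensor] for tensor in grads], total_norm


def clip_by_value(values, low, high):
    """Clamp each element into the interval [low, high]."""
    return [min(max(v, low), high) for v in values]


# Two flattened gradient tensors; joint norm = sqrt(9 + 16 + 0 + 144) = 13
grads = [[3.0, 4.0], [0.0, 12.0]]
clipped, norm_before = clip_by_global_norm(grads, max_norm=6.5)

# Clamp intermediate values into [-1, 1]
activations = clip_by_value([-3.0, 0.5, 7.0], low=-1.0, high=1.0)
```

Global-norm clipping preserves the direction of the overall update because every tensor is scaled by the same factor, whereas value clipping bounds each element independently.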

Looking Ahead

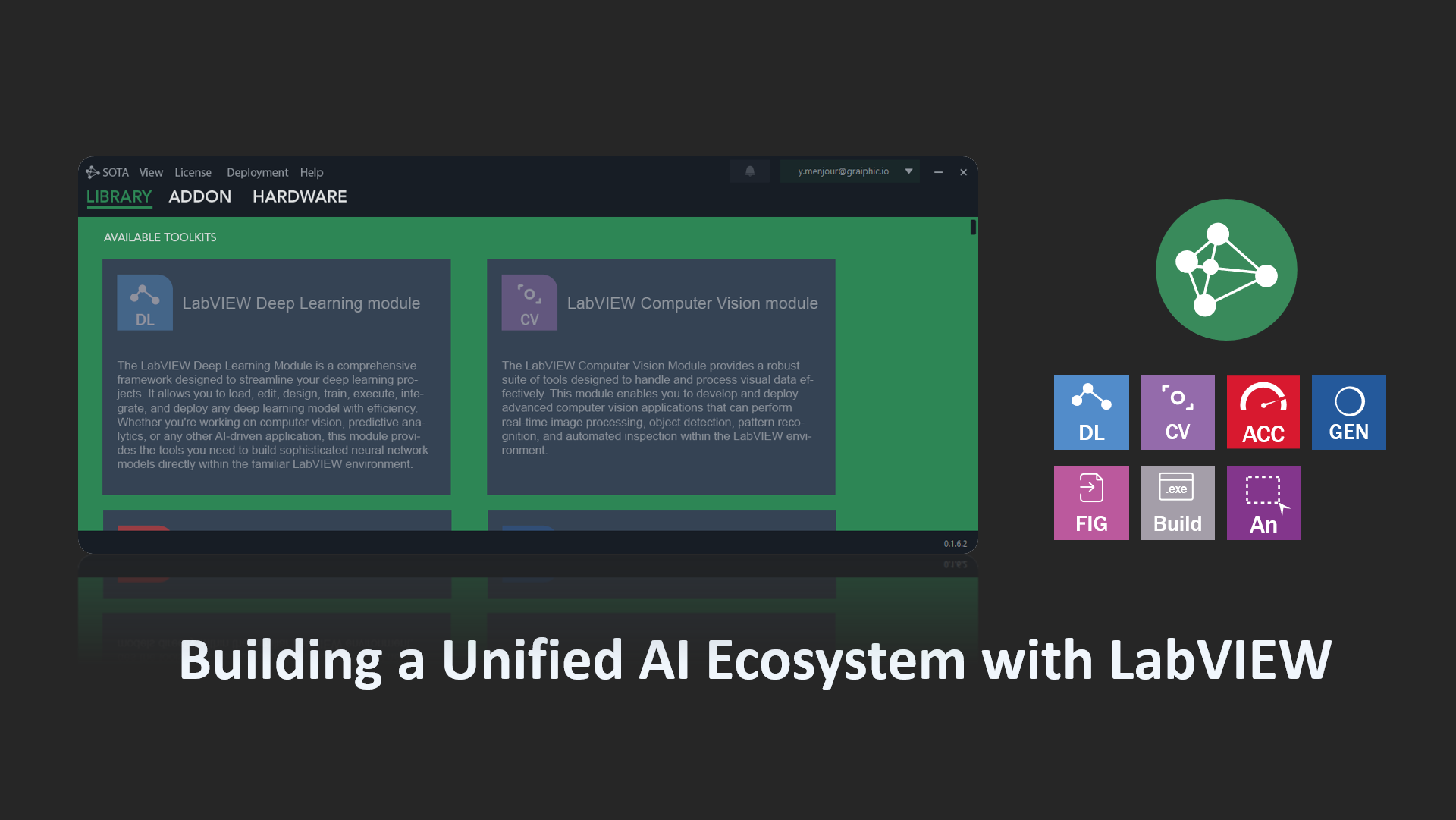

The result: Graiphic’s Deep Learning Toolkit for LabVIEW is now a true alternative to Python-based training stacks like PyTorch or TensorFlow, entirely within the ONNX ecosystem. This is a world-first, opening the door to high-performance model training in environments where Python is not an option.

We’re already working on the next milestones: training YOLO 11 variants for segmentation and oriented bounding boxes—always with a focus on modularity, compliance, and industrial usability.