Hook

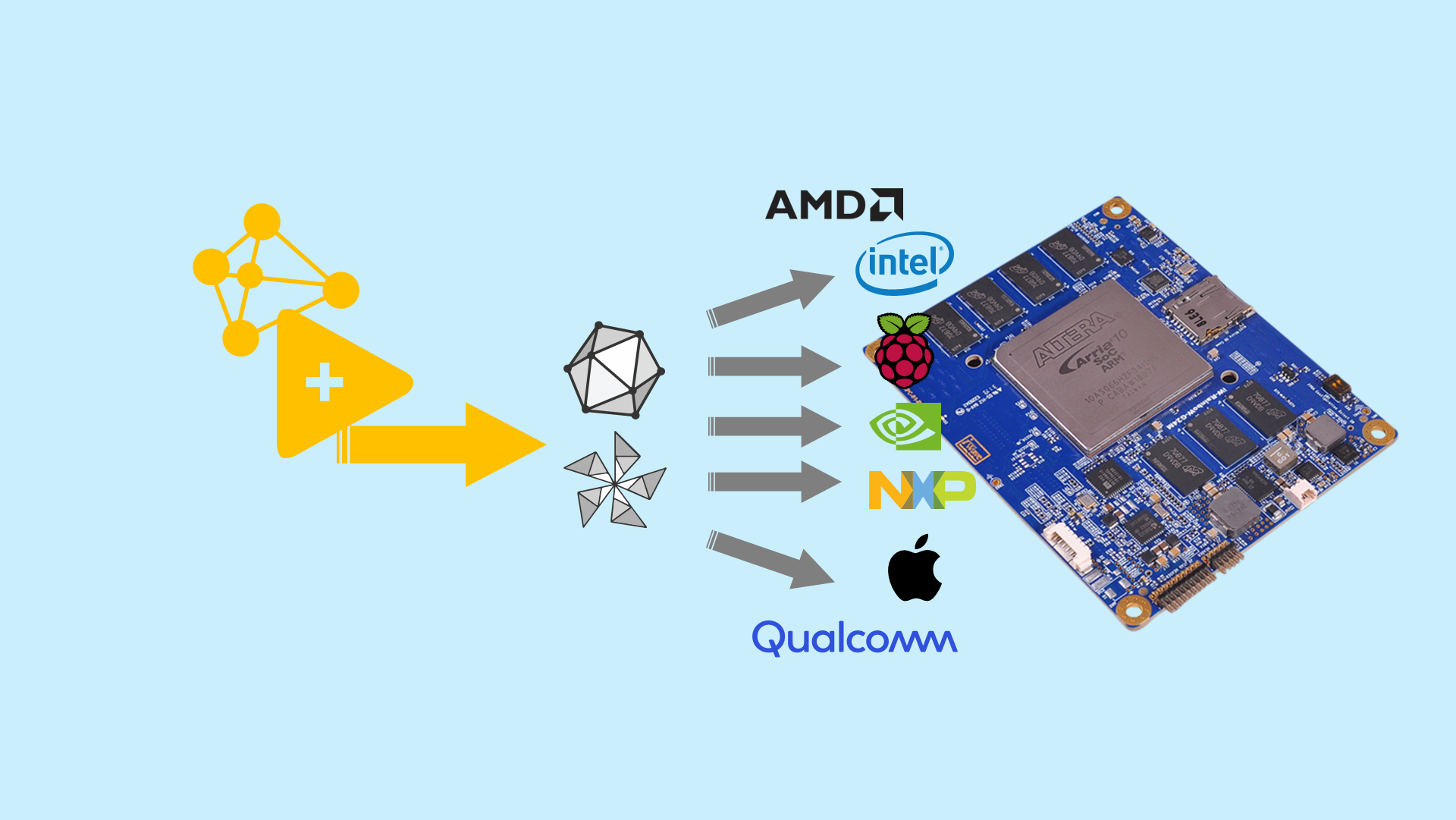

What if LabVIEW became a universal designer for software-and-hardware graphs: you sketch a flow in the IDE, export a single ONNX file, and

run it on any SoC—from Raspberry Pi to GPUs and FPGAs—without shipping the LabVIEW runtime?

What if that same graph could sense, decide, and actuate (GPIO, DMA, timers, sync) through ONNX Runtime and dedicated Execution Providers?

One graph to perceive, decide, and act—portable across hardware.

In two sentences

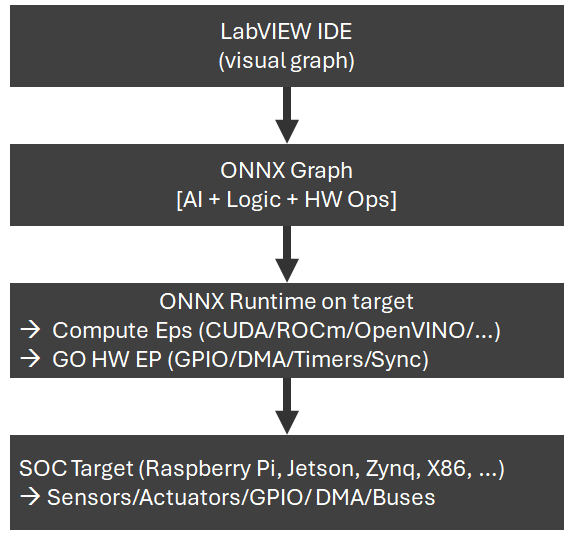

- LabVIEW is the visual editor that authors an ONNX graph extended with hardware operators (GO HW).

- ONNX Runtime is the universal executor that partitions and maps the graph onto the target via Execution Providers (EPs).

Why now

AI meets physical systems everywhere: robots, test benches, edge devices. Teams want one portable representation that captures AI, control logic,

and low-level I/O together. ONNX standardized AI inference. Graiphic’s next step is bringing hardware into the graph (GO HW) and

using LabVIEW to author it.

Outcome: less glue code, stronger traceability, homogeneous deployments—no more 32/64-bit wars and

no proprietary runtime on the target.

The idea, step by step

- Design in LabVIEW: build a graph mixing standard ONNX ops (math/control/AI) plus

com.graiphic.hardwareops (DMARead,GPIOSet,HWDelay,WaitSync, …). - Validate on PC: simulate/emulate, run unit tests.

- Partition with ONNX Runtime: send compute heavy parts to EPs (CUDA/ROCm/oneDNN/OpenVINO/…); route GO HW ops to a Hardware EP.

- Deploy the same

.onnxto the target (SoC, GPU, FPGA, VPU…).

Case study: Raspberry Pi as first public target

Goal: blink a LED via GPIO when an AI detection fires—all inside a single graph.

- Capture: a node reads frames.

- Inference: a light ONNX model detects the object.

- Decision: ONNX control nodes (

If/Loop) drive the logic. - Actuation:

GPIOSettoggles the LED withHWDelay+WaitSyncfor deterministic timing.

Deployment: copy the .onnx to Raspberry Pi; ONNX Runtime loads compute EPs (CPU/available accelerators) plus the GO HW EP for GPIO/Timers;

the same file runs, with no LabVIEW runtime on the device.

Energy efficiency and inference efficiency (maximum optimization)

Principle: place each operation where it consumes the least energy for the required performance, and remove useless work.

- Right-sized placement: heavy layers to GPU/NPU; light control to CPU; I/O ops to GO HW EP close to the metal. Less data motion → less energy.

- Zero-copy & DMA:

DMARead/Writeavoid redundant copies; streaming/alignment attributes cut memory traffic. - Operator fusion & scheduling: fuse kernels when possible; co-schedule I/O and compute to reduce stalls.

- Quantization & compilation: INT8/FP16 models and vendor compilers (TensorRT/TVM/oneDNN backends, where applicable) minimize FLOPs and memory.

- Event-driven I/O:

WaitSyncand hardware timers avoid busy polling, lowering idle power. - Thermal/power awareness: expose power-state hints; back-pressure framing maintains throughput without throttling.

Net effect: lower Joules per inference and higher inferences per watt while keeping deterministic I/O timing.

What this changes for teams

- Strong portability: one artifact from simulation to field deployment.

- Testability: each operator—AI, logic, I/O—is traceable and versioned.

- Interoperability: EPs bridge to vendor stacks (CUDA/XRT/Level Zero/…).

- Performance-per-watt: pick the optimal target without rewriting logic.

- Toward certifiability: future ONNX safety profiles with timing/contract attributes for regulated domains.

Potential markets & applications

- Industrial automation: deterministic control with embedded AI (vision-guided pick-and-place, inspection, condition monitoring).

- Test & measurement: portable test recipes; I/O encoded as graph nodes; reproducible benches across vendors.

- Robotics & AMRs: unified perception-control stacks, from prototype to fleet deployment.

- Aerospace/defense: portable mission graphs; clear timing and I/O contracts.

- Smart energy & utilities: edge anomaly detection with direct control over relays/meters.

- Agritech: camera-based detection + actuator control on low-power SoCs.

- Medical (non-diagnostic devices): embedded assistants and motor control with determinism.

- Smart buildings & IoT: sensor fusion and actuation under tight energy budgets.

How it works at runtime

ONNX Runtime partitions the graph: AI parts to compute EPs; GO HW ops to the Hardware EP.

Each GO HW operator has formal inputs/outputs/attributes/contracts and maps to vendor APIs (CUDA, XRT/Vitis, oneAPI/Level Zero, etc.).

The result is one executable graph that orchestrates AI + logic + physical I/O.

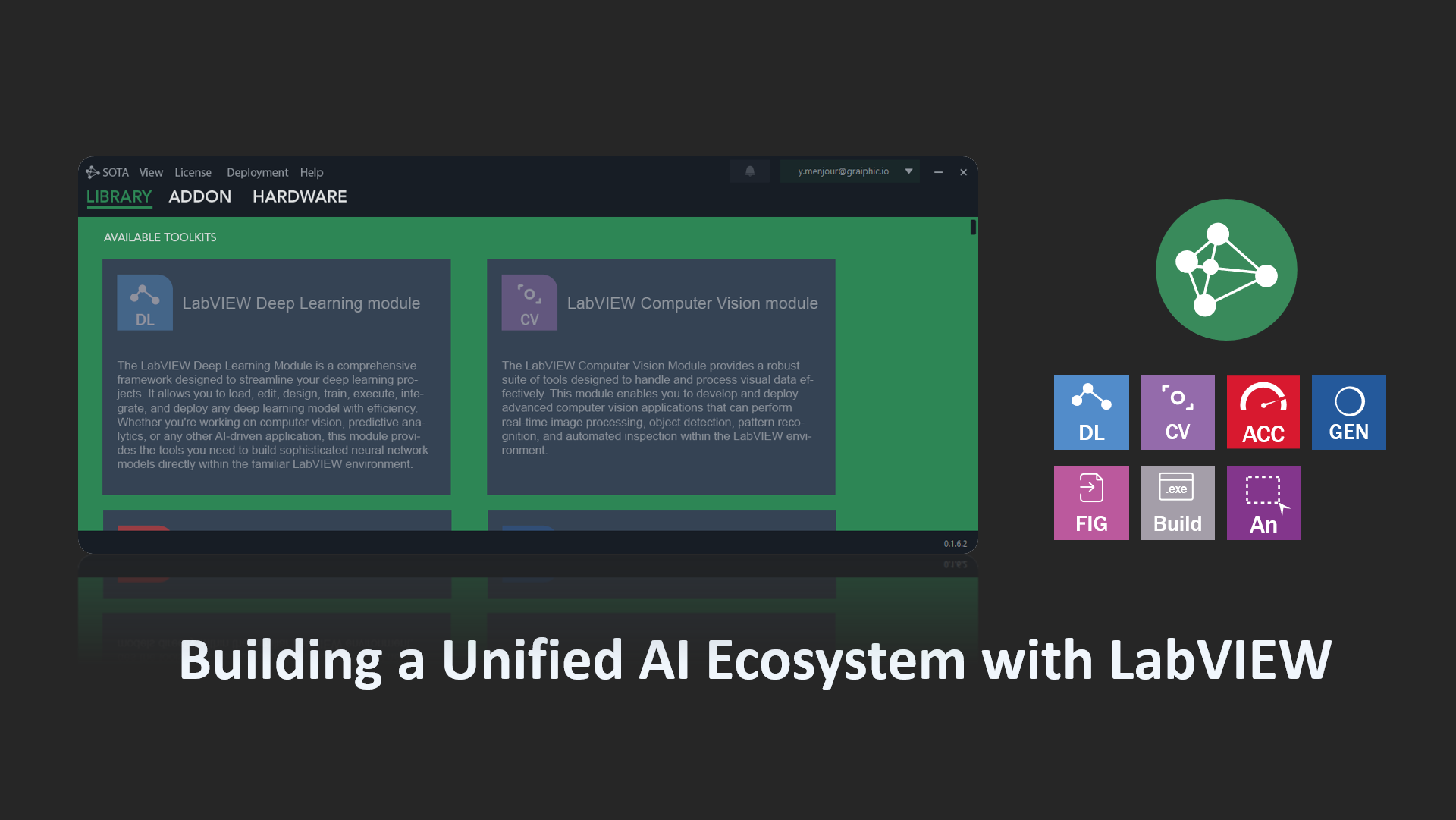

Roadmap

- Past: SOTA, Deep Learning Toolkit, Accelerator Toolkit.

- Now: ONNX GO HW—hardware operators + dedicated EP.

- v1: Raspberry Pi public demos.

- Next: Jetson, Zynq, x86 real-time, safety-oriented profiles, buses (CAN/SPI/I²C), HIL.

Quick FAQ

What if a HW node isn’t supported on a target?

Configurable policy: explicit error or software fallback with clear diagnostics.

What if the hardware can’t meet the requested contract?

The runtime raises a contract violation; rescheduling or an alternative path can be selected.

Is this real-time capable?

GO HW operators carry timing/sync attributes. The EP maps to low-level primitives (timers, events, DMA) to reduce jitter; profiling/tracing is built-in.

Does LabVIEW run on the device?

No. LabVIEW stays on the engineering station (design/validation). The device runs ONNX Runtime and the required EPs.

Call for partners

We are opening a technology door:

- LabVIEW → ONNX as the design pipeline.

- ONNX Runtime as the execution OS for graphs.

- GO HW as the portable hardware language.

If you want to co-build Raspberry Pi demos, contribute targets, or bring vendor EPs, reach out:

hello@graiphic.io.

Resources:

ONNX ·

ONNX Runtime ·

Graiphic videos ·

Articles

Graiphic’s word: we’re doing this—and we’ll catalog the hardware

This isn’t a thought experiment. We will ship a working pipeline and maintain a public catalog of supported targets and features.

Rollout will be staged: CPU-only first, then common accelerators, then extended I/O stacks. Vendor EPs and community adapters are welcome.

Initial, non-exhaustive catalog (planned & staged)

- CPUs: x86-64 (Intel/AMD), ARM64 (Cortex-A53/A72/A76/Neoverse N-class).

- SoCs (Edge/Linux): Raspberry Pi 5 (BCM2712), Raspberry Pi 4 (BCM2711), Rockchip RK3588/3566, NXP i.MX 8 family, TI Sitara AM62/AM64.

- NVIDIA Jetson: Nano/Xavier/Orin families.

- GPUs: NVIDIA (Turing/Ampere/Ada/Hopper), AMD (RDNA/CDNA via ROCm where available), Intel (Xe/ARC via oneAPI/Level Zero where available).

- FPGAs/ACAP: AMD-Xilinx Zynq-7000 & Zynq UltraScale+ MPSoC, Versal; Intel Agilex/Stratix (via vendor toolchains).

- VPUs/NPUs (edge accelerators): Intel Movidius Myriad X, Google Coral Edge TPU, NVDLA-based blocks, selected ARM NPUs where vendor bridges exist.

- Buses & I/O: GPIO, I²C, SPI, UART, PWM, CAN (progressive enablement per target).

Availability depends on EPs and adapters per platform; we’ll publish capability matrices and test suites with each release.