With perseverance, anything is achievable. It is hard going, but we are getting there.

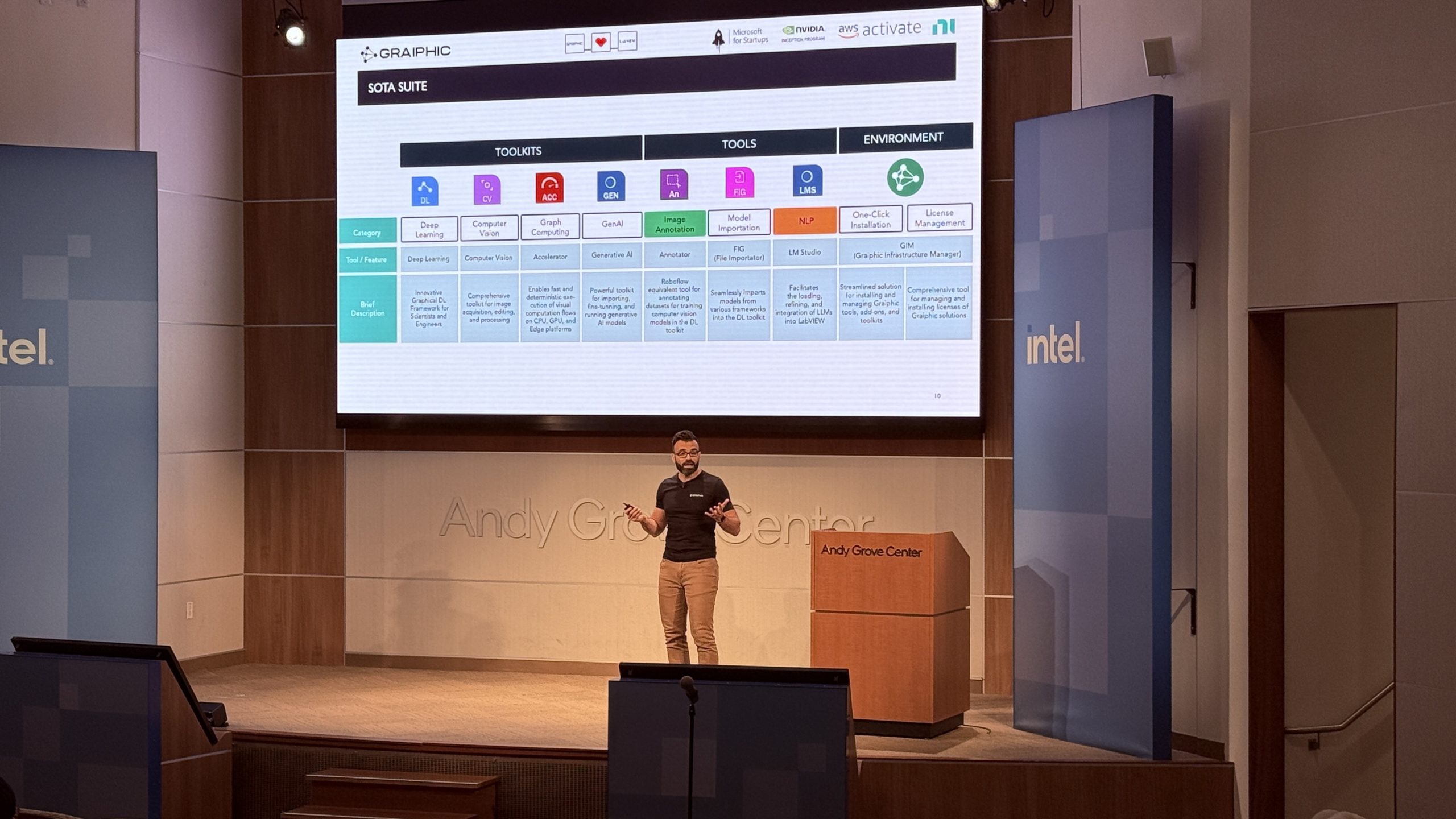

Eight months ago, PyTorch (Meta) and Keras (Google) already existed, yet we decided to write our own AI library. It is now done: the HAIBAL project is alive!

As self-declared outsiders, we know we are starting late, and we intend to catch up.

We can now import and edit any Keras model, which gives our users access to it in LabVIEW. Interoperability with PyTorch is planned for 2022. In the long term, we also aim to export our models to any format.

Our main development priorities are full portability to and from Keras and PyTorch, and the deployment of HAIBAL on most platforms (CUDA, OpenCL, Xilinx FPGA, Intel).

It would be a shame to do things halfway.

HAIBAL is a library developed entirely in LabVIEW. It is highly modular and designed to integrate seamlessly into industrial systems.

HAIBAL IN A FEW FIGURES

- 16 activation functions (ELU, Exponential, GELU, HardSigmoid, LeakyReLU, Linear, PRELU, ReLU, SELU, Sigmoid, SoftMax, SoftPlus, SoftSign, Swish, TanH, ThresholdedReLU)

- 84 functional layers (Dense, Conv, MaxPool, RNN, Dropout…)

- 14 loss functions (BinaryCrossentropy, BinaryCrossentropyWithLogits, Crossentropy, CrossentropyWithLogits, Hinge, Huber, KLDivergence, LogCosH, MeanAbsoluteError, MeanAbsolutePercentage, MeanSquare, MeanSquareLog, Poisson, SquaredHinge)

- 15 initialization functions (Constant, GlorotNormal, GlorotUniform, HeNormal, HeUniform, Identity, LeCunNormal, LeCunUniform, Ones, Orthogonal, RandomNormal, RandomUniform, TruncatedNormal, VarianceScaling, Zeros)

- 7 optimizers (Adagrad, Adam, Inertia, Nadam, Nesterov, RMSProp, SGD)
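For readers unfamiliar with some of the names above, here is a minimal Python sketch of a few of the listed activation and loss functions, written from their usual textbook definitions. It is not HAIBAL code (HAIBAL is built in LabVIEW), and the function names, parameter names and default values (`alpha`, `negative_slope`, `delta`) are assumptions chosen for illustration only.

```python
import math


def elu(x, alpha=1.0):
    """ELU: identity for x > 0, alpha * (exp(x) - 1) otherwise."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def leaky_relu(x, negative_slope=0.01):
    """LeakyReLU: identity for x > 0, a small linear slope otherwise.

    The default slope is an illustrative assumption; libraries differ.
    """
    return x if x > 0 else negative_slope * x


def sigmoid(x):
    """Logistic sigmoid, split by sign to avoid overflow in exp()."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def swish(x):
    """Swish: x * sigmoid(x)."""
    return x * sigmoid(x)


def huber(y_true, y_pred, delta=1.0):
    """Huber loss for one sample: quadratic near zero, linear beyond delta."""
    err = abs(y_true - y_pred)
    if err <= delta:
        return 0.5 * err * err
    return delta * (err - 0.5 * delta)


def mean_absolute_error(y_true, y_pred):
    """Mean absolute error over two equal-length sequences."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)
```

These scalar versions are only meant to make the definitions concrete. A real library applies them element-wise over tensors and also provides their derivatives for training.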

A YouTube training channel, complete documentation on GitHub, and a website are in progress.

WORK IN PROGRESS & COMING SOON

This is a titanic undertaking and, believe me, your encouragement makes us happy and gives us a real boost. In short, we are doing our best to release this library as soon as possible.

Just a little more patience…