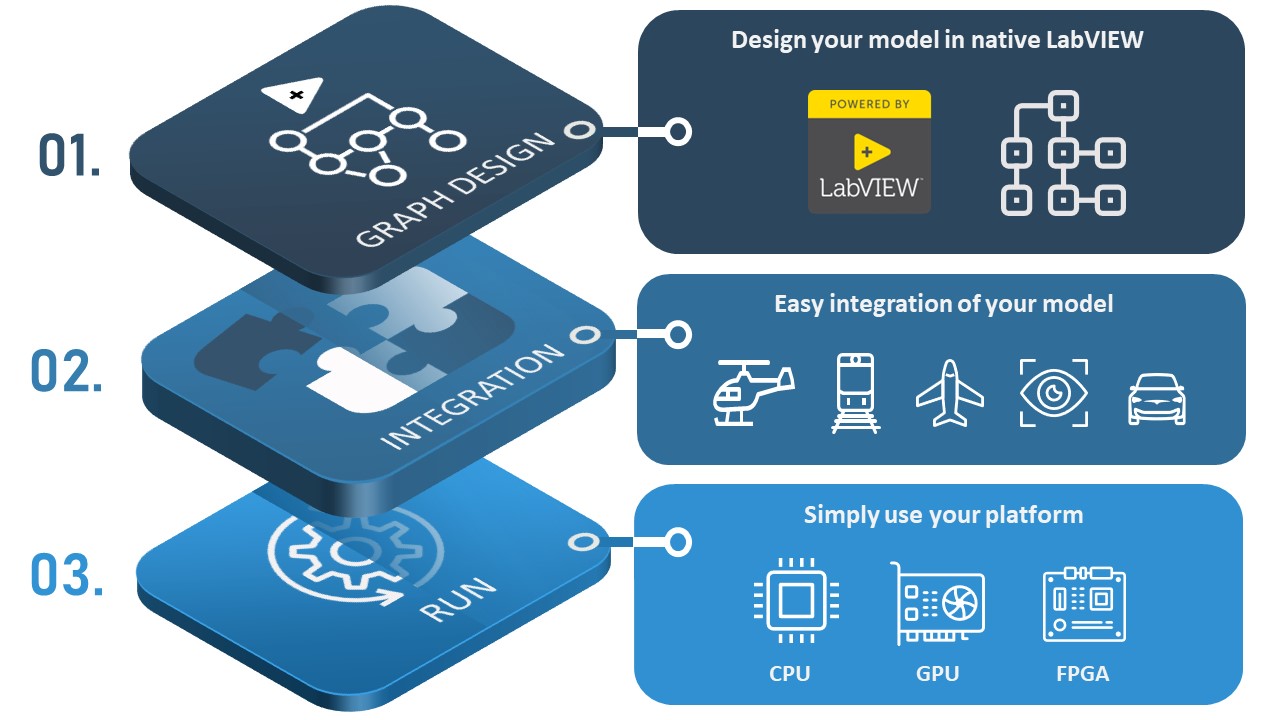

The HAIBAL software library for LabVIEW includes a complete basic development kit for creating accelerated deep learning applications in just three steps.

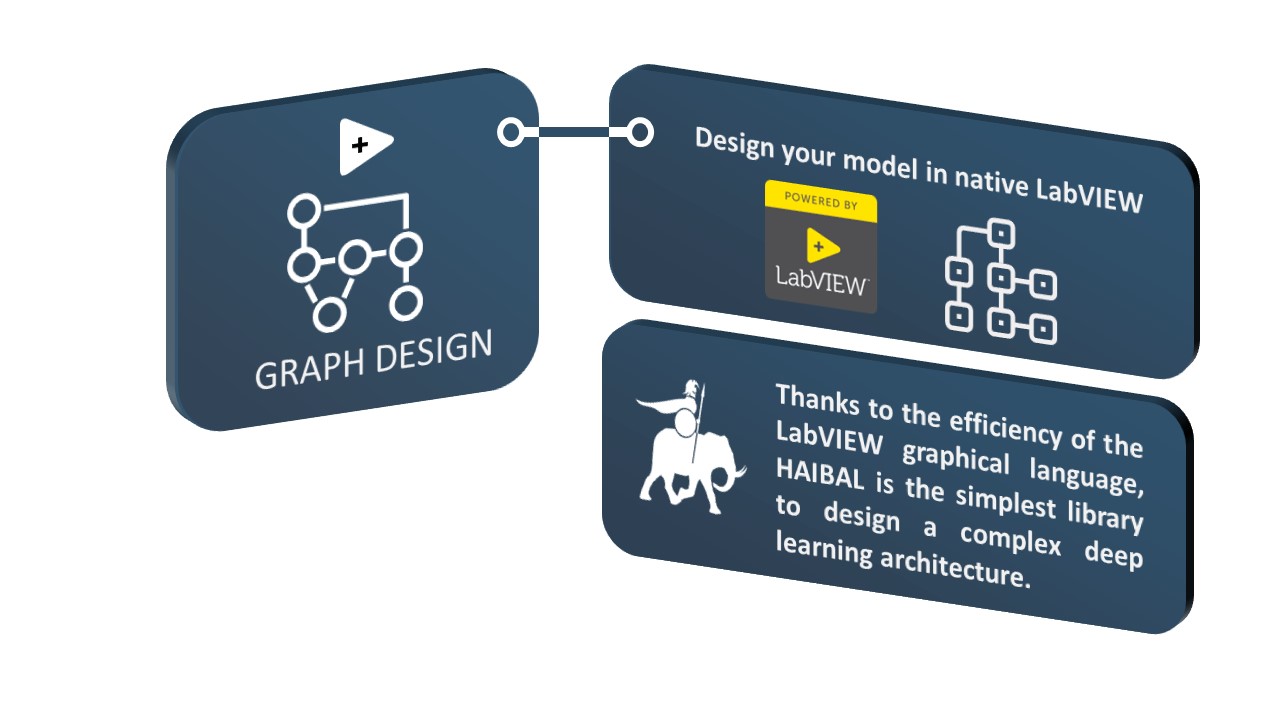

GRAPH DESIGN STEP

In the first step, we define the model. This step offers our customers two options: design the architecture of the deep neural network directly in LabVIEW, or import an existing Keras/TensorFlow model and convert it into a “HAIBAL object” (PyTorch compatibility will be available in a few months).

Any HAIBAL object can then be saved, loaded or edited. When editing, the user can modify the architecture of the neural network in LabVIEW.

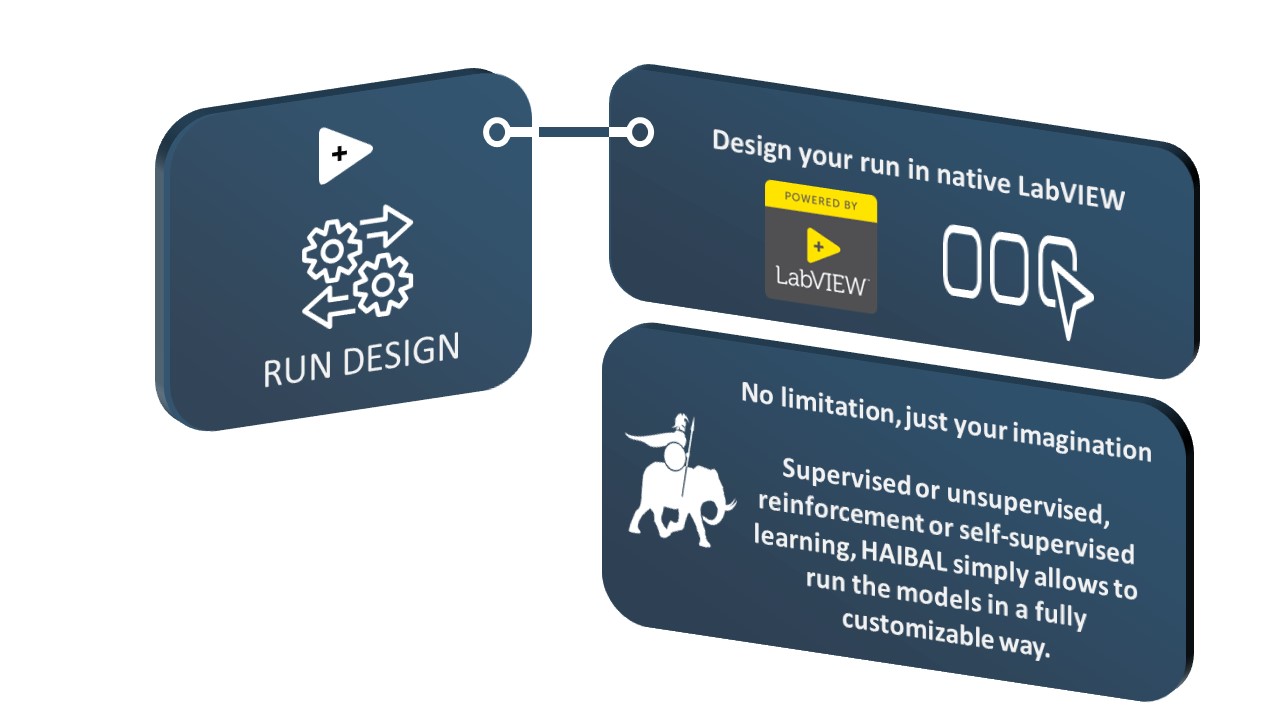

A RUN DESIGN INSIDE THE GRAPH DESIGN

The run design lets users choose how the model is run.

INTEGRATION PART

The second step consists of integrating the graph model into the flow. At this stage, we propose running it as a predictive function directly within any engineering system project.

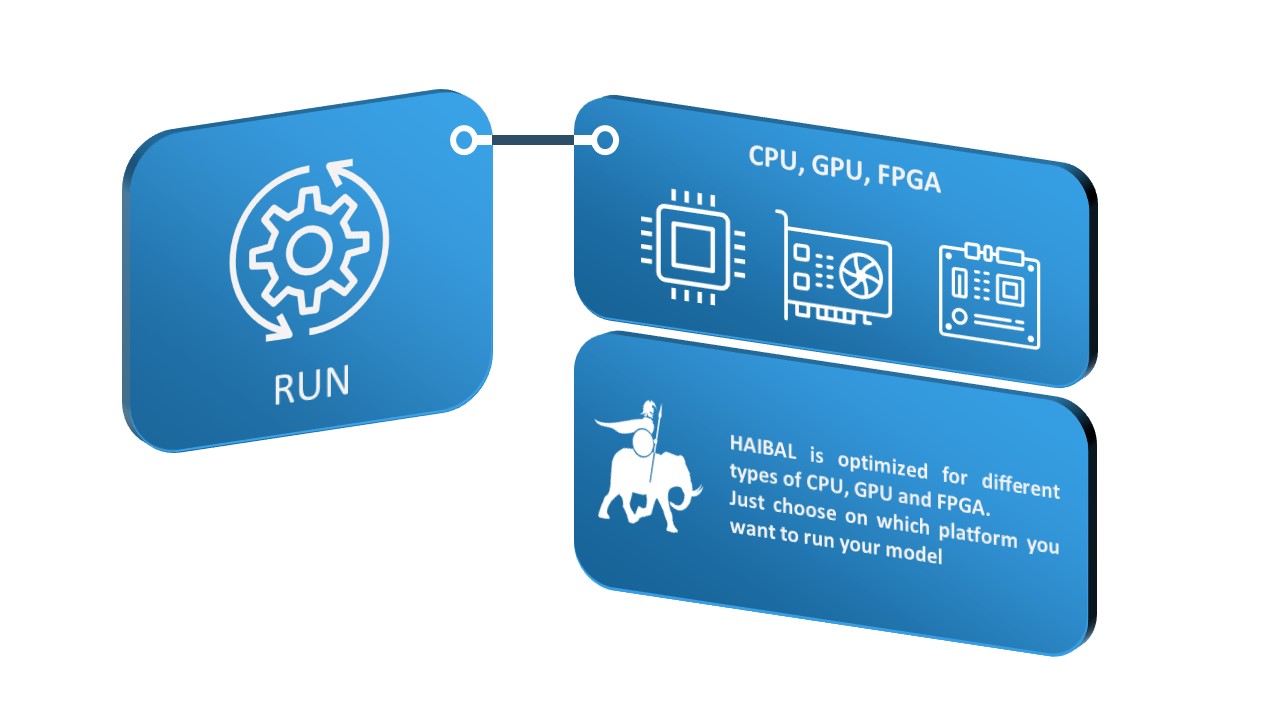

CHOOSE YOUR PLATFORM

The last step is even cooler: you just have to choose the platform on which you want to run your HAIBAL project.

NATIVE LABVIEW AND OTHER PLATFORMS

Our first approach was to develop the whole project in native LabVIEW.

In doing so, our idea is to benefit from the performance improvements that the National Instruments teams bring to LabVIEW: code execution speed, resource management, loop optimizations and improved operations (BLAS…).

We won’t hide the fact that we like NI, so this approach seemed natural to us. 🥰

C++ CODE OPTIMISATION

Nevertheless, LabVIEW still has a long way to go, so to overcome some of its shortcomings we have also developed a C++ layer that can significantly speed up model execution.

For this layer, we are following Intel oneAPI and the latest DPC++ compiler.

CUDA COMPATIBILITY

For NVIDIA GPUs, CUDA support will be implemented in the first release of HAIBAL.

WORK IN PROGRESS & COMING SOON

The work is far from finished and we still have a lot of development to do. In short, we are doing our best to release the first version of our library as soon as possible.

Just a little more patience…