A LITTLE HISTORY

In 2016, Redmon, Divvala, Girshick and Farhadi revolutionized object detection with a paper titled "You Only Look Once: Unified, Real-Time Object Detection". The paper introduced a new approach to object detection. Feature extraction and object localization were unified into a single monolithic block, and the localization and classification heads were merged as well. The resulting single-stage architecture, named YOLO (You Only Look Once), delivers very fast inference.

DATASET USED TO TRAIN THE MODEL: COCO (MICROSOFT COMMON OBJECTS IN CONTEXT)

The MS COCO (Microsoft Common Objects in Context) dataset is a large-scale dataset for object detection, segmentation, keypoint detection and captioning. It contains 328K images.

Its panoptic annotations (full-scene segmentation) cover 80 "thing" categories (such as person, bicycle and elephant) and a subset of the 91 "stuff" categories (such as grass, sky and road).

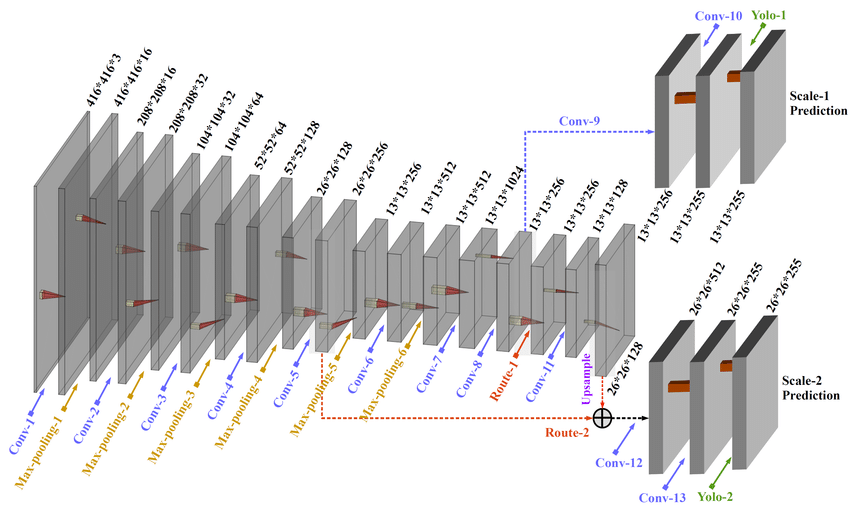

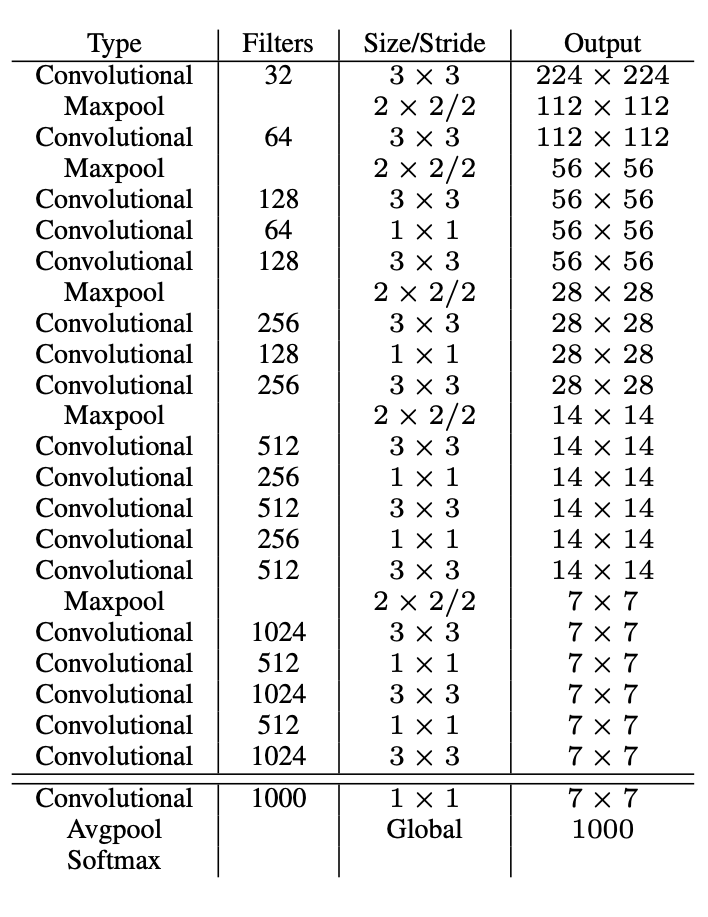

THE ARCHITECTURE

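Tiny YOLOv3 is inspired by the ResNet and FPN (Feature Pyramid Network) architectures. It combines a compact feature extractor, called Darknet-19, with two prediction heads (as in FPN), each processing the image at a different spatial compression. For a 416×416 input, one head predicts on a 13×13 grid (stride 32) and the other on a 26×26 grid (stride 16).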
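To make the two heads concrete, here is a minimal plain-Python sketch (not HAIBAL or Keras code). It computes the output shape of each head and decodes one raw prediction into a box using the YOLOv3 box parameterization. The following values are assumptions taken from the commonly published COCO Tiny YOLOv3 configuration: the 416×416 input, 80 classes, three anchors per head, strides 32 and 16, and the 81×82 anchor used in the example.

```python
import math

# Assumed configuration (commonly published COCO Tiny YOLOv3, not HAIBAL-specific).
INPUT_SIZE = 416
NUM_CLASSES = 80
ANCHORS_PER_HEAD = 3
STRIDES = (32, 16)  # coarse head first, then the finer FPN-style head


def head_shapes(input_size=INPUT_SIZE, num_classes=NUM_CLASSES,
                anchors_per_head=ANCHORS_PER_HEAD, strides=STRIDES):
    """Return (grid_h, grid_w, channels) for each prediction head."""
    # Each anchor predicts 4 box offsets + 1 objectness score + one score per class.
    channels = anchors_per_head * (5 + num_classes)
    return [(input_size // s, input_size // s, channels) for s in strides]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def decode_cell(t, cell_x, cell_y, grid_size, anchor_w, anchor_h,
                input_size=INPUT_SIZE):
    """Decode raw offsets (tx, ty, tw, th) predicted by one anchor in one cell.

    YOLOv3 parameterization:
      center = (sigmoid(t) + cell index) / grid size
      size   = anchor size (in pixels) * exp(t) / input size
    Returns (center_x, center_y, width, height) as fractions of the image.
    """
    tx, ty, tw, th = t
    bx = (sigmoid(tx) + cell_x) / grid_size
    by = (sigmoid(ty) + cell_y) / grid_size
    bw = anchor_w * math.exp(tw) / input_size
    bh = anchor_h * math.exp(th) / input_size
    return bx, by, bw, bh


print(head_shapes())  # [(13, 13, 255), (26, 26, 255)]
# All-zero offsets in cell (6, 6) of the 13x13 grid give a box centered at
# (0.5, 0.5) whose size equals the assumed 81x82 anchor.
print(decode_cell((0.0, 0.0, 0.0, 0.0), 6, 6, 13, 81, 82))
```

The 255 channels per cell are simply 3 anchors × (4 + 1 + 80). The decoded boxes from both heads would still need score thresholding and non-maximum suppression before they are drawn.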

IMPORTING A TINY YOLOV3 MODEL SAVED IN KERAS HDF5 FORMAT

The HAIBAL library can import any model saved by Keras in HDF5 format. As an application, we imported a Tiny YOLOv3 example from GitHub and ran it with our HAIBAL LabVIEW Deep Learning library.
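Before handing a downloaded `.h5` file to any importer, it can help to confirm that the file really is HDF5. The minimal sketch below does this with the Python standard library only. It looks for the 8-byte HDF5 superblock signature, which the HDF5 format places at offset 0 or, when a user block is present, at offset 512, 1024, 2048 and so on. The function names and the 64 KiB read limit are illustrative assumptions, not part of HAIBAL. The check covers only the container format. Confirming Keras-specific content, such as the `model_config` attribute Keras writes, would require an HDF5 reader such as h5py.

```python
# 8-byte HDF5 format signature: \x89 'H' 'D' 'F' '\r' '\n' \x1a '\n'
HDF5_SIGNATURE = b"\x89HDF\r\n\x1a\n"


def hdf5_superblock_offset(data):
    """Return the offset of the HDF5 signature in `data`, or None.

    The superblock may follow a user block, so the signature is searched at
    offsets 0, 512, 1024, 2048, ... (doubling), as allowed by the HDF5 format.
    """
    offset = 0
    while offset + len(HDF5_SIGNATURE) <= len(data):
        if data[offset:offset + len(HDF5_SIGNATURE)] == HDF5_SIGNATURE:
            return offset
        offset = 512 if offset == 0 else offset * 2
    return None


def looks_like_hdf5(path, max_header=1 << 16):
    """Hypothetical helper: read the first bytes of `path` and check the signature.

    Only user blocks smaller than `max_header` bytes (assumed 64 KiB) are detected.
    """
    with open(path, "rb") as f:
        return hdf5_superblock_offset(f.read(max_header)) is not None
```

For example, `looks_like_hdf5("yolo_tiny.h5")` (a hypothetical file name) returns `True` for a file written by Keras `model.save(...)` in HDF5 format and `False` for a TensorFlow SavedModel directory's protobuf or a plain text file.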

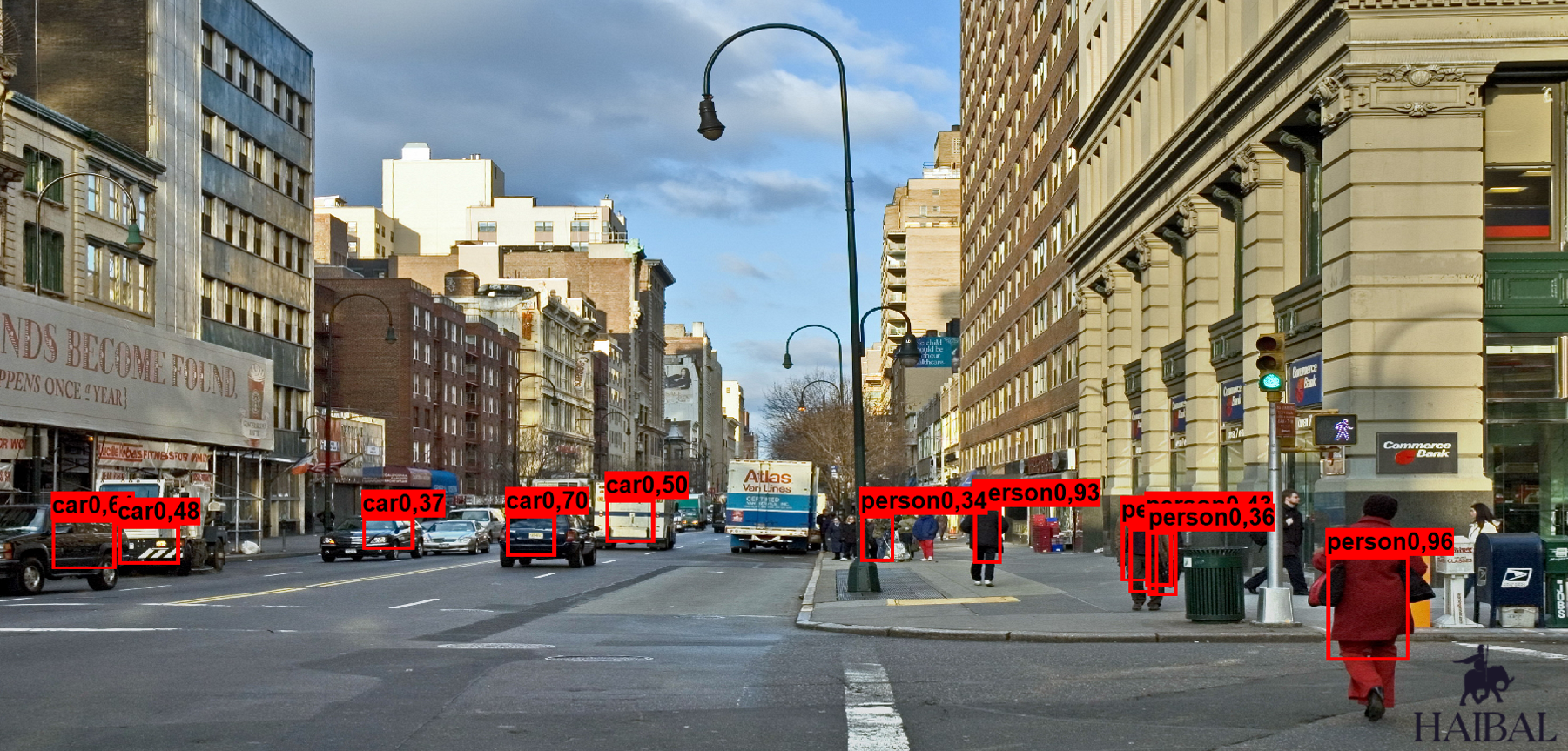

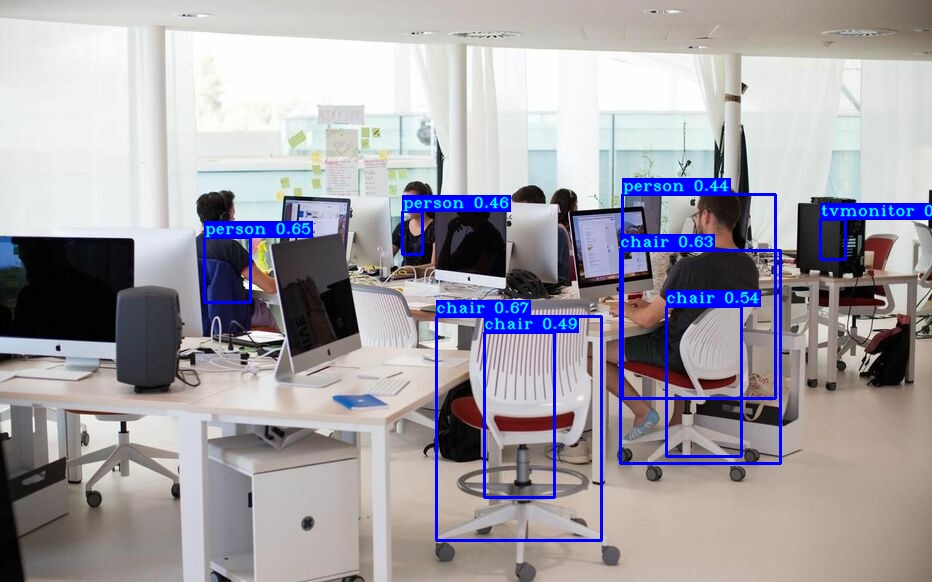

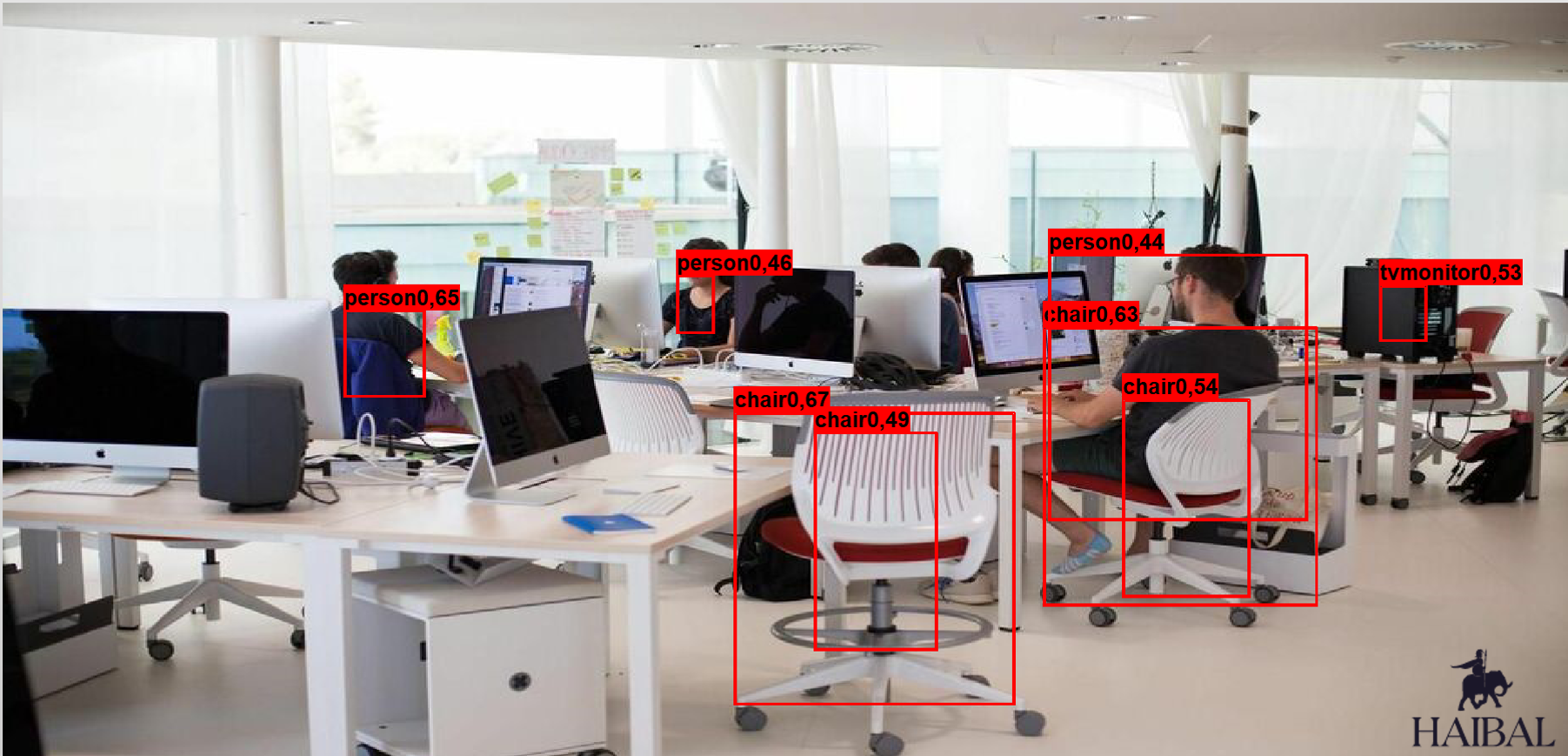

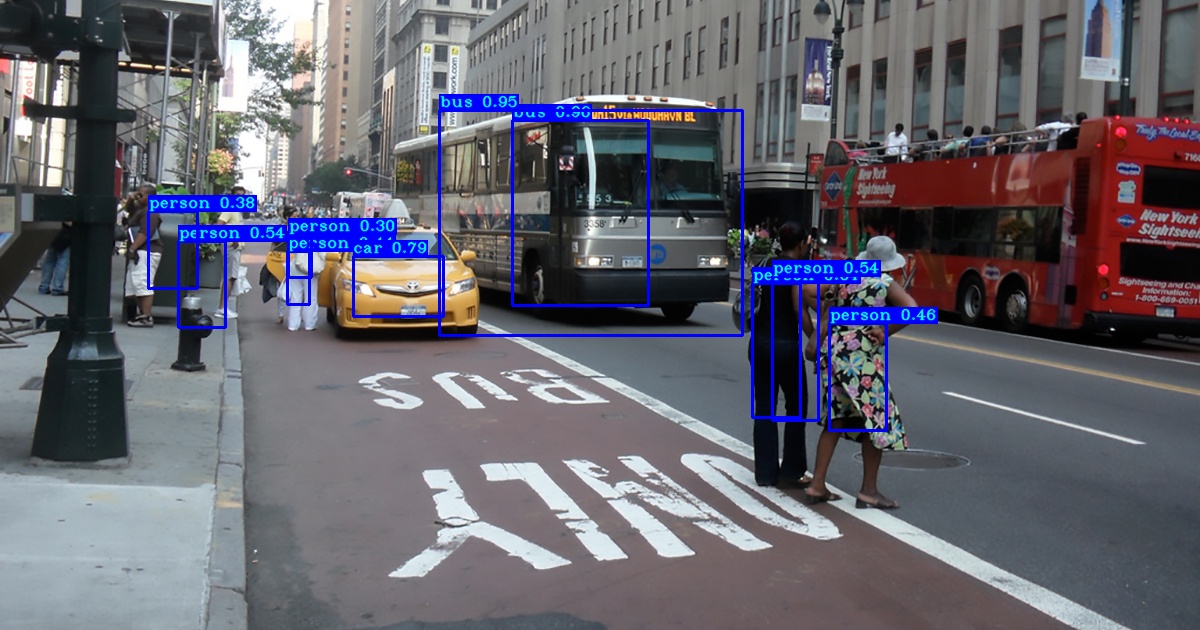

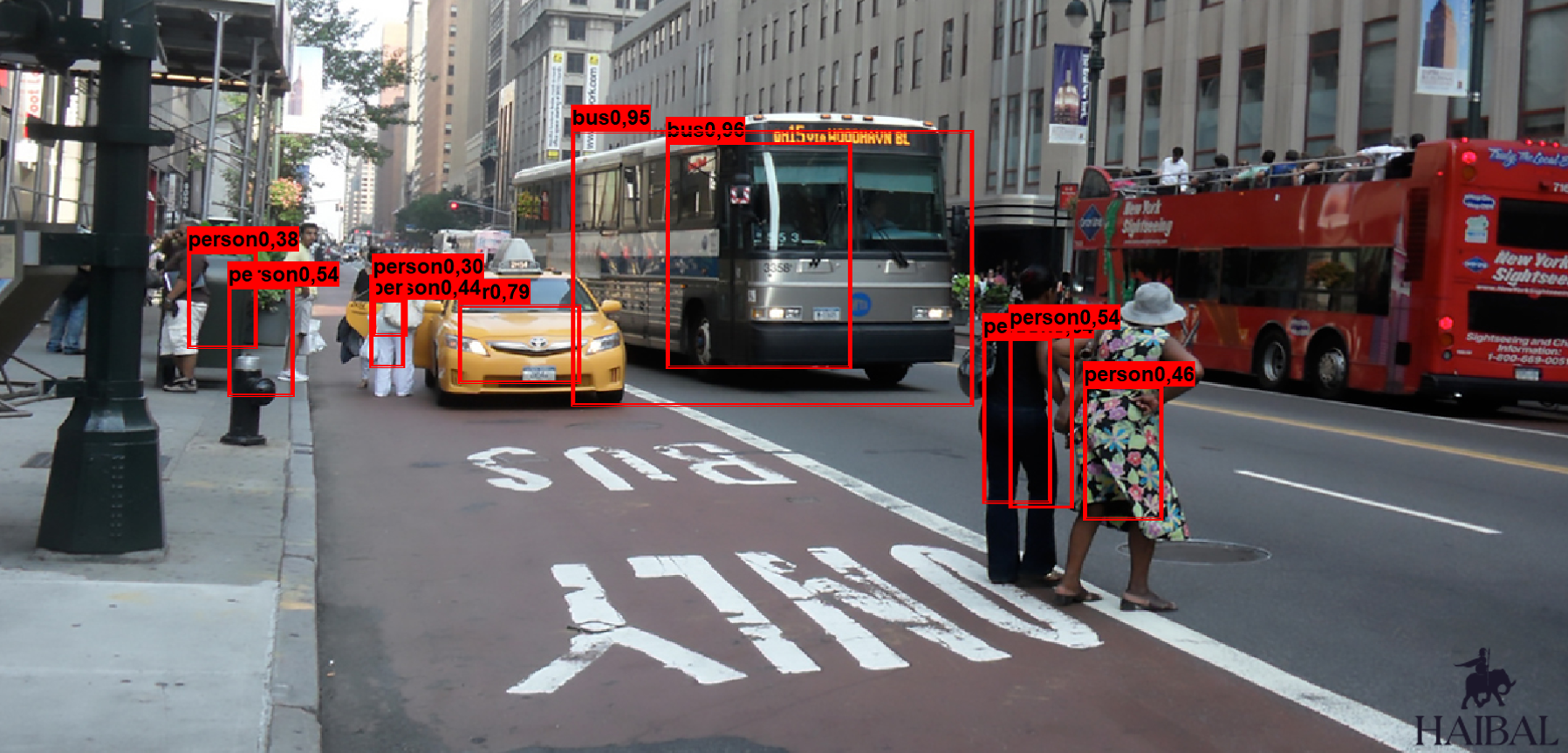

In the images below, our HAIBAL implementation predicts the same boxes as the Keras model.

Left: the image processed by Keras. Right: the same image processed with our HAIBAL library (City).

Left: the image processed by Keras. Right: the same image processed with our HAIBAL library (Open Space).

Left: the image processed by Keras. Right: the same image processed with our HAIBAL library (Street).
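The comparison above is visual. A numeric check could look like the following sketch. It matches each Keras detection to a HAIBAL detection of the same class whose intersection over union (IoU) is at least a threshold. The detection format `(class_id, (x1, y1, x2, y2))`, the function names and the 0.95 threshold are assumptions for illustration and do not describe how the results above were produced.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def same_detections(reference, candidate, iou_threshold=0.95):
    """Greedy one-to-one match of detections (class_id, box).

    Returns True if both lists have the same length and every reference
    detection pairs with a distinct candidate of the same class whose
    IoU is at least `iou_threshold` (assumed tolerance).
    """
    if len(reference) != len(candidate):
        return False
    unmatched = list(candidate)
    for cls, box in reference:
        best_index, best_iou = None, iou_threshold
        for i, (other_cls, other_box) in enumerate(unmatched):
            if other_cls != cls:
                continue
            score = iou(box, other_box)
            if score >= best_iou:
                best_index, best_iou = i, score
        if best_index is None:
            return False
        unmatched.pop(best_index)  # each candidate can be used only once
    return True


keras_boxes = [(0, (10, 10, 50, 80)), (2, (100, 120, 200, 220))]
haibal_boxes = [(2, (100.5, 120, 200, 220)), (0, (10, 10, 50, 80.2))]
print(same_detections(keras_boxes, haibal_boxes))  # True
```

Greedy matching is enough when the two implementations agree closely. For crowded scenes with many overlapping boxes, an optimal assignment (for example, the Hungarian algorithm) would be more robust.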

The pretrained Tiny YOLOv3 example model will be provided with the HAIBAL library so that our community can use and modify it.

SOFTWARE NEEDED TO RUN THE TINY YOLOV3 MODEL

- LabVIEW 2020 (or later)

- HAIBAL Deep Learning development module

- Vision Development Module 2020 (or later), recommended to capture pictures and display the overlays