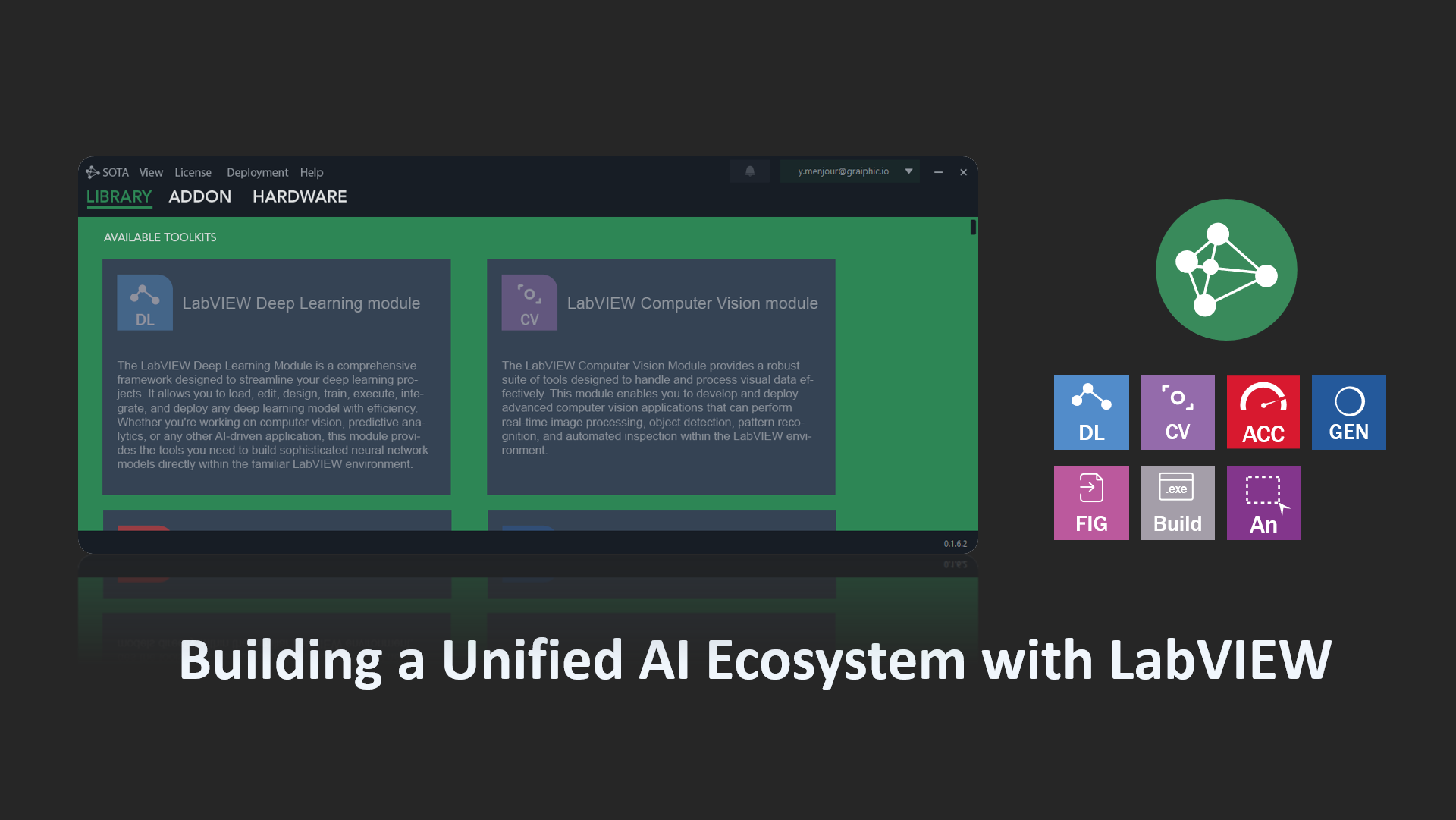

At Graiphic we publish our direction in the open. This year’s roadmap is simple to state and rich in consequences: turn ONNX from a static file into a living orchestration layer. In practice, that means one portable graph that carries AI models, control flow, and real hardware I/O, plus one cockpit, LabVIEW, to author, configure, deploy, and monitor the whole system. Instead of stitching together scripts and vendor SDKs, teams manage a single artifact that runs on CPUs, GPUs, FPGAs, and heterogeneous SoCs. The result is clarity (one source of truth), portability (the same logic across boards), and auditability (optimized graphs you can inspect and version like code).

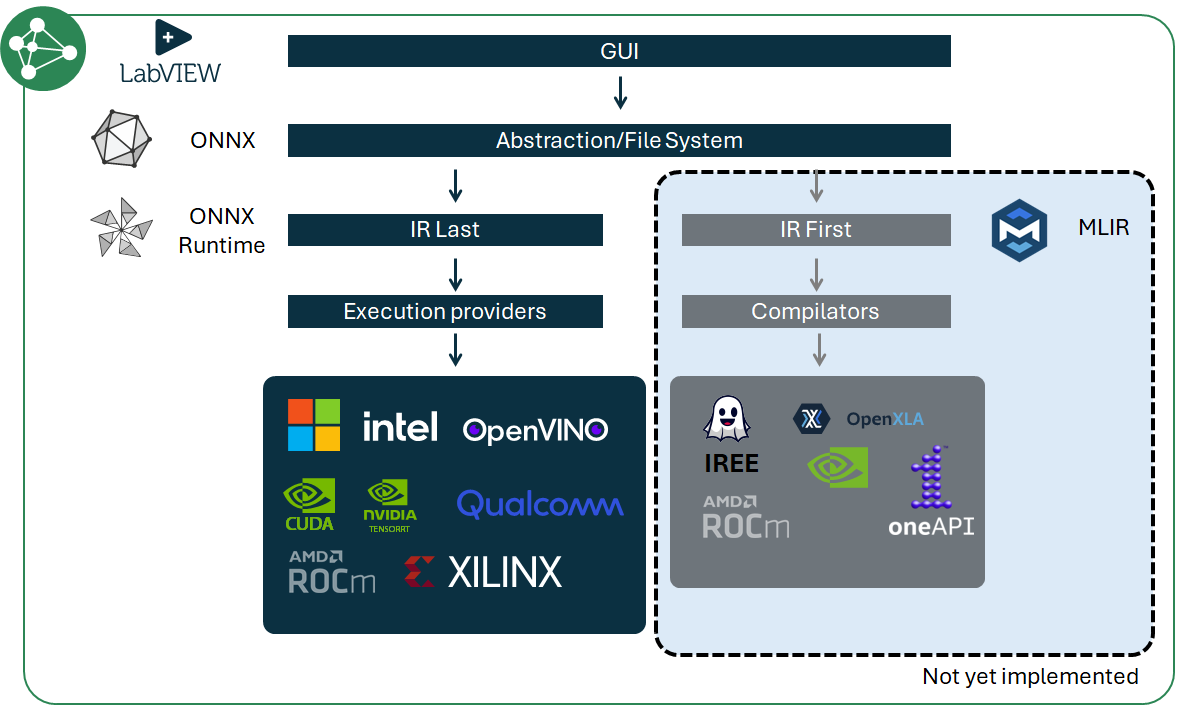

A central pillar of this vision is the compiler pivot, captured in our IR-Last vs IR-First diagram. Today we favor the IR-last path via ONNX Runtime: the ONNX graph remains the contract, while the runtime analyzes it, fuses operators, and partitions subgraphs across Execution Providers (CUDA, TensorRT, DirectML, OpenVINO, ROCm, …), which gives fast time-to-production and broad hardware coverage. Tomorrow, we open an optional IR-first lane with MLIR toolchains (IREE, OpenXLA, oneAPI) to enable ahead-of-time specialization, static-shape lowering, and tighter determinism for safety-critical deployments. Both lanes live under the same LabVIEW cockpit and the same device profiles, so each project can choose, per target, between runtime breadth and compiler-grade specialization without changing authoring habits.
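In the IR-last lane, ONNX Runtime partitions the graph according to the ordered provider list a session is created with: roughly, each provider in turn claims the nodes it supports, and whatever is left falls back to the CPU provider. The sketch below shows one way to build that list for a given board. The helper name `select_providers` and the preference order are assumptions for illustration; the provider identifiers are the real ONNX Runtime names.

```python
from typing import List, Sequence

CPU = "CPUExecutionProvider"

# Real ONNX Runtime provider names; this preference order is only an assumption.
DEFAULT_PREFERENCE = (
    "TensorrtExecutionProvider",
    "CUDAExecutionProvider",
    "ROCMExecutionProvider",
    "OpenVINOExecutionProvider",
    "DmlExecutionProvider",
)


def select_providers(available: Sequence[str],
                     preference: Sequence[str] = DEFAULT_PREFERENCE) -> List[str]:
    """Hypothetical helper: keep the preferred providers this build exposes,
    in preference order, and always end with the CPU provider so nodes no
    accelerator claims can still run."""
    chosen = [p for p in preference if p in available and p != CPU]
    chosen.append(CPU)
    return chosen


if __name__ == "__main__":
    # With onnxruntime installed, `available` would typically come from
    # onnxruntime.get_available_providers(), and the result would be passed to
    # onnxruntime.InferenceSession("model.onnx", providers=...).
    print(select_providers(["CUDAExecutionProvider", CPU]))
    # prints ['CUDAExecutionProvider', 'CPUExecutionProvider']
```

Keeping the CPU provider last mirrors the IR-last idea: the ONNX graph stays the contract, and only the provider list changes from one device profile to the next.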

Hardware is first-class in this roadmap. Our GO HW direction brings GPIO, timers, ADC/DAC, PWM, DMA, triggers, watchdogs, and registers into the graph as explicit nodes, alongside If/Loop/Scan for control. On a Jetson, Raspberry Pi, Zynq, or industrial PC, the same ONNX artifact can be compiled into a resident session with stable memory plans and zero-copy bindings where feasible. Operations gain a clean, repeatable loop: Author → Configure (profile) → Deploy (session bundle) → Monitor (metrics, taps, safe states). Hot-swap and rollback complete the operational story. This is how we bridge AI with the physical world while keeping timing budgets and safety visible, not implicit.
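Hot-swap and rollback in the Deploy and Monitor steps can be illustrated with a small state holder. This is a hypothetical sketch, not Graiphic's implementation: `SessionBundle`, `Deployer`, and the health check are invented names, and the health check stands in for whatever the Monitor step verifies (for example, latency against its timing budget).

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass(frozen=True)
class SessionBundle:
    """Hypothetical deployable unit: a graph version plus its device profile."""
    version: str
    profile: str


@dataclass
class Deployer:
    """Hypothetical hot-swap manager: tracks the active bundle and a rollback history."""
    health_check: Callable[[SessionBundle], bool]
    active: Optional[SessionBundle] = None
    history: List[SessionBundle] = field(default_factory=list)

    def hot_swap(self, candidate: SessionBundle) -> bool:
        """Activate `candidate` only if it passes the health check;
        otherwise leave the current bundle running untouched."""
        if not self.health_check(candidate):
            return False
        if self.active is not None:
            self.history.append(self.active)
        self.active = candidate
        return True

    def rollback(self) -> Optional[SessionBundle]:
        """Restore the previously active bundle; keep the current one if no history."""
        if self.history:
            self.active = self.history.pop()
        return self.active


if __name__ == "__main__":
    # Stand-in health check: reject any version tagged "-bad".
    deployer = Deployer(health_check=lambda b: not b.version.endswith("-bad"))
    deployer.hot_swap(SessionBundle("v1", "jetson"))
    deployer.hot_swap(SessionBundle("v2-bad", "jetson"))  # rejected, v1 stays active
    deployer.hot_swap(SessionBundle("v2", "jetson"))
    deployer.rollback()  # back to v1
    print(deployer.active.version)  # prints v1
```

Gating the swap on a health check means a failing candidate never replaces a working session, while `rollback` covers problems that only show up after deployment.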

Finally, we treat energy as a first-class metric. Beyond latency and accuracy, we measure joules per run through hardware counters and LabVIEW-native test benches, then make energy part of the optimization itself (e.g., multi-objective losses such as L = α·error + β·joules, where α and β weight accuracy against energy). By publishing open, reproducible benchmarks per platform, we align performance with sustainability: Green AI by design. To prevent fragmentation and accelerate adoption, we are also advocating an ONNX Hardware Working Group to standardize hardware nodes, device profiles, session manifests, and safety semantics. The destination stays the same. One graph, one engine, one cockpit: a living orchestration layer that carries your system from lab to line with transparency and confidence.
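Two parts of the energy story can be made concrete. If a hardware counter reports instantaneous power rather than cumulative energy, joules per run can be estimated by integrating the power samples over time (trapezoidal rule below). The weighted objective L = α·error + β·joules can then rank candidate configurations. The function names, sample values, and weights are illustrative assumptions, not published Graiphic measurements or APIs.

```python
from typing import Dict, Sequence, Tuple


def joules_from_power(samples: Sequence[Tuple[float, float]]) -> float:
    """Integrate (time_s, power_w) samples with the trapezoidal rule -> energy in joules."""
    energy = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        energy += 0.5 * (p0 + p1) * (t1 - t0)
    return energy


def objective(error: float, joules: float, alpha: float, beta: float) -> float:
    """Multi-objective cost L = alpha * error + beta * joules."""
    return alpha * error + beta * joules


def pick_config(results: Dict[str, Tuple[float, float]], alpha: float, beta: float) -> str:
    """Return the configuration name with the lowest L, given (error, joules) per config."""
    return min(results, key=lambda name: objective(*results[name], alpha, beta))


if __name__ == "__main__":
    # Hypothetical power trace for one run: 10 W -> 14 W -> 10 W over one second.
    print(joules_from_power([(0.0, 10.0), (0.5, 14.0), (1.0, 10.0)]))  # prints 12.0
    # Hypothetical (error, joules) results for two deployment variants.
    results = {"fp32": (0.020, 12.0), "int8": (0.028, 5.0)}
    print(pick_config(results, alpha=100.0, beta=1.0))  # prints int8
```

Raising β relative to α shifts the choice toward the lower-energy variant; with β = 0 the selection reduces to accuracy alone and picks `fp32` here.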

Read the full GO HW whitepaper

If you have any questions or suggestions, feel free to reach out at hello@graiphic.io.