A Graphical Language for a Complex World

For readers discovering Graiphic for the first time, everything starts with LabVIEW. LabVIEW is a graphical programming language built on dataflow, where systems are drawn, not written. Instead of lines of text, engineers wire logic, computation, and hardware together visually. This choice is not accidental. We believe complex systems are better understood when they can be seen. In a world where AI, hardware, and software are increasingly intertwined, visibility becomes a strategic advantage. LabVIEW is our canvas, our cockpit, and the foundation on which we decided to build something bigger.

2025: A Difficult Year, and a Necessary One

2025 was paradoxical. Financially, it was one of the most difficult years Graiphic has faced. Every decision mattered, every delay hurt, and every mistake was felt immediately. And yet it was also our most innovative year so far. Against all odds, in January 2025 we delivered the first stable production release of SOTA, our software distribution platform for toolkits, tools, models, examples, licensing systems, and drivers. It worked. And then it broke. A lot. At the time of writing, SOTA has reached its 162nd version, which means we shipped a new release roughly every two days throughout the year. It was intense, exhausting, and sometimes brutal, but it was necessary. You do not build a foundation by polishing the roof. You dig, you hit rocks, and you keep digging.

Reinventing Distribution, Not for Fun but for Survival

SOTA was never built for the pleasure of building yet another platform. It was born from a very concrete problem: how do you distribute advanced AI toolkits in LabVIEW in a way that is modern, reliable, and scalable? Existing solutions in the LabVIEW ecosystem, such as VIPM or the NI Package Manager, are solid and historical, but they were not designed for fast-evolving AI workflows. We wanted to catch up with them, and then go further. We took inspiration from modern ecosystems like Steam in the video game industry or Adobe Creative Cloud in the graphics world. SOTA is our answer: a living ecosystem, continuously updated, where software is not frozen but evolving, and where users gain clarity, speed, and long-term stability.

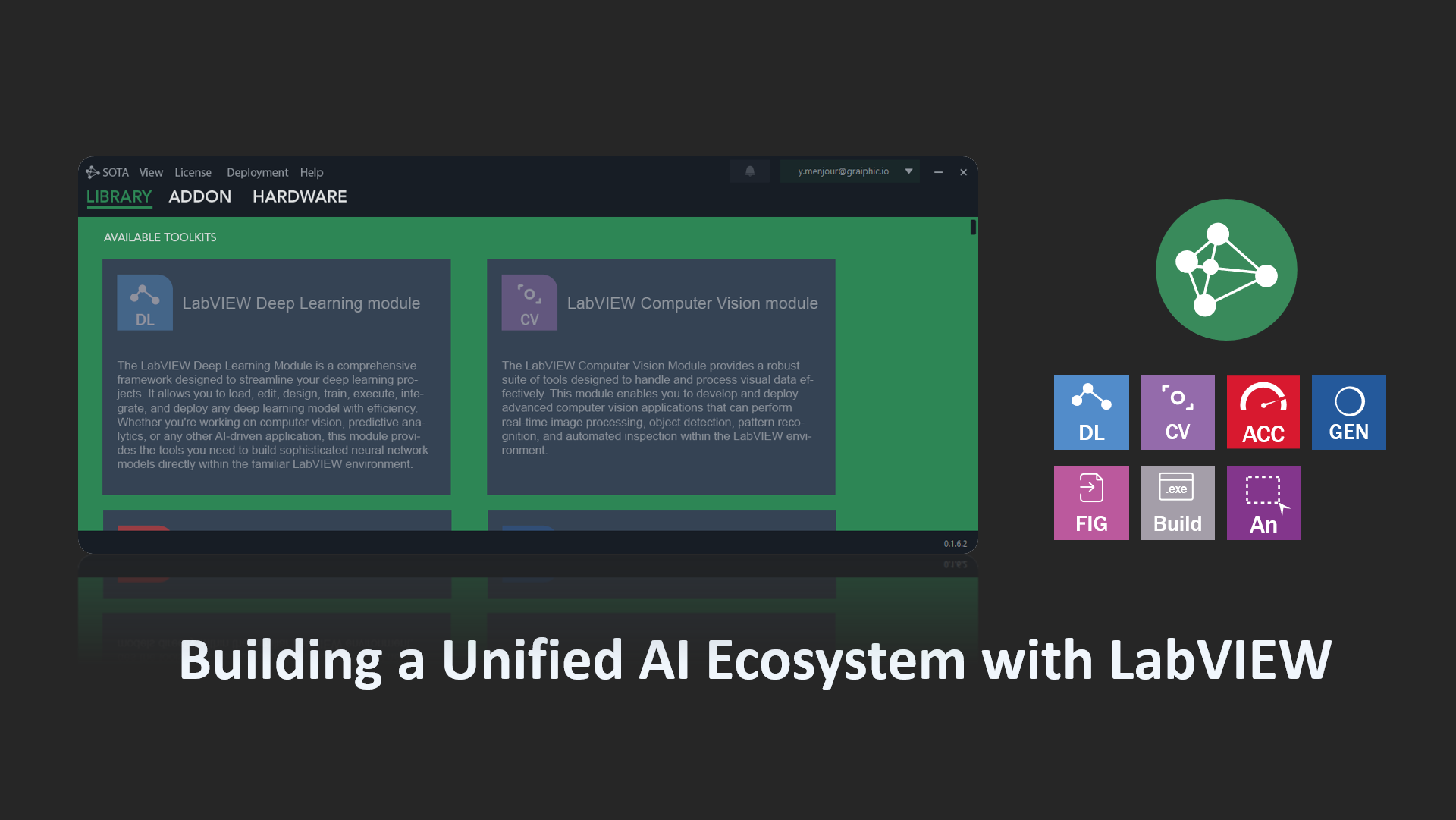

Building an AI Ecosystem, Not Just a Toolkit

The real reason SOTA exists is ambition. In 2025, we released the LabVIEW Deep Learning Toolkit, designed to cover the full AI lifecycle directly inside LabVIEW. Writing models, editing graphs, training, optimizing, and executing them efficiently are all now possible without leaving the graphical environment. Execution is optimized across multiple ONNX Runtime execution providers, including CUDA, TensorRT, DirectML, oneDNN, and OpenVINO. We deliberately chose ONNX as our open, universal, and interoperable file format, ensuring full compatibility with today’s active AI ecosystems. This choice guarantees portability, longevity, and sovereignty. ONNX is our contract. LabVIEW is our language. ONNX Runtime is our engine.
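For readers who know ONNX Runtime from its Python API rather than from LabVIEW, the multi-provider idea can be sketched as a simple priority list with a CPU fallback. This is only an illustration, not the toolkit's own API: the function name `select_providers` and the priority order are assumptions made for the example, while the provider strings are the names ONNX Runtime uses for the backends listed above.

```python
# Provider names as used by ONNX Runtime's Python API.
# The priority order below is an assumption made for this sketch.
PREFERRED_PROVIDERS = [
    "TensorrtExecutionProvider",
    "CUDAExecutionProvider",
    "DmlExecutionProvider",       # DirectML
    "OpenVINOExecutionProvider",
    "DnnlExecutionProvider",      # oneDNN
    "CPUExecutionProvider",
]


def select_providers(available, preferred=PREFERRED_PROVIDERS):
    """Return the preferred providers that are available, in priority order.

    Hypothetical helper: the CPU provider is always appended last so that
    a session can still run when no accelerator is present.
    """
    chosen = [p for p in preferred if p in available]
    if "CPUExecutionProvider" not in chosen:
        chosen.append("CPUExecutionProvider")
    return chosen


# With ONNX Runtime installed, this would typically be wired up as:
#   import onnxruntime as ort
#   providers = select_providers(ort.get_available_providers())
#   session = ort.InferenceSession("model.onnx", providers=providers)
# ("model.onnx" is a placeholder path.)
```

On a machine with only a CUDA build, `select_providers(["CUDAExecutionProvider", "CPUExecutionProvider"])` keeps both entries in that order, so nodes the GPU provider does not support can still fall back to CPU.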

From Execution to Orchestration: Inventing GO

To go further, we committed deeply to the open-source ONNX Runtime project. Our focus was clear: bring training and orchestration natively into ONNX graphs. This led to what we introduced in 2025 as GO, Graph Orchestration. By combining LabVIEW, ONNX, and ONNX Runtime, Graiphic introduced a unique concept: a single graph capable of expressing inference, training, logic, and execution flow. One artifact. One runtime. One source of truth. This was not incremental innovation. It was a structural shift. And once you see systems as graphs, it becomes very hard to go back.
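To make the "single graph" idea concrete, here is a deliberately tiny sketch in plain Python. It is not GO's format or ONNX itself: the node table, the `run_graph` helper, and the one-weight model are all hypothetical, chosen only to show inference, a training step, and a logic switch living in the same dataflow graph.

```python
from graphlib import TopologicalSorter


def run_graph(nodes, inputs):
    """Evaluate each node once its inputs are ready (dataflow order).

    `nodes` maps a node name to (function, list of input names).
    Hypothetical toy evaluator, for illustration only.
    """
    deps = {name: list(srcs) for name, (_, srcs) in nodes.items()}
    values = dict(inputs)
    for name in TopologicalSorter(deps).static_order():
        if name in values:  # graph input, already supplied
            continue
        fn, srcs = nodes[name]
        values[name] = fn(*(values[s] for s in srcs))
    return values


# One graph holding inference (pred), training (w_next), and logic (train).
NODES = {
    "pred": (lambda w, x: w * x, ["w", "x"]),
    "error": (lambda pred, y: pred - y, ["pred", "y"]),
    "train": (lambda mode: mode == "train", ["mode"]),
    # Gradient step on 0.5 * error**2 with an assumed learning rate of 0.1;
    # the weight is passed through unchanged when not training.
    "w_next": (
        lambda train, w, error, x: w - 0.1 * error * x if train else w,
        ["train", "w", "error", "x"],
    ),
}

result = run_graph(NODES, {"w": 1.0, "x": 2.0, "y": 6.0, "mode": "train"})
print(result["pred"], result["w_next"])
```

Switching `mode` to anything other than `"train"` leaves `w_next` equal to `w`, so the same artifact serves both inference and training. In real ONNX graphs, branching and iteration are expressed with control-flow operators such as `If` and `Loop` rather than a Python conditional.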

Completing the Vision with Computer Vision and Acceleration

Deep learning alone is not enough. In parallel, we developed a Computer Vision Toolkit to cover image acquisition, preprocessing, annotation, and integration with learning pipelines. This allows users to handle the full computer vision spectrum in a coherent way, which is essential for industrial and scientific applications. Then came a turning point. At NI Connect 2025 in Fort Worth, discussions with NI engineers made something obvious: acceleration should not be limited to deep learning. In August, we released the LabVIEW Accelerator Toolkit, a general-purpose graph computing toolkit that mirrors the LabVIEW palette and allows GPU acceleration by simple copy-paste of diagram logic. ONNX stopped being “just AI”. It became a general graph execution engine. That idea, again, is a Graiphic invention, and it works remarkably well.

Local Generative AI, by Design

2025 also marked the release of our GenAI Toolkit, enabling local execution of LLMs, VLMs, and SLMs directly inside LabVIEW. No cloud dependency. No hidden pipelines. This opens the door to intelligent agents fully integrated into LabVIEW software architectures. Privacy, determinism, and performance are not afterthoughts. They are guaranteed by design.

2026: From Power to Accessibility

With the industrial foundation laid in 2025, 2026 will be the year of accessibility, refinement, and business. Performance is there. Now clarity matters. Our roadmap focuses on simplifying user experience without sacrificing power. We will introduce a new generation of Express VI Assistants across all toolkits, guiding users step by step through model execution, graph orchestration, and image processing. The goal is simple: reduce friction, increase confidence, and guarantee results.

From Data to Model, Seamlessly

One of the key releases of 2026 will be a new Annotation Tool, designed to take any image dataset, annotate it efficiently, train a model, and deploy it, end to end, inside a single LabVIEW pipeline. No glue code. No fragmented tools. A complete solution that saves time, reduces cost, and delivers measurable gains.

Hardware Becomes Part of the Graph

2026 is also the year Graiphic enters hardware. We will design a first generation of intelligent, reprogrammable DAQ hardware, combining FPGA and GPU on custom boards. Software updates will redefine hardware behavior. This does not exist today in this form. The ecosystem will grow, and hardware will become a first-class citizen of graph orchestration.

Beyond the LabVIEW Runtime

Still on hardware, we will open the path toward a new runtime, complementary and potentially alternative to the LabVIEW runtime, enabling deployment on targets like NVIDIA Jetson or DGX platforms with the same simplicity and guarantees. The concepts are already designed. The graph remains the contract.

Learning Systems That Interact with the World

Reinforcement learning will also expand in 2026, with new examples, new environments, and broader coverage inside SOTA. Graiphic will start developing its own simulation environments by late 2026 or early 2027, closing the loop between perception, decision, and action.

Ambition, with a Smile and Short Nights

Let us be honest. Every new feature costs us a few months of life expectancy. The team knows it. Sometimes we sleep at the office. That is how, in 2025, we managed to demonstrate autonomous driving of an F1 car in the Formula G environment at NI Connect, using reinforcement learning as a proof of capability. We push hard, because the ambition demands it.

A Different Economic Model

Paradoxically, while building all this, we are seriously exploring making the entire Graiphic ecosystem free, with a licensing model inspired by Unreal Engine. You use it. You build with it. And only if you generate revenue thanks to it do we take a share. Fair, aligned, and sustainable. A solution designed to help you, not lock you in.

Looking Forward

Our vision is clear. Make LabVIEW the language of AI. A language to design, edit, integrate, and deploy intelligent systems on any hardware, efficiently and visually. 2026 will be a year of better communication, stronger documentation, richer GitHub content, and bold surprises. We promise results. We promise innovation. And we promise to be exactly where you do not expect us.

The graph is only getting bigger.