The growth of artificial intelligence is no longer measured only in model size or dataset scale. It is increasingly measured in megawatts. Generative AI has triggered a surge in demand for computational resources, and with it, an unprecedented appetite for electricity. This raises a fundamental question: do we address the challenge by building ever-larger power plants, or by rethinking how AI models are designed and executed?

The Big Tech Approach: Infinite Power Supply

Several tech leaders — Sam Altman, Bill Gates, Jeff Bezos among them — are investing in advanced nuclear reactors, including Small Modular Reactors (SMRs) and fusion startups. Their rationale is straightforward:

- Secure energy for hyperscale data centers: a private or dedicated nuclear plant guarantees massive, stable, low-carbon electricity.

- Strategic independence: controlling power production reduces reliance on state grids and energy markets.

- Green image: nuclear is promoted as a low-carbon alternative, aligning with net-zero pledges.

- Long-term profitability: once built, nuclear plants generate cheap electricity for decades.

- Innovation race: whoever commercializes safer, more compact nuclear solutions first gains a decisive geopolitical and economic edge.

In short, Big Tech is betting that the future of AI requires limitless centralized energy.

An Alternative Path: Efficient Local Execution

At Graiphic, we believe sovereignty is not only about owning power plants. It is also about sobriety by design:

- Over 90% of AI use cases do not require massive cloud models.

- Industrial, medical, and embedded applications are more reliable and responsive when running locally on SoCs, microcontrollers, or edge GPUs.

- Local execution avoids data transfer overheads, lowers latency, and reduces the hidden energy cost of cloud infrastructure.

Our philosophy is simple: most AI should run close to where the data is produced, not in a distant data center.

Making Energy a First-Class Metric

Traditional benchmarks focus on accuracy and latency but ignore energy. Programs such as DARPA's Mapping Machine Learning to Physics (ML2P) already stress the importance of treating energy as a scientific metric, measured in joules per inference, alongside performance.

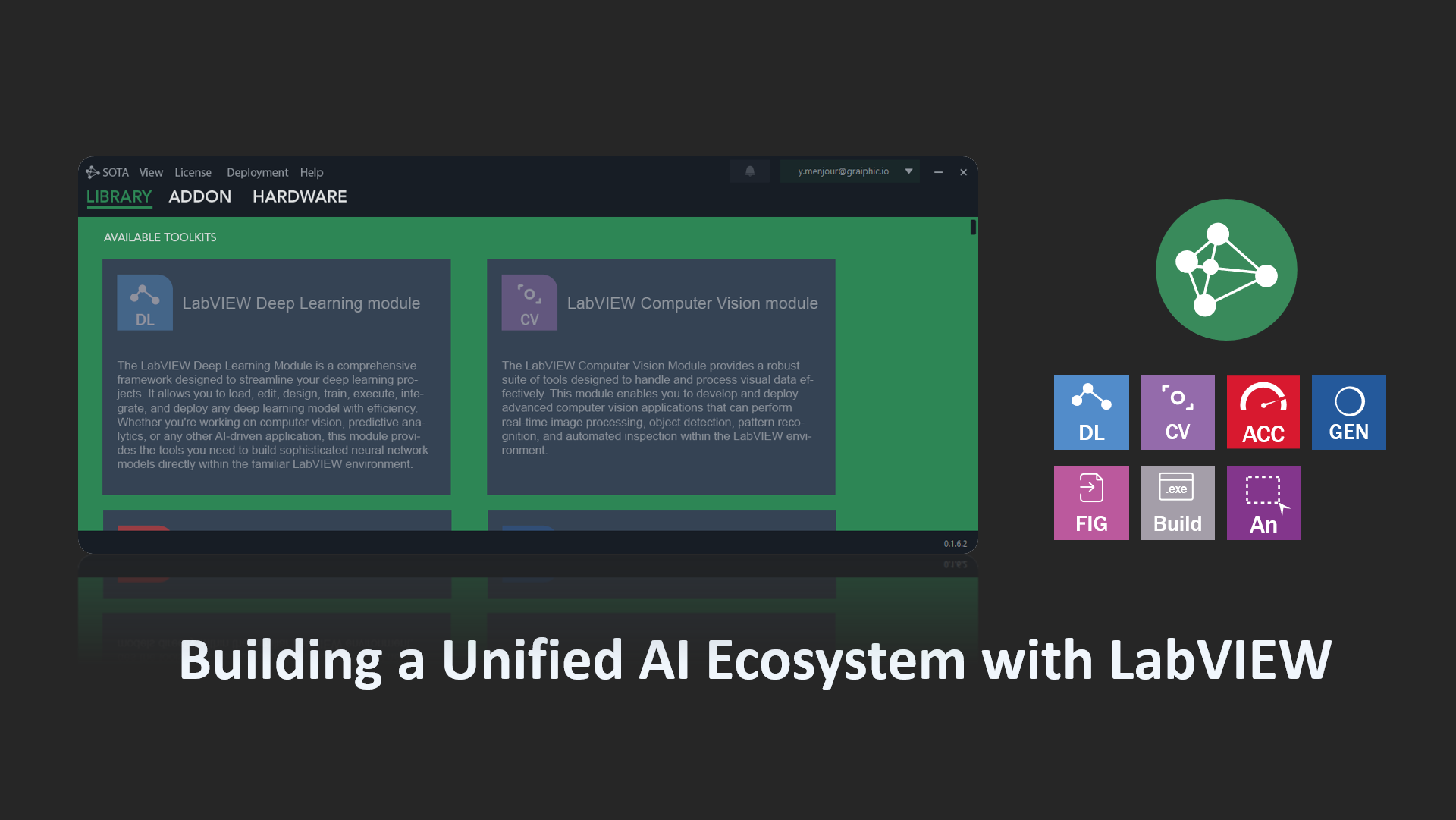
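As a rough illustration of the joules-per-inference metric, the Python sketch below integrates sampled power over time with the trapezoidal rule and divides by the number of inferences run in that window. It assumes you already have timestamped power readings in watts; how those are collected (on-board sensors, external meters, LabVIEW instrumentation) is hardware-specific and not shown. The function names and the optional idle-power subtraction are illustrative choices, not part of SOTA, GO HW, or ML2P.

```python
"""Minimal sketch: estimating joules per inference from sampled power readings.

Assumption: power (watts) is sampled at known timestamps (seconds) while a batch
of inferences runs. Function names here are hypothetical, for illustration only.
"""
from typing import Sequence


def energy_joules(timestamps_s: Sequence[float], power_w: Sequence[float]) -> float:
    """Integrate power over time with the trapezoidal rule: E = integral of P dt."""
    if len(timestamps_s) != len(power_w) or len(timestamps_s) < 2:
        raise ValueError("need at least two matching (time, power) samples")
    total = 0.0
    for i in range(1, len(timestamps_s)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        if dt < 0:
            raise ValueError("timestamps must be non-decreasing")
        # Area of the trapezoid between two consecutive power samples.
        total += 0.5 * (power_w[i] + power_w[i - 1]) * dt
    return total


def joules_per_inference(
    timestamps_s: Sequence[float],
    power_w: Sequence[float],
    n_inferences: int,
    idle_power_w: float = 0.0,
) -> float:
    """Average energy per inference, optionally subtracting an idle-power baseline."""
    if n_inferences <= 0:
        raise ValueError("n_inferences must be positive")
    total = energy_joules(timestamps_s, power_w)  # validates the samples first
    duration = timestamps_s[-1] - timestamps_s[0]
    # Remove the energy the device would have drawn anyway while idle.
    dynamic = total - idle_power_w * duration
    return dynamic / n_inferences


if __name__ == "__main__":
    # Illustrative samples only (not measurements): a 1 s window, 100 inferences.
    t = [0.0, 0.5, 1.0]
    p = [4.0, 6.0, 4.0]
    print(joules_per_inference(t, p, n_inferences=100))
```

Whether to subtract idle power is a reporting choice: total energy reflects what the device actually drew, while the dynamic figure isolates the cost attributable to the model.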

With SOTA and GO HW, Graiphic is embedding this principle directly into its technology:

- Energy-aware graphs: ONNX models enriched with energy semantics and real measurements.

- Multi-objective optimization: balancing accuracy, latency, and energy as first-class trade-offs.

- Forensic-grade monitoring: LabVIEW-native instrumentation makes joules per inference auditable, reproducible, and transparent.

This approach turns “Green AI” from a slogan into a measurable reality.
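One common way to treat accuracy, latency, and energy as first-class trade-offs is to keep only the Pareto-optimal model variants: those that no other variant beats on every objective at once. The Python sketch below is a generic illustration of that idea; the `Candidate` type, its fields, and the example numbers are hypothetical and do not describe SOTA's actual optimizer or any measured results.

```python
"""Minimal sketch: Pareto filtering over accuracy, latency, and energy.

All names and numbers are hypothetical, for illustration only.
"""
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Candidate:
    name: str
    accuracy: float        # higher is better
    latency_ms: float      # lower is better
    joules_per_inf: float  # lower is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is no worse than b on every objective and strictly better on one."""
    no_worse = (
        a.accuracy >= b.accuracy
        and a.latency_ms <= b.latency_ms
        and a.joules_per_inf <= b.joules_per_inf
    )
    strictly_better = (
        a.accuracy > b.accuracy
        or a.latency_ms < b.latency_ms
        or a.joules_per_inf < b.joules_per_inf
    )
    return no_worse and strictly_better


def pareto_front(candidates: List[Candidate]) -> List[Candidate]:
    """Keep candidates that no other candidate dominates (O(n^2), fine for small sets)."""
    return [
        c for c in candidates
        if not any(dominates(o, c) for o in candidates if o is not c)
    ]


if __name__ == "__main__":
    # Hypothetical variants of one model; the numbers are made up.
    variants = [
        Candidate("fp32", 0.92, 12.0, 0.80),
        Candidate("int8", 0.91, 5.0, 0.25),
        Candidate("pruned_int8", 0.88, 4.0, 0.20),
        Candidate("int8_slow", 0.87, 6.0, 0.30),  # dominated by "int8"
    ]
    for c in pareto_front(variants):
        print(c.name)  # prints fp32, int8, pruned_int8 (one per line)
```

Choosing a single deployment target from the front then becomes an explicit decision, for example the lowest-energy variant that still meets an accuracy floor, rather than an implicit default to the most accurate model.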

The Strategic Tension: Cloud vs Edge

The paradox is clear:

- Big Tech bets on hyperscale AI in the cloud, tied to massive energy infrastructures.

- Most real-world applications demand efficient AI at the edge, where constraints are physical, financial, and ecological.

The real challenge is not only technical but also strategic: making local AI as easy to develop and deploy as cloud AI. This is where runtimes such as ONNX Runtime, TensorRT, Core ML, and OpenVINO, along with Graiphic’s GO HW and its LabVIEW integration across platforms, play a decisive role.

Conclusion

Energy efficiency is not a side metric. It is the new frontier of AI design. While some build nuclear plants to fuel the future, we believe the smarter path is to make AI itself lighter, more efficient, and deployable everywhere.

At Graiphic, we call this sovereignty through sobriety: AI that runs where you need it, when you need it, with the energy it truly requires — no more, no less.

Graiphic needs you! 🚀

We’re building the next wave of AI and LabVIEW innovation — and you can be part of it. Here’s how:

💡 Share our work — visibility is everything.

🤝 Collaborate — R&D, industrial use cases, LabVIEW projects.

💶 Fund & invest — we’re looking for partners to scale.

🔗 Partner with us — bring our tech into your solutions.

🧪 Test & feedback — help us improve by trying our tools.

🛠 Contribute — much of our ecosystem is open source (MIT).

🗣 Spread the word — your network could be our next journey.