All layers are now coded in native LabVIEW. Our first tests of importing HDF5 models from Keras/TensorFlow and of the graph generator were successful.

STATUS OF THE LAYERS DEVELOPED IN NATIVE LABVIEW

- 16 activation functions (ELU, Exponential, GELU, HardSigmoid, LeakyReLU, Linear, PRELU, ReLU, SELU, Sigmoid, SoftMax, SoftPlus, SoftSign, Swish, TanH, ThresholdedReLU)

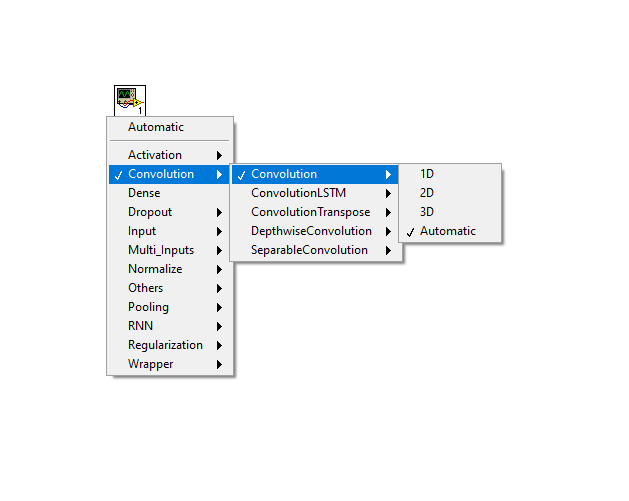

- 84 functional layers (Dense, Conv, MaxPool, RNN, Dropout…)

- 14 loss functions (BinaryCrossentropy, BinaryCrossentropyWithLogits, Crossentropy, CrossentropyWithLogits, Hinge, Huber, KLDivergence, LogCosH, MeanAbsoluteError, MeanAbsolutePercentage, MeanSquare, MeanSquareLog, Poisson, SquaredHinge)

- 15 initialization functions (Constant, GlorotNormal, GlorotUniform, HeNormal, HeUniform, Identity, LeCunNormal, LeCunUniform, Ones, Orthogonal, RandomNormal, RandomUniform, TruncatedNormal, VarianceScaling, Zeros)

- 7 optimizers (Adagrad, Adam, Inertia, Nadam, Nesterov, RMSProp, SGD)
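Because the models are imported from Keras, a small set of scalar reference formulas can help when comparing outputs against Keras. The sketch below covers a few of the activation and loss functions listed above, using their standard textbook definitions (exact erf-based GELU, the fixed SELU constants, Huber with a `delta` threshold). It is written in Python only for illustration. It is not the LabVIEW implementation, and all function names are hypothetical.

```python
import math

# Illustrative scalar reference formulas (hypothetical names), following the
# standard definitions; NOT the LabVIEW library's own implementation.

SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805

def elu(x: float, alpha: float = 1.0) -> float:
    """ELU: x for x > 0, alpha * (exp(x) - 1) otherwise."""
    return x if x > 0 else alpha * math.expm1(x)

def selu(x: float) -> float:
    """SELU: ELU scaled by the fixed self-normalizing constants."""
    return SELU_SCALE * elu(x, SELU_ALPHA)

def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def sigmoid(x: float) -> float:
    """Numerically stable logistic sigmoid (avoids exp overflow)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def swish(x: float) -> float:
    """Swish (SiLU): x * sigmoid(x)."""
    return x * sigmoid(x)

def softplus(x: float) -> float:
    """Stable softplus: log(1 + exp(x)) = max(x, 0) + log1p(exp(-|x|))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def softsign(x: float) -> float:
    """SoftSign: x / (1 + |x|)."""
    return x / (1.0 + abs(x))

def huber(y_true: float, y_pred: float, delta: float = 1.0) -> float:
    """Huber loss: quadratic for small errors, linear beyond delta."""
    err = abs(y_pred - y_true)
    if err <= delta:
        return 0.5 * err * err
    return delta * (err - 0.5 * delta)

if __name__ == "__main__":
    for x in (-2.0, 0.0, 1.5):
        print(x, elu(x), selu(x), gelu(x), swish(x), softplus(x), softsign(x))
    print(huber(0.0, 0.5), huber(0.0, 2.0))
```

Evaluating these scalar functions at a few points and comparing the results with the corresponding LabVIEW outputs is one simple way to spot-check an implementation. The comparison itself is left to the reader.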

WORK IN PROGRESS & COMING SOON

The work is far from finished, and we still have a lot of development ahead. In short, we are doing our best to release the first version of our library as soon as possible.

Just a little more patience…