In late August, the U.S. government shocked the semiconductor world by taking a 10% stake in Intel — a bold geopolitical move to secure domestic chip sovereignty. At the same time, NVIDIA unveiled Jetson Thor, a system-on-chip delivering 7.5× more AI compute at 3.5× better efficiency per watt compared to its predecessor, Orin.

Not to be outdone, AMD announced its Instinct MI350 series, promising up to 35× speed-ups on inference workloads with FP4/FP6 precision. Meanwhile, Google’s TPU v6e (Trillium) quietly entered the scene with a 67% jump in energy efficiency for cloud inference. And on our laptops? Microsoft, Intel, Apple, and Qualcomm are fighting for dominance in the on-device AI market, each shipping NPUs with 40–48 TOPS performance at single-digit watts.

The conclusion is clear: the hardware race for energy-efficient inference has never been more intense.

Why efficiency is now the currency of AI

The performance-per-watt curve has become the decisive metric across every domain:

-

Datacenter: NVIDIA’s Blackwell claims up to 25× energy savings per token on LLM inference when scaled at rack level. AMD and Google push FP4 and FP6 formats to squeeze more tokens per joule.

-

Robotics & edge: Jetson Thor (40–130 W) enables humanoid robots and industrial arms to think and react in real time without cloud dependency. Amazon Robotics, Caterpillar, Boston Dynamics, and Figure are already in.

-

PCs & mobile: Intel’s Lunar Lake (48 TOPS), Apple’s M4 (38 TOPS), and Qualcomm’s Snapdragon X Elite (45 TOPS) show that inference at the edge isn’t just about speed — it’s about battery life and autonomy.

But while silicon advances, a bottleneck remains: fragmentation.

CUDA, ROCm, Vitis AI, oneAPI, QNN SDK, OpenVINO… every vendor pushes its own toolchain. The result? Developers are forced to juggle languages, APIs, and runtimes just to deploy the same AI model across different chips.

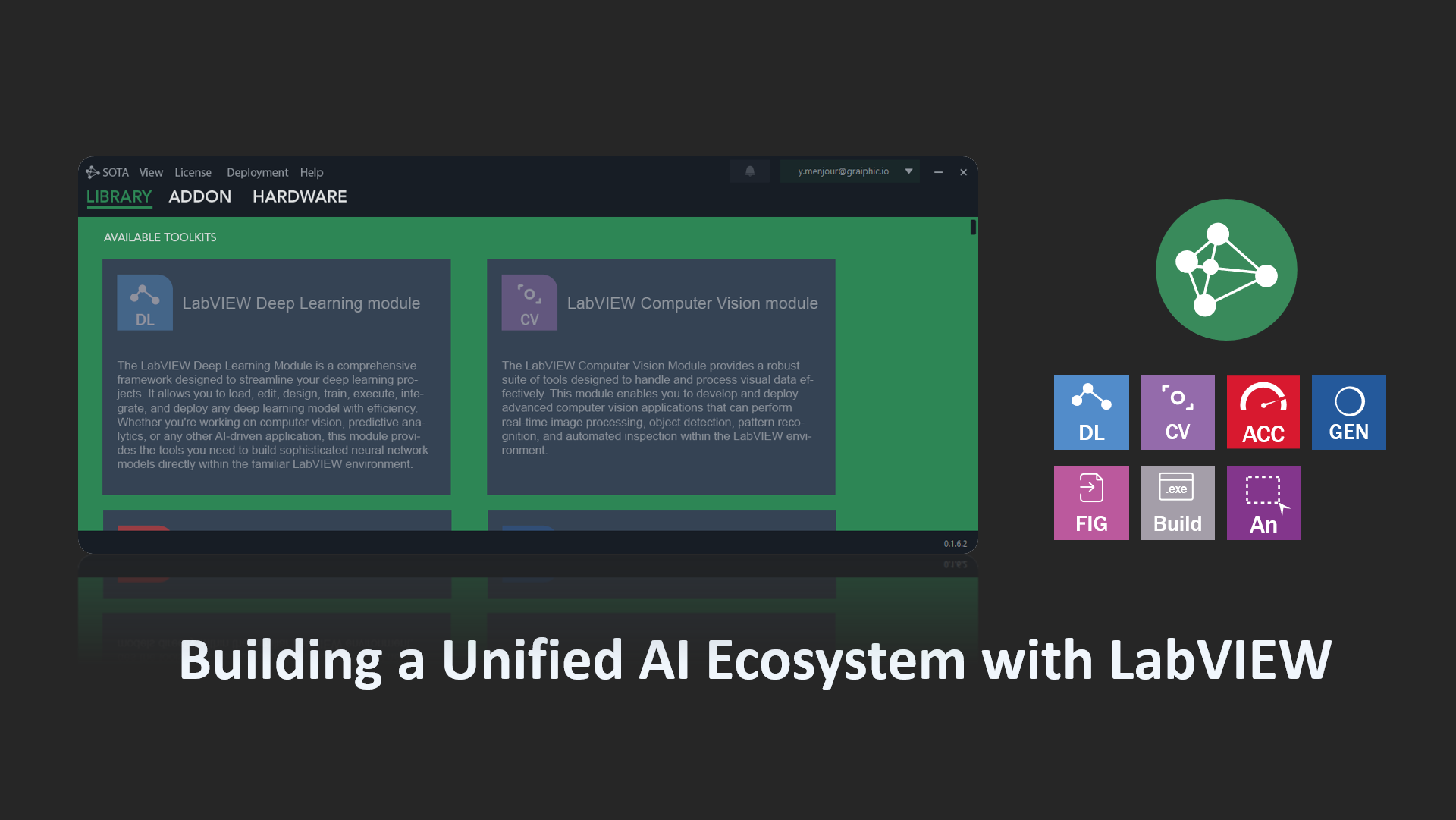

Enter Graiphic’s GO HW: One Graph, Any Hardware

At Graiphic, we believe the next great race isn’t only about TOPS or joules — it’s about who provides the universal cockpit to program them all.

That’s what we’ve been building with GO HW (Graph Orchestration for Hardware):

-

One artifact: a single ONNX graph for AI models, control logic, and I/O.

-

One engine: ONNX Runtime compiles the graph, optimizes kernels, and binds directly to hardware (GPU, NPU, DMA, GPIO).

-

One cockpit: a LabVIEW-style graphical IDE where engineers drag, drop, and orchestrate inference + control flows without vendor lock-in.

Instead of rewriting code for Jetson, Zynq, Raspberry Pi, or Intel IPCs, developers configure a device profile, compile once, and deploy. Lifecycle operations like HotSwap or Rollback are built-in, with gRPC monitoring for safety-critical domains.

The new race: not silicon, but orchestration

The hardware race is fierce — but the IDE race is just beginning.

If NVIDIA’s Jetson Thor is the new brain for robots, if AMD and Intel are fueling data centers with FP4-powered chips, then Graiphic’s GO HW aims to be the central nervous system that makes all these brains usable in a unified, efficient way.

Because in the end, what will matter most is not just how many tokens per second a chip can generate, but how many developers can harness that power easily, portably, and safely.

-

Imagine writing one AI control graph in a visual IDE, deploying it seamlessly on Jetson Thor, an Intel Lunar Lake laptop, or a Xilinx Versal FPGA.

-

Imagine robots, industrial controllers, and PCs all running from the same ONNX bundle, orchestrated graphically.

That’s the bet Graiphic is making with GO HW.

The silicon giants are winning on watts. The next giants will win on how we program them.

© Graiphic – GO HW